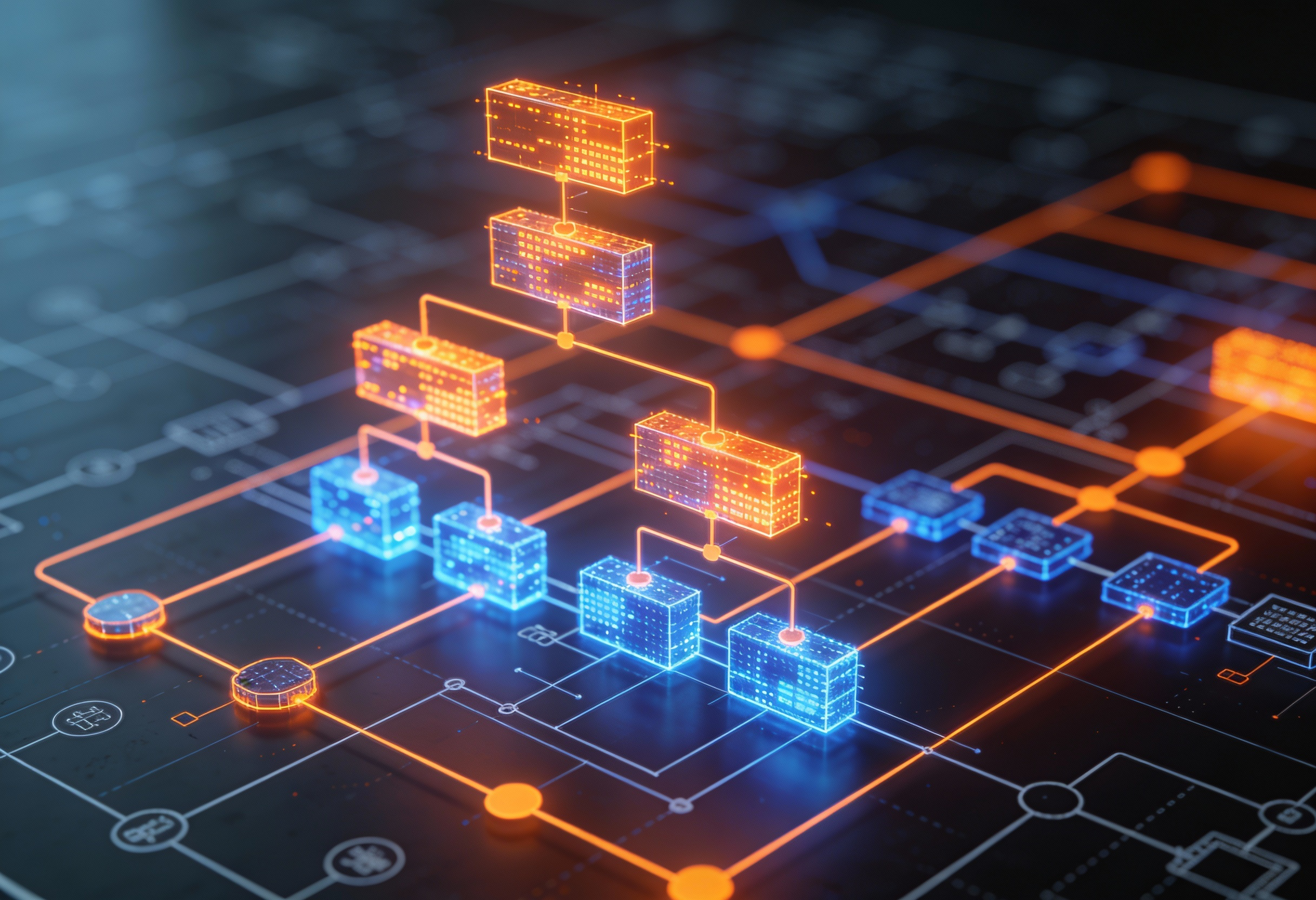

AI-Powered Patent & Sequence Intelligence Platform

Azati developed an AI-driven platform that enables the client to intelligently analyze patents and biological sequences. The solution automates search, annotation, and structuring of large-scale datasets, helping researchers and IP analysts gain actionable insights faster and more accurately.

- 50M+ documents and sequences processed

- 72% reduction in manual work via AI

- 91% search accuracy and result relevance