Get 100% matched

IT Outsourcing Experts

Accelerate your roadmap with our top developers, architects, designers, and project managers. As a leading IT outsourcing company, we provide custom software development services and IT outsourcing solutions that power digital transformation. Our expertise and commitment to excellence earn the trust of clients worldwide.

For Customers

Drive your business forward with custom technology solutions that enhance efficiency, streamline processes, and enable digital transformation.

For Careers

Become part of a team that values your expertise, encourages innovation, and supports your career development through challenging IT projects.

Refer & Earn

Share our technology services with your network and unlock special rewards while helping others access trusted IT expertise.

Tailored IT Solutions For Your Business

Custom Software Engineering

Azati provides custom software development services, delivering scalable web platforms, mobile applications, and enterprise solutions. We focus on creating robust solutions that align with your business objectives and ensure regulatory compliance.

Advanced AI & Machine Learning

Our team delivers AI development, machine learning model training, and predictive analytics to drive automation, innovation, and smart decision-making. We help businesses achieve cost savings through intelligent automation while maintaining data security standards.

Data Science & DWH

We offer data science services, big data analytics, data engineering, and cloud data warehouse solutions for real-time insights and reporting. Our IT outsourcing services include comprehensive disaster recovery planning and secure data management strategies.

DevOps and System Administration

Azati's DevOps engineers automate CI/CD pipelines, manage cloud infrastructure, and provide IT consulting to optimize deployment workflows. Our IT outsourcing service model ensures seamless integration with your existing business processes.

Quality Assurance

Our QA experts ensure software quality through automated testing, manual QA, performance testing, and bug tracking across all platforms. We maintain rigorous service levels to guarantee the reliability of every solution we deliver.

UX/UI Design

We create modern UI/UX designs for mobile apps, SaaS products, and enterprise platforms, focused on usability, accessibility, and brand consistency. Our software engineering approach prioritizes user experience alongside technical excellence.

Industries We Cover with Software Development Services

Tell Us About Your Project

Schedule a call

Insights from Our Tech Blog

AI in Customer Experience 2026: Complete CX & AI Guide

How AI Handles Holiday Traffic Surges

Expert Systems vs AI: Complete 2026 Guide | Differences Explained

AI-Powered Progressive Delivery: Smart Feature Flags in 2026

Top 10 LLM Development Companies in 2026

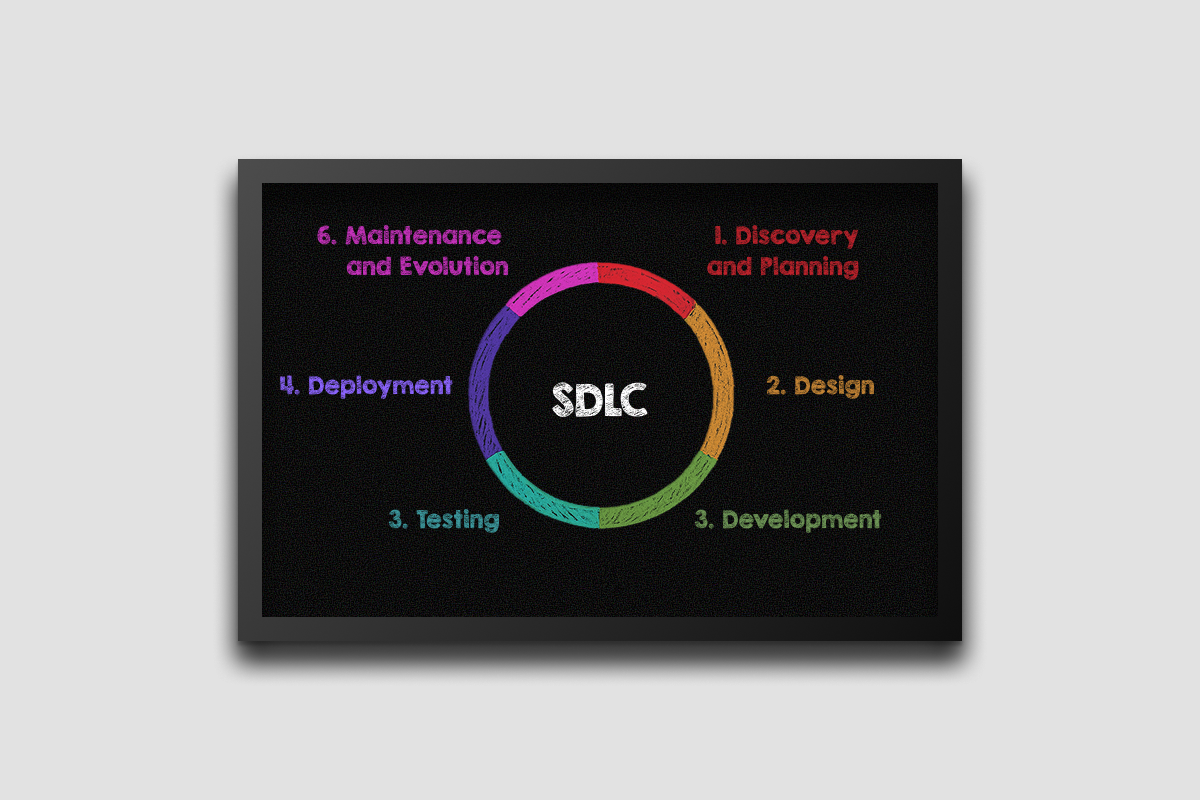

From Discovery to Deployment: Understanding the Custom Software Development Lifecycle

Recommendation Systems: Benefits And Development Process Issues

Enterprise Software Development: Streamlining Complex Business Workflows

Custom Web Application Development: How to Build Scalable Solutions

Custom Software Engineering Services: A Complete Guide to Building Tailored Software Solutions

How Artificial Intelligence Is Transforming Industries

AI-Powered NLP in Healthcare: 7 Game-Changing Applications Transforming Patient Care in 2025

Why Choose Us for IT Outsourcing

Fast Project & Team Setup

As a leading IT outsourcing company, Azati resolves team setup and organizational issues within one day, enabling a fast project start. Our streamlined onboarding lets you focus on your core business while we handle the technical implementation.

Stress-free Delivery

Our development team brings your ideas to life efficiently, using optimal technologies and proven methodologies to deliver high-quality products. We provide transparent service level agreements and maintain strict service levels throughout the project lifecycle.

Business Goals Understanding

We are technical experts committed to achieving your business objectives through close collaboration and tailored solutions. Our consulting services extend beyond development to include strategic planning and digital transformation initiatives.

Large Talent Pool

Azati provides access to highly skilled, responsible, and proactive specialists and engineers who deliver effective and reliable software solutions. Our staff augmentation model offers flexible engagement options for any project scope.

Reasonable Pricing

Our expertise, combined with competitive pricing, makes us a trusted strategic partner for development and consulting services. We deliver significant cost savings compared to in-house development while maintaining superior quality standards.

Transparent Cooperation

We ensure a transparent development process with clear service level agreements, helping your ideas evolve clearly and successfully from concept to completion. Our approach includes regular progress and performance metrics reporting.

Remote Work Expertise

With over 20 years of remote work experience, we have refined our skills and optimized our business processes to support effective workflows. Our IT outsourcing service model is built on proven methodologies and secure collaboration frameworks.

Outstaffing Options

Azati offers flexible IT outsourcing solutions, including staff augmentation, providing both full dedicated teams and individual specialists to strengthen your on-site or remote team. This outstaffing approach gives you complete control over team composition.

What Clients Say About Our IT Outsourcing Services

FAQ

Azati offers comprehensive IT outsourcing solutions, including custom software development services, AI/ML engineering, data science, DevOps, QA testing, and UX/UI design. Our software development services are tailored to your specific business process requirements while ensuring data security and regulatory compliance. As a trusted IT outsourcing company, we provide both full project delivery and staff augmentation options.

We implement industry-leading data security protocols, maintain strict regulatory compliance standards, and include comprehensive disaster recovery plans in all engagements. Our IT outsourcing service framework includes signed service level agreements that clearly define security responsibilities, encryption standards, and access controls to protect your intellectual property and sensitive business data.

Partnering with Azati as your IT outsourcing company delivers significant cost savings by eliminating recruitment expenses, reducing infrastructure costs, and providing access to specialized talent without full-time commitments. Our competitive pricing model for custom software development services lets you focus on core business activities while achieving up to 40% cost reduction compared to maintaining an in-house development team.

As one of the most agile IT outsourcing companies, we can assemble your team and begin development within one business day. Our streamlined onboarding process, clear service level agreements, and established business processes ensure rapid project initiation without compromising quality or security standards.

Our software development services span 15+ industries, including Finance, Healthcare (Life Sciences), E-learning, Retail, Real Estate, and more. Our case studies demonstrate successful IT outsourcing solutions across diverse sectors. Our consulting services are informed by deep domain knowledge and industry-specific regulatory compliance requirements.

Yes, our IT outsourcing service includes comprehensive post-launch support, maintenance, and enhancement services. We maintain agreed service levels through continued collaboration, offering everything from bug fixes to feature development. Our IT consulting extends throughout the entire product lifecycle, ensuring your solution evolves with your business needs.

We maintain full transparency through established service level agreements, regular status updates, and dedicated project managers. Our proven business processes include daily standups, sprint reviews, and comprehensive reporting. As an experienced outsourcing firm, we adapt to your preferred communication tools and methodologies while maintaining clear service levels.

Absolutely. Our staff augmentation model allows you to extend your team with specialized experts for specific projects or long-term engagements. This approach provides the flexibility to scale your team up or down based on project demands while you keep full control over development priorities and processes.