In today’s hypercompetitive software marketplace, leaders are under constant pressure to deliver faster, more reliable releases without ballooning budgets. Coding assistants powered by large language models (LLMs) promise to be a silver bullet, reducing hours of manual coding to minutes and fueling leaps in productivity. But while the upside appears enormous, the risks of flawed output, over-reliance, and lost expertise should keep every executive awake at night.

This article explores the substantial value coding assistants can deliver and the dangers lurking beneath the surface.

The Promised Value of Coding Assistants: Speed and Cost Savings

Tech giants have claimed that coding assistants cut development time by 30% to 40%, sometimes more, in tasks like writing test cases, scaffolding boilerplate code, and speeding up routine refactors. For a business investing, say,

It is no wonder, then, that 74% of companies surveyed by a leading consultancy in 2023 reported adopting or actively experimenting with coding assistants. From a bottom-line standpoint, a well-trained LLM can rapidly produce code templates based on repeated patterns. Think of it as an automated junior developer, one that performs best on repetitive tasks such as applying known frameworks, generating skeleton files, or building standardized UI components.

The immediate payoff is that developers spend fewer hours on boilerplate and rote tasks, and businesses can often reassign them to more strategic, revenue-focused projects where code quality truly differentiates the enterprise.

The Ticking Time Bomb: Inaccuracy and Rework

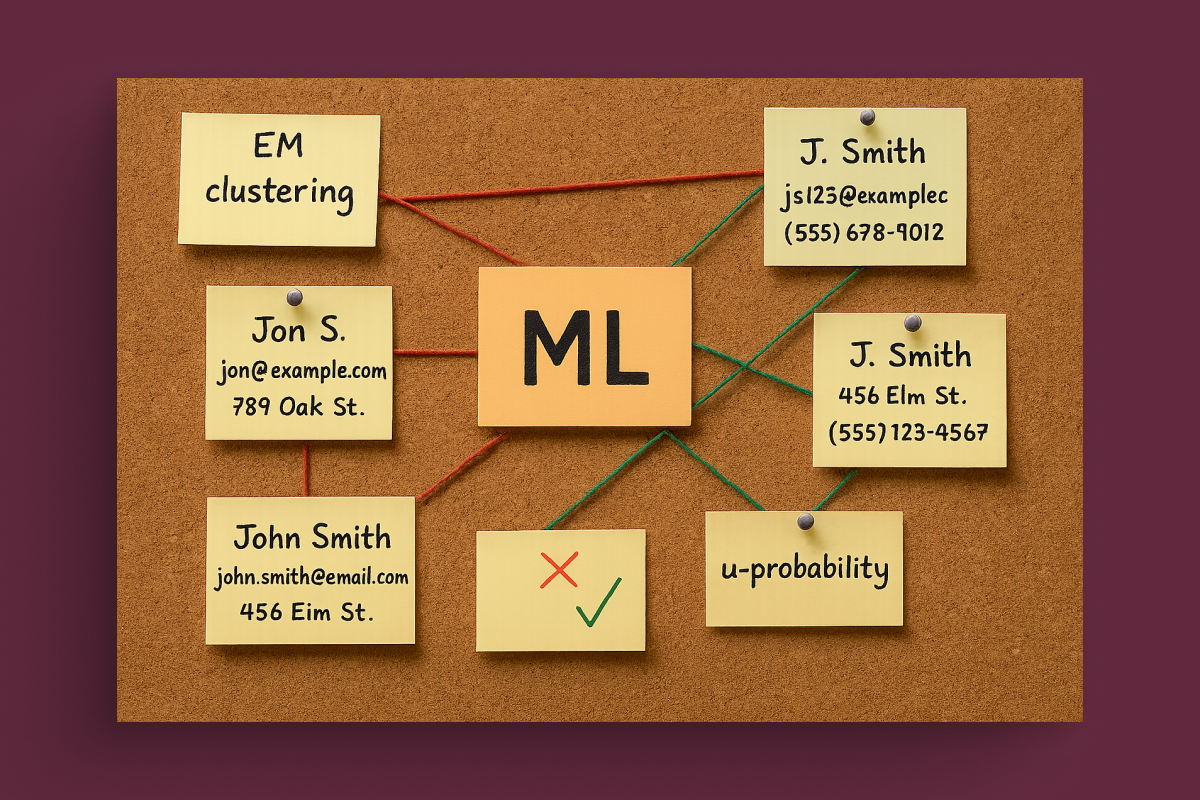

However, the dream scenario can quickly turn into a nightmare if companies treat coding assistants as a drop-in substitute for fully qualified engineers. LLMs are not all-knowing. They do not truly “understand” a project the way a seasoned developer does; rather, they make text-based predictions from patterns observed in large bodies of public code repositories and documentation. As a result, more nuanced or specialized tasks, such as implementing custom design systems, bridging multiple frameworks, or building complex integrations, can quickly degrade into erroneous suggestions.

In one reported case, a lead developer asked for help styling a website header component in Vue. The model repeatedly conflated framework versions, mixing Vue 3 features with Vue 2 syntax, and the developer wasted half a day chasing phantom CSS directives that did not exist.

Over multiple sessions, the developer noticed that even clarifying prompts failed to elicit a correct approach. Wasted hours equal wasted money; if too many engineers lose time cleaning up AI errors, the hoped-for savings vanish.

There is also the question of brand and reliability risk. Every QA manager aims for robust testing, but if an LLM suggests “shortcut” test coverage (or hallucinates a nonexistent function parameter), it can introduce latent defects. Multiply that by thousands of lines of code merged across an entire enterprise platform. Failing to detect these issues quickly can force an expensive round of rework right before release or, worse, damage the reputation of your product.
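To make the “hallucinated parameter” risk concrete, here is a small illustrative sketch (not drawn from a real incident). A hypothetical assistant suggestion passes the keyword `reversed=` to Python’s built-in `sorted()`, whose actual parameter is `reverse`. The function names `top_scores_suggested` and `top_scores_fixed` are invented for illustration. Because Python only validates keyword arguments when the call executes, the defect can sit unnoticed until a test (or a static type checker) exercises that line.

```python
def top_scores_suggested(scores, n):
    """Hypothetical assistant output: `reversed` is NOT a keyword that the
    built-in sorted() accepts; the real parameter is `reverse`."""
    return sorted(scores, reversed=True)[:n]


def top_scores_fixed(scores, n):
    """Corrected version using the documented `reverse` keyword."""
    return sorted(scores, reverse=True)[:n]


def test_top_scores():
    # The bad keyword is only detected when the line actually runs,
    # so even one small test exposes it before release.
    try:
        top_scores_suggested([3, 9, 4], 2)
    except TypeError as err:
        print(f"suggested version rejected: {err}")
    else:
        raise AssertionError("expected a TypeError from the hallucinated keyword")
    assert top_scores_fixed([3, 9, 4], 2) == [9, 4]


test_top_scores()
```

The point is not the specific bug but the cost curve: a one-line test catches it in seconds, while the same mistake discovered in production can take far longer to trace.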

Balancing Act: Cultivating Human Expertise

The fear factor goes beyond immediate costs: some business leaders worry that junior engineers will grow dependent on coding assistants at the expense of fundamental programming practices. Terms like “code literacy” exist for a reason: overreliance on an LLM that frequently guesses or invents solutions can erode a team’s technical proficiency.

Onboarding new hires is already expensive. According to various HR metrics, integrating a single junior developer can cost up to $60k once training and ramp-up time are factored in. When AI-suggested solutions “spoon-feed” code, novices might skip the critical process of learning how to debug or interpret official documentation. In the worst case, that knowledge gap surfaces at a critical juncture where the AI truly flounders, leaving the team ill-equipped to fix issues quickly.

Where to Go from Here

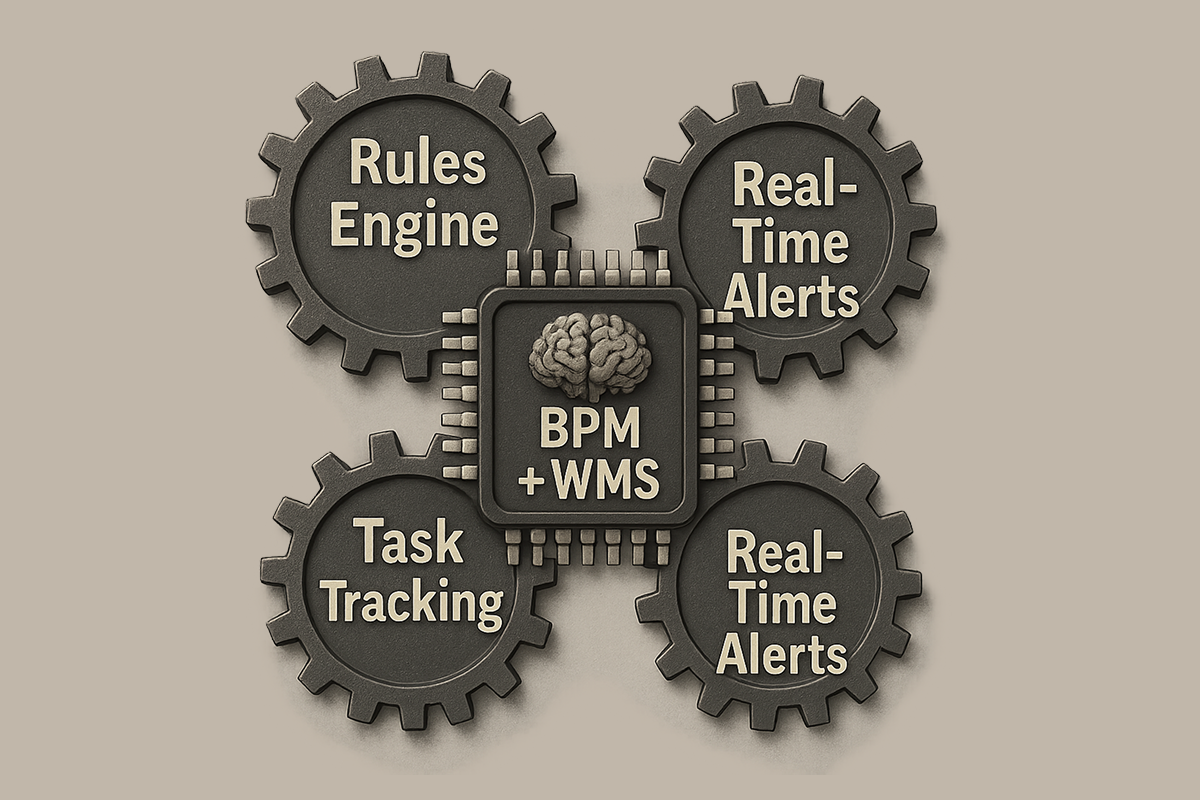

At Azati, we have seen clients dramatically boost their development velocity while maintaining top-tier quality, provided they adopted responsible practices. Integrating an LLM can unlock productivity, but it requires clear guidelines and robust validation steps.

- Implement a quality gate: Ensure every piece of generated code is reviewed by senior staff and thoroughly tested. If an LLM’s suggestions fail any stage of your pipeline, revise or discard them.

- Encourage domain expertise: Cultivate an environment where junior developers still learn frameworks “the hard way.” Keep some tasks, such as critical architectural decisions, the sole province of experienced engineers.

- Choose your LLM (and plugin tools) wisely: Different coding assistants excel at different tasks. Investing in specialized solutions, such as those trained on domain-specific data or offering larger context windows, can pay for itself in fewer errors.

- Monitor the ROI: Track effort saved vs. time spent reworking flawed code. If your error rate climbs, you may need to adjust prompts, retrain staff, or pause usage.
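One lightweight way to act on the ROI point is to log, per sprint, the hours an assistant saved and the hours spent reworking its output, then watch the ratio between them. The sketch below assumes such a log exists; `SprintLog`, `needs_review`, and the 0.5 threshold are hypothetical choices for illustration rather than an established metric, and the sample numbers are invented.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SprintLog:
    """Hypothetical per-sprint record; the field names are illustrative."""
    hours_saved: float      # estimated hours saved by accepting assistant output
    hours_reworking: float  # hours spent fixing or discarding flawed output


def net_hours_saved(logs: List[SprintLog]) -> float:
    """Total effort saved minus total rework across all sprints."""
    return sum(log.hours_saved - log.hours_reworking for log in logs)


def rework_ratios(logs: List[SprintLog]) -> List[float]:
    """Rework hours per hour saved, one value per sprint."""
    return [
        log.hours_reworking / log.hours_saved if log.hours_saved > 0 else float("inf")
        for log in logs
    ]


def needs_review(logs: List[SprintLog], threshold: float = 0.5) -> bool:
    """Flag usage for review if the latest sprint's rework ratio exceeds the
    threshold, or if the ratio rose in every sprint (a climbing error trend)."""
    ratios = rework_ratios(logs)
    if not ratios:
        return False
    climbing = len(ratios) > 1 and all(a < b for a, b in zip(ratios, ratios[1:]))
    return ratios[-1] > threshold or climbing


# Invented sample data for three sprints.
logs = [SprintLog(40, 10), SprintLog(35, 20), SprintLog(30, 28)]
print(net_hours_saved(logs))  # 47
print(needs_review(logs))     # True
```

In this invented example the team still comes out ahead on net hours, yet rework per hour saved rises every sprint (0.25, then about 0.57, then about 0.93). That trend, rather than the running total, is the signal to revisit prompts, training, or usage before the savings disappear.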

Fear of Missing Out vs. Fear of Failure

It is easy to get swept away by the triumphant marketing claims that LLM-based coding assistants can perform “10x faster than human engineers”. But it is equally important to heed the real-world stories from developers whose 30-minute back-and-forth with an LLM yielded no net progress. The promise is similar to past leaps in automation: certain tasks will become drastically simpler, but only under watchful oversight by skillful professionals.

Pushing for full-scale reliance on coding assistants without building knowledgeable teams around them can create an illusion of efficiency. When that illusion breaks, the rework and lost momentum can be far more costly than if the team had simply tackled the difficult code from scratch. On the other hand, ignoring the technology altogether risks missing the wave of automation your competitors may embrace.

Striking the right balance is the challenge. With proper oversight, LLMs can provide immediate ROI, improve time-to-market, and reduce developer fatigue. Without it, watch out: complex code can slip into chaos, and those “savings” can morph into a serious liability that neither your CFO nor your reputation can afford.

If your organization is preparing to harness coding assistants or needs help mitigating the risks, the time to act is now. The future rewards bold leaders who adopt transformative technologies responsibly. But no one wants to be the cautionary tale for letting AI code run rampant. By framing a measured strategy, you can ensure coding assistants become your productivity hero rather than your next costly mistake.

Ready to harness the power of coding assistants without the risks? Connect with our experts to develop a secure and effective implementation strategy today.