Short answer: yes. Long answer: keep reading, because the cost of ignoring LLMs is already eating market share.

The silent spread of large-language-model (LLM) technology inside enterprises looks remarkably like the early cloud years. One moment everyone treated AWS as a toy; two quarters later, CAPEX budgets collapsed by double digits.

We are witnessing the same inflection point with generative AI, only faster. At Azati we measured - in real customer environments - how LLM-powered workflows reshape head-count allocation, defect rates, release velocity and even close-rate in B2B sales.

The numbers demand attention:

- 37% drop in average engineering hours per feature after six weeks of selective LLM augmentation.

- 22% reduction in post-release defects when code reviews are reinforced by model-driven static checks.

- 11-to-1 ROI (year one) on a multilingual support bot that cost under $85k to build and push to production.

These are not theoretical. They are averaged across five mid-market clients in SaaS, fintech and logistics in 2024.

Example 1: Human resources automation

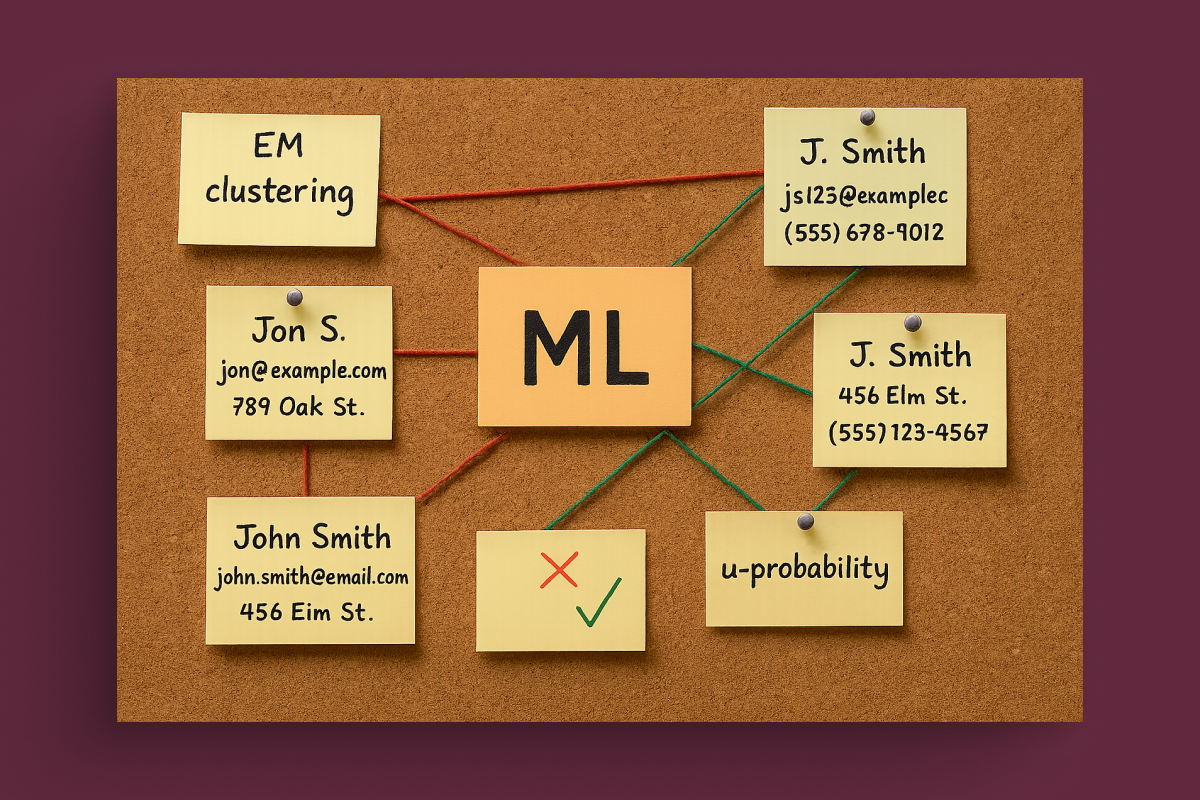

Leveraging a bespoke Large Language Model fine-tuned on thousands of engineer CVs and project post-mortems, our AI-driven talent-matching system automatically parses the entire resume database, understands each candidate’s skills, tech stack, domain expertise, and project outcomes, and then cross-references this knowledge with the specific requirements of any new initiative.

Within seconds, it ranks the most compatible specialists, explains its reasoning in clear, human-readable language - highlighting relevant achievements, tool proficiencies, and cultural fit - and can even assemble a balanced, end-to-end delivery team covering all critical roles. The result is a data-backed, transparent hiring recommendation that slashes search time, eliminates bias, and raises project success rates.

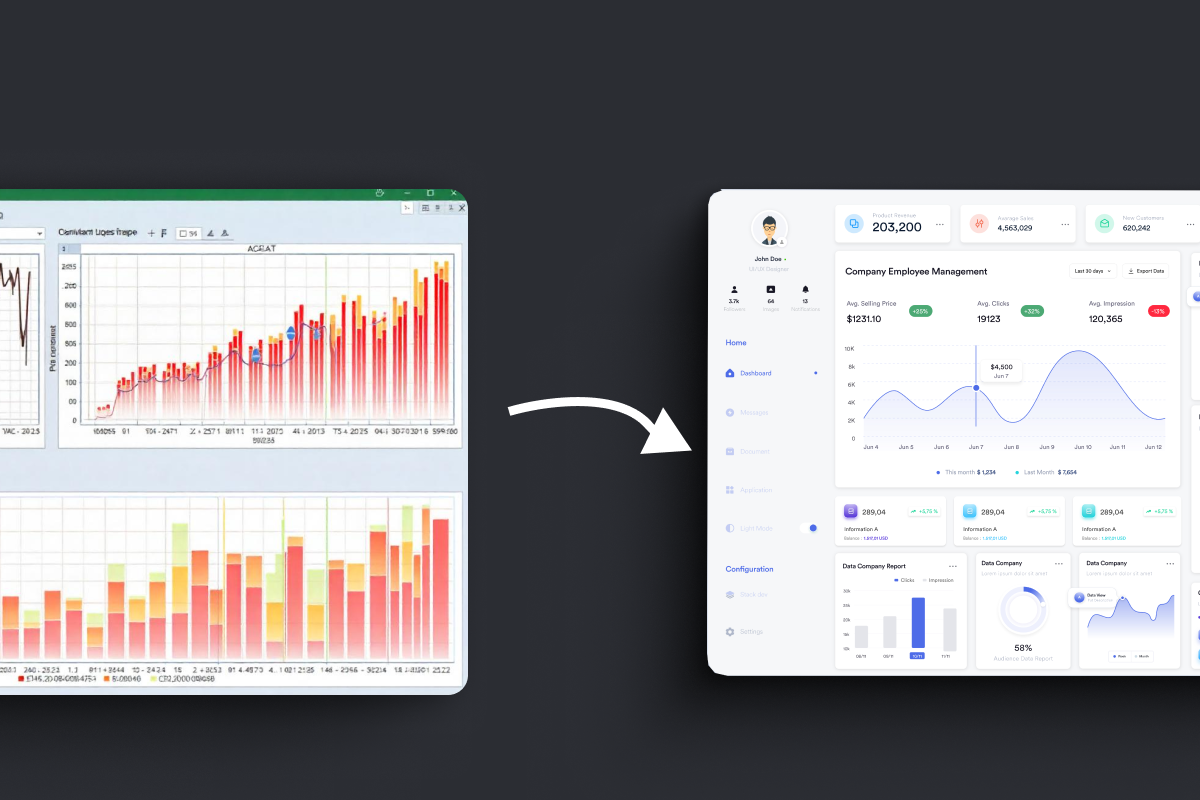

Example 2: Old code to new code

A fintech client running a 900k-line legacy codebase wanted static analysis but balked at six person-months of manual type annotation. A 28B-parameter model fine-tuned on their codebase generated 88% of the type annotations in three hours of compute time, with one engineer overseeing the output.

Example 3: Legal paperwork assistant

Powered by an internal Large Language Model, our Contract Review Assistant ingests NDAs, MSAs, SoWs, and other agreements, automatically flags clauses that deviate from our playbook on liability, IP ownership, data protection, and payment terms, and highlights missing provisions required by regional regulations or corporate policy.

The tool delivers an annotated, side-by-side comparison against our approved language, cites the exact passages at issue, and proposes redlined alternatives that legal can accept or further edit in a click - compressing hours of manual scrutiny into minutes while reinforcing compliance and lowering exposure to contractual risk.

From two-lunch-break ideas to production assets

The magic here is not supernatural intelligence; it is speed. Tasks that once required a half-day deep dive now finish before the pizza gets cold. At a venture-backed e-commerce startup, we saw engineers burn their evenings trying to automate a server-driven UI form builder. An LLM-generated script fixed the quirks in under three hours. Velocity wins talent loyalty; talent retention saves recruiting fees. Everyone smiles - except competitors.

“But LLMs are wrong 30-40% of the time”

True, but irrelevant. Tools yield value only when coupled with human judgment. In practice, we see two defensive layers:

- Confidence-scored responses: each response carries a confidence score; anything under 0.8 is routed to a human gatekeeper.

- Evaluators in the loop: lightweight automated tests enforce schema, security rules, and PII redaction before results flow downstream.

With those guards, the cost of error stays lower than the opportunity cost of inactivity.
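The two layers can be sketched in a few lines of Python. This is a minimal illustration, not the production pipeline: the `ModelResponse` type, the `ticket_id`/`reply` schema, and the email-only redaction rule are hypothetical, and the confidence score is assumed to be supplied by whatever scoring method the deployment uses. Only the 0.8 threshold comes from the text.

```python
import json
import re
from dataclasses import dataclass
from typing import Optional, Tuple

CONFIDENCE_THRESHOLD = 0.8                  # threshold quoted in the text
REQUIRED_KEYS = {"ticket_id", "reply"}      # hypothetical output schema
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class ModelResponse:
    text: str          # raw model output, expected to be a JSON object
    confidence: float  # score in [0, 1]; how it is computed is an assumption


def redact_pii(text: str) -> str:
    """Mask email addresses. A real filter would cover far more PII types."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)


def evaluate(response: ModelResponse) -> Tuple[str, Optional[dict]]:
    """Return (route, payload), where route is 'auto' or 'human'."""
    # Layer 1: low-confidence answers go to a human gatekeeper.
    if response.confidence < CONFIDENCE_THRESHOLD:
        return "human", None

    # Layer 2a: schema check. Malformed output never flows downstream.
    try:
        payload = json.loads(response.text)
    except json.JSONDecodeError:
        return "human", None
    if not isinstance(payload, dict) or not REQUIRED_KEYS.issubset(payload):
        return "human", None

    # Layer 2b: redact PII before the result leaves the guardrail.
    payload["reply"] = redact_pii(str(payload["reply"]))
    return "auto", payload


if __name__ == "__main__":
    ok = ModelResponse('{"ticket_id": 1, "reply": "Contact bob@example.com"}', 0.93)
    print(evaluate(ok))
    print(evaluate(ModelResponse('{"ticket_id": 2, "reply": "hi"}', 0.55)))
```

Failing closed, with every rejected response going to a person rather than being silently dropped, is a design choice of this sketch; it keeps the cost of a model error bounded by the cost of a human review.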

The strategic threat of sitting still

McKinsey predicts generative AI could inject $4 trillion into global GDP, yet its survey shows 60% of executives remain in “watch mode.” Watch mode quietly forfeits market share. Consider customer support: companies that deployed multilingual LLM agents report an average 20% higher CSAT and 15% lower churn within six months. How many CFOs can stomach a 15% churn gap while they “experiment”?

What about data security?

Deployment patterns have evolved. Instead of shipping sensitive code to a public API, customers fine-tune distilled models behind a VPC. Techniques such as retrieval-augmented generation (RAG) keep raw production data out of the model's weights: relevant records are fetched at query time and can be filtered by the caller's access rights. Encryption at rest, role-based access, and immutable logging make the stack SOC 2-friendly. In many cases, the risk is lower than that of offshore contractors working on personal laptops.
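To make the RAG pattern concrete, here is a toy sketch of retrieval gated by role-based access. Everything in it is illustrative: the `Document` type, the role names, and the word-overlap scoring are assumptions chosen to keep the example self-contained, and a real deployment would more likely use a vector index. The point it shows is that the model only ever sees the few passages the caller is allowed to read, assembled into the prompt at query time.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: FrozenSet[str]  # hypothetical role-based access list


def tokenize(text: str) -> Set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    return {w.strip(".,:;!?").lower() for w in text.split()} - {""}


def retrieve(query: str, docs: List[Document], role: str, k: int = 2) -> List[Document]:
    """Return up to k documents visible to `role`, ranked by word overlap."""
    query_tokens = tokenize(query)
    # Access control runs before ranking, so hidden documents never score.
    visible = [d for d in docs if role in d.allowed_roles]
    scored = [(len(query_tokens & tokenize(d.text)), d) for d in visible]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, context: List[Document]) -> str:
    """Assemble the prompt; only retrieved passages reach the model."""
    passages = "\n".join(f"[{d.doc_id}] {d.text}" for d in context)
    return f"Answer using only the context below.\n{passages}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        Document("policy-1", "Refunds are issued within 14 days.",
                 frozenset({"support", "finance"})),
        Document("payroll-7", "Payroll export runs nightly for finance.",
                 frozenset({"finance"})),
    ]
    hits = retrieve("When are refunds issued?", docs, role="support")
    print(build_prompt("When are refunds issued?", hits))
```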

The investment blueprint

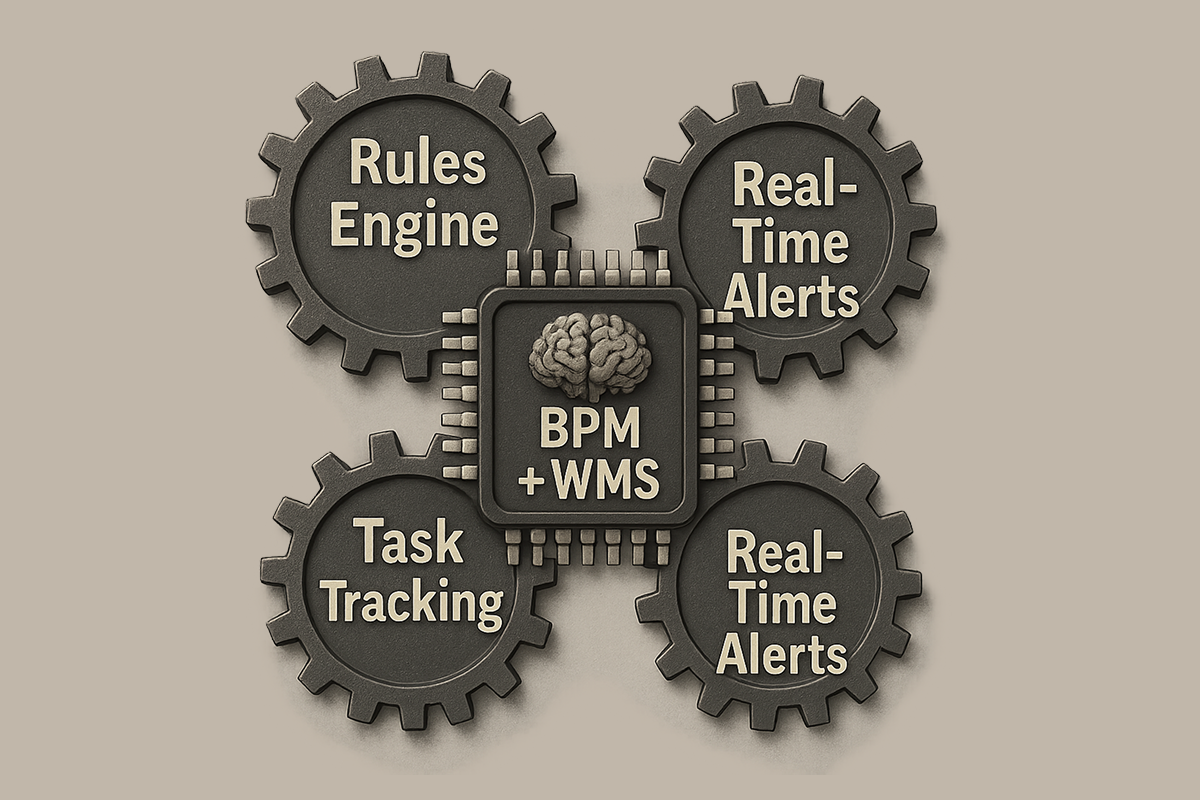

- Identify friction: onboarding churn, translation backlog, legacy refactor headaches. Quantify hours lost.

- Pick a narrow win: documentation sync, type annotation, support agent, analytic chatbot. Target a 4-to-1 ROI within 60 days.

- Wrap guardrails: automated tests, hallucination filters, audit logs.

- Measure relentlessly: cycle time, defects, customer conversions. Then iterate.
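The first two steps of the blueprint reduce to simple arithmetic, sketched below. Two assumptions are worth stating plainly: "4-to-1 ROI" is read here as gross value returned per dollar spent (other definitions subtract the cost first), and all the input figures in the usage example are invented for illustration, not client data.

```python
def roi_ratio(hours_saved_per_month: float, hourly_cost: float,
              months: float, build_cost: float) -> float:
    """Gross value of recovered hours divided by the cost of the pilot."""
    if build_cost <= 0:
        raise ValueError("build_cost must be positive")
    value_recovered = hours_saved_per_month * hourly_cost * months
    return value_recovered / build_cost


def meets_target(ratio: float, target: float = 4.0) -> bool:
    """Compare against the 4-to-1 target named in the blueprint."""
    return ratio >= target


if __name__ == "__main__":
    # Illustrative inputs: 400 hours/month saved at $60/hour over a
    # 60-day (two-month) window, against a $12,000 pilot.
    ratio = roi_ratio(400, 60.0, 2, 12_000.0)
    print(f"{ratio:.1f}-to-1, target met: {meets_target(ratio)}")
```

Running the same calculation before and after the pilot, with measured rather than estimated hours, is what turns the "measure relentlessly" step into a go/no-go decision.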

The window to secure early-mover advantage rarely stays open. Cloud adoption took a decade; LLM adoption is on track to take 3-4 years at most. Customers, shareholders, and regulators will not wait.

Feel the pressure? Good. It means you still have time to act - barely.

Azati has delivered more than 30 production LLM integrations since 2023. If you want the next success story to be yours, fill in the contact-us form and we will show you where the numbers hide in your backlog.