Many enterprises end up in the same place. It starts with a clever prototype that extracts structured data from PDFs and grows into a tangle of AI microservices, batching frameworks, and cloud functions that nobody can run locally. There is no test suite to measure LLM accuracy, and the model hallucinates just enough to erode trust. Operational costs spike, development cycles stretch, and confidence in the large language model pipeline fades.

Azati approaches situations like these with a single rule: make quality measurable before you try to make it better. We replace meetings with an execution loop centered on facts, and that loop starts with a shared contract of truth in a format everyone can see and edit.

In practice, that contract is a tabular ground truth shared by the entire team:

- Client experts supply expected values.

- An evaluation team curates and verifies examples, especially the hard ones.

- The integration team prepares downstream analytics.

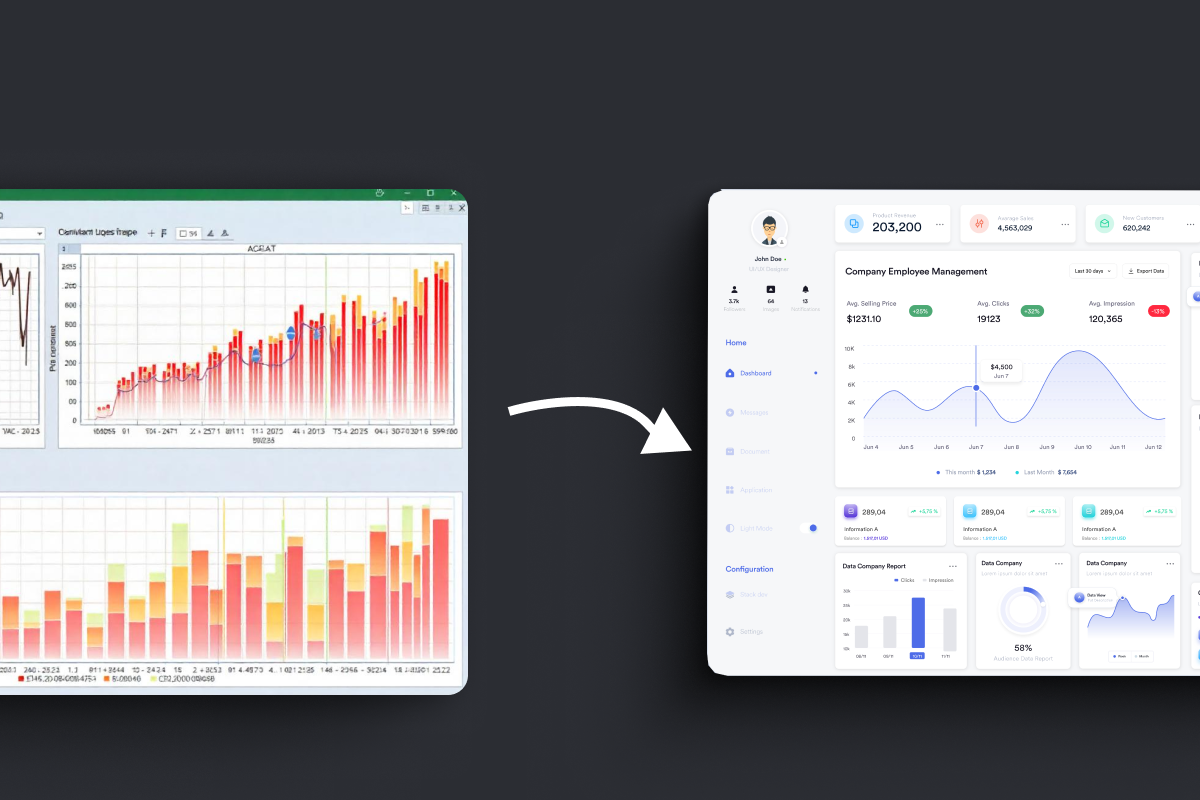

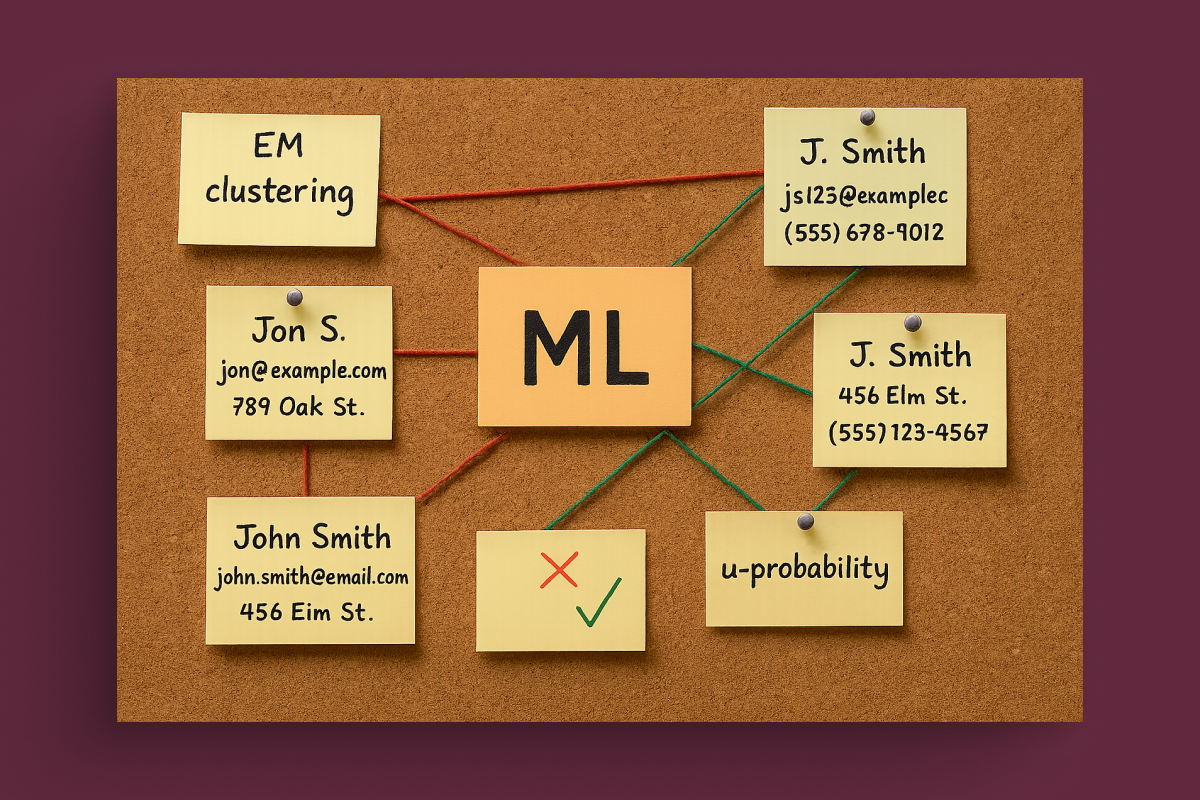

Every model prediction is compared to this truth and rendered into a strategic error map: green squares for correct values, red for mismatches, blue for missing data. The map becomes the planning board.

When everyone can see exactly what is wrong and where, prioritization stops being a matter of opinion and becomes a matter of throughput.
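The comparison behind the error map can be sketched in a few lines of Python. This is a minimal illustration, not Azati's tooling: it assumes ground truth and predictions are dictionaries keyed by document ID and field name, uses a hypothetical case-insensitive normalization rule, and prints the letters G, R, and B in place of green, red, and blue squares. The sample invoices are invented for the example.

```python
from enum import Enum


class Cell(Enum):
    CORRECT = "green"   # prediction matches the ground truth
    MISMATCH = "red"    # prediction present but different
    MISSING = "blue"    # ground truth expects a value, prediction has none


def normalize(value):
    # Hypothetical rule: compare strings case-insensitively, ignoring outer whitespace.
    return value.strip().lower() if isinstance(value, str) else value


def error_map(ground_truth, predictions):
    """Map each (document id, field) pair in the ground truth to a Cell."""
    cells = {}
    for doc_id, expected_row in ground_truth.items():
        predicted_row = predictions.get(doc_id, {})
        for field, expected in expected_row.items():
            predicted = predicted_row.get(field)
            if predicted is None:
                cells[(doc_id, field)] = Cell.MISSING
            elif normalize(predicted) == normalize(expected):
                cells[(doc_id, field)] = Cell.CORRECT
            else:
                cells[(doc_id, field)] = Cell.MISMATCH
    return cells


def accuracy(cells):
    # Share of ground-truth cells the model got right.
    if not cells:
        return 0.0
    return sum(c is Cell.CORRECT for c in cells.values()) / len(cells)


def render(cells, ground_truth):
    # Text stand-in for the colored map: G = green, R = red, B = blue.
    symbol = {Cell.CORRECT: "G", Cell.MISMATCH: "R", Cell.MISSING: "B"}
    return "\n".join(
        f"{doc_id}: " + " ".join(symbol[cells[(doc_id, field)]] for field in row)
        for doc_id, row in ground_truth.items()
    )


# Illustrative data only: two invoices, three fields each.
ground_truth = {
    "doc-1": {"invoice_no": "INV-001", "total": "120.00", "currency": "EUR"},
    "doc-2": {"invoice_no": "INV-002", "total": "75.50", "currency": "USD"},
}
predictions = {
    "doc-1": {"invoice_no": "inv-001", "total": "120.00", "currency": "EUR"},
    "doc-2": {"invoice_no": "INV-002", "total": "57.50"},
}

cells = error_map(ground_truth, predictions)
print(render(cells, ground_truth))
# doc-1: G G G
# doc-2: G R B
print(f"accuracy: {accuracy(cells):.2f}")  # accuracy: 0.67
```

Keeping one cell per document and field is what lets the same structure serve as both a metric and a planning board: every red or blue cell is a concrete, assignable task.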

What Schema-Guided Reasoning Is and Why It Outperforms Structured Output

Schema-Guided Reasoning (SGR) is often confused with structured output. Structured output is the contract; SGR is the way you design that contract so the model can reason reliably inside it.

Azati engineers the schema layout (the order of fields, their grouping, and cascades of intermediate computations) so that by the time the model reaches a specific field, the context immediately preceding it already contains the information needed to fill it correctly.

This is microprompting embedded in the response format: one call becomes dozens of deliberate, low-cognitive-load steps. Field names are meaningful, descriptions are added only where necessary and kept concise, and cycles appear only when they improve clarity.

The result is fewer guesses, more verification, and accuracy gains you can measure on your error map.
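A minimal sketch of what such a layout might look like, written as a JSON Schema in a Python dict. It assumes the structured-output backend generates fields in the order the properties are declared (worth verifying for your provider), and every field name and dependency below is a hypothetical example rather than a production schema. The helper `cascade_violations` checks the core layout rule described above: each field's inputs must appear before the field itself.

```python
# Hypothetical invoice-extraction schema. Properties are declared in the order
# the model should fill them: observations first, intermediate computations
# next, and the final answer fields last.
INVOICE_SCHEMA = {
    "type": "object",
    "properties": {
        "document_kind": {"type": "string", "enum": ["invoice", "credit_note", "other"]},
        "currency_evidence": {"type": "string", "description": "Verbatim text showing the currency."},
        "line_items": {
            "type": "array",  # an array standing in for a simple cycle: one entry per printed line
            "items": {
                "type": "object",
                "properties": {
                    "description": {"type": "string"},
                    "quantity": {"type": "number"},
                    "unit_price": {"type": "number"},
                    "line_total": {"type": "number"},
                },
                "required": ["description", "quantity", "unit_price", "line_total"],
                "additionalProperties": False,
            },
        },
        "computed_total": {"type": "number", "description": "Sum of line_total values."},
        "printed_total": {"type": "number"},
        "totals_match": {"type": "boolean"},
        "currency": {"type": "string"},
        "total": {"type": "number"},
    },
    "additionalProperties": False,
}
INVOICE_SCHEMA["required"] = list(INVOICE_SCHEMA["properties"])

# Which earlier fields each field is meant to draw on (also hypothetical).
DEPENDS_ON = {
    "computed_total": ["line_items"],
    "totals_match": ["computed_total", "printed_total"],
    "currency": ["currency_evidence"],
    "total": ["totals_match", "printed_total", "computed_total"],
}


def cascade_violations(schema, depends_on):
    """Return (field, dependency) pairs where the dependency is declared after the field."""
    order = {name: i for i, name in enumerate(schema["properties"])}
    return [
        (field, dep)
        for field, deps in depends_on.items()
        for dep in deps
        if order[dep] > order[field]
    ]


print(cascade_violations(INVOICE_SCHEMA, DEPENDS_ON))  # []

# Moving the final answer to the top breaks the cascade.
reordered = {"properties": {"total": {}, **INVOICE_SCHEMA["properties"]}}
print(cascade_violations(reordered, DEPENDS_ON))
# [('total', 'totals_match'), ('total', 'printed_total'), ('total', 'computed_total')]
```

The schema is the same contract either way; only the ordering decides whether the model computes `total` after it has seen the line items and the consistency check, or has to guess it up front.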

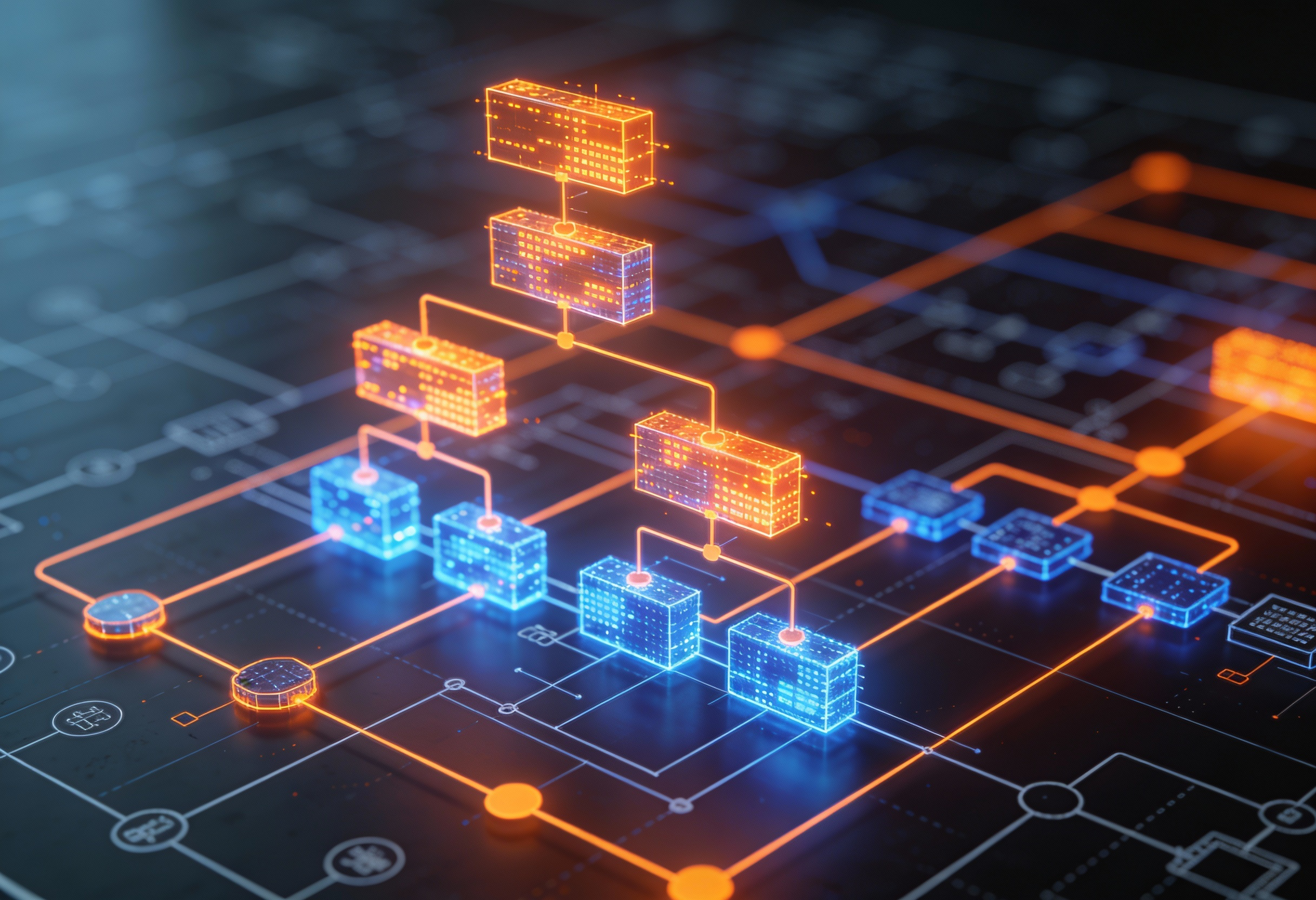

Inside the SGR Two-Prompt Architecture

Under the hood, Azati uses a two-prompt AI architecture that favors control over chaos.

- Prompt 1: Performs SGR analysis of the document, filling a strictly enforced response format with cascades and simple cycles to compute the right abstractions.

- Prompt 2: Acts as a constrained LLM agent that writes the exact function body required to extract or normalize values for the current configuration. It receives the analyzed document and produces executable code.

The pipeline runs the function and validates its outputs. If something fails, it retries with the failure traces and targeted edge cases added to the context.
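The control flow of the two-prompt design can be sketched as a short retry loop. In this minimal illustration, `analyze` stands in for Prompt 1 and `write_extractor` for Prompt 2; both are hypothetical stubs rather than real model calls, and the generated function is assumed to be named `extract`. Exceptions and validation problems are appended to a feedback list that the next attempt receives.

```python
import traceback


def run_pipeline(document, analyze, write_extractor, validate, max_attempts=3):
    """SGR analysis once, then generate, run, and validate code with retries."""
    analysis = analyze(document)  # Prompt 1 (stub): structured analysis
    feedback = []                 # traces and failed checks for the next attempt
    for attempt in range(1, max_attempts + 1):
        source = write_extractor(analysis, feedback)  # Prompt 2 (stub): Python source
        namespace = {}
        try:
            exec(source, namespace)  # defines extract(analysis)
            result = namespace["extract"](analysis)
        except Exception:
            feedback.append(f"attempt {attempt} raised:\n{traceback.format_exc()}")
            continue
        problems = validate(result)
        if not problems:
            return result
        feedback.append(f"attempt {attempt} failed validation: {problems}")
    raise RuntimeError(f"no valid result after {max_attempts} attempts: {feedback}")


# --- Hypothetical stubs so the loop can run without a model ---
def fake_analyze(document):
    return {"printed_total": "1,234.50", "currency_evidence": "EUR"}


def fake_write_extractor(analysis, feedback):
    if not feedback:
        # First draft forgets the thousands separator and will raise ValueError.
        return (
            "def extract(analysis):\n"
            "    return {'total': float(analysis['printed_total']),\n"
            "            'currency': analysis['currency_evidence']}\n"
        )
    # After seeing the trace, the revised draft strips the separator.
    return (
        "def extract(analysis):\n"
        "    raw = analysis['printed_total'].replace(',', '')\n"
        "    return {'total': float(raw), 'currency': analysis['currency_evidence']}\n"
    )


def validate(result):
    problems = []
    if not isinstance(result.get("total"), float) or result["total"] <= 0:
        problems.append("total must be a positive float")
    if result.get("currency") not in {"EUR", "USD"}:
        problems.append("unknown currency")
    return problems


result = run_pipeline("raw document text", fake_analyze, fake_write_extractor, validate)
print(result)  # {'total': 1234.5, 'currency': 'EUR'}
```

The bare `exec` is only acceptable in a toy example like this one; generated code should run in an isolated sandbox with resource limits rather than inside the host process.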

This is not a sprawling agentic system. It is a set of rails that makes AI model behavior observable, improvements measurable, and results dependable.

In practice, the system can generate and cache hundreds of tiny tools automatically; nobody needs to read them, because the only metrics that matter are accuracy, speed, and cost.
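Caching can be as simple as keying each generated tool by its configuration. The sketch below is a hypothetical illustration: `ToolCache`, `fake_generate`, and the `source`/`field` configuration keys are assumptions, and the lambda stands in for a function the code-writing prompt would produce. Because the key is a SHA-256 hash of canonical JSON, configurations that differ only in key order map to the same cached tool.

```python
import hashlib
import json


class ToolCache:
    """Cache generated tools by a canonical hash of their configuration."""

    def __init__(self, generate):
        self._generate = generate  # hypothetical hook that calls the code-writing prompt
        self._tools = {}
        self.misses = 0  # how many times generation was actually triggered

    @staticmethod
    def key(config):
        # sort_keys makes the key independent of dict insertion order.
        canonical = json.dumps(config, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get(self, config):
        k = self.key(config)
        if k not in self._tools:
            self.misses += 1
            self._tools[k] = self._generate(config)
        return self._tools[k]


def fake_generate(config):
    # Stub for the code-writing prompt: returns a tiny normalization tool.
    field = config["field"]
    return lambda row: str(row.get(field, "")).strip().upper()


cache = ToolCache(fake_generate)
tool_a = cache.get({"source": "supplier-x", "field": "currency"})
tool_b = cache.get({"field": "currency", "source": "supplier-x"})  # same config, different key order
print(tool_a is tool_b, cache.misses)  # True 1
print(tool_a({"currency": " eur "}))   # EUR
```

One natural extension, not shown here, is to store each tool's measured accuracy, latency, and cost next to it, so that a tool is kept only while its numbers hold up.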

From LLM Hallucinations to Reliable, Scalable Throughput

In a representative rescue, the starting point was familiar: no evaluations, code nobody could run locally, expensive model usage, and accuracy hovering around the point where stakeholders lose trust.

Once the ground truth and error map went live, the SGR analysis prompt alone delivered a strong accuracy uplift.

Most subsequent gains came from surgical edits to the schema layout, such as reordering fields, renaming them for clarity, and tightening cascades. The code-writing stage became simpler because the analysis had already precomputed what mattered, leaving the generated functions less room to hallucinate and more opportunity to verify.

The outcome was decisive.

On an adversarial benchmark curated by the client, LLM accuracy moved well beyond the required threshold. On the client’s own random checks, the results were near perfect. Throughput shifted from sluggish, multi-day cycles to predictable, parallelizable execution.

Token spend dropped dramatically compared to the previous approach, even as evaluation coverage and confidence increased.

When new document sources arrived, the process handled them gracefully: the error map highlighted precisely which blocks failed, the schema revealed which cascades needed adjustment, and fresh edge cases flowed directly into ground truth.

Why SGR Delivers Measurable Wins in Real-World AI Pipelines

SGR aligns engineering with reality:

- Weak supervision gives you the right data fast by having experts annotate a small but adversarial set of examples.

- Domain-driven design keeps “correct” grounded in business meaning rather than abstract metrics.

- Role separation sustains momentum: the evaluation team’s mandate is to find and formalize failure, adding diverse, difficult examples that turn the map red where it hurts. The SGR team’s mandate is to turn those squares green by improving cascades and tightening schemas.

The strategic error map keeps everyone honest and makes prioritization self-evident.

Best Use Cases for Schema-Guided Reasoning

SGR shines wherever organizations must extract, normalize, and reason over heterogeneous, high-stakes documents: finance and regulatory filings, medical and industrial documentation, technical catalogs, contracts, and go-to-market analytics.

If your current AI document extraction pipeline is a fragile mix of scripts, brittle parsers, and manual checks, SGR replaces it with a measurable system that improves each time new edge cases enter the ground truth.

It also works with compact LLMs when the schema is optimized, showing that the layout of reasoning matters as much as model size.

How Azati Implements SGR to Improve LLM Accuracy

We assess your pipeline and establish a shared ground truth. We implement the strategic error map and the SGR analysis layer first. We then add the tool-generation stage under constrained decoding and integrate its outputs with your analytics stack.

Then we operationalize quality: as new edge cases appear, they flow into the ground truth, the map lights up, and the SGR layer responds. The result is a durable process that consistently earns trust through its numbers.

If your LLM initiative is stuck in expensive loops, hallucinating on the details that matter, or drowning in microservices nobody wants to touch, Azati can help you turn it into a system that delivers measurable wins.

Tell us where it hurts. We will bring the schema, the rails, and the map.