Let’s face it: humans hate routine, mundane tasks. That’s why we try to teach computers to take over as many tedious processes as possible. Data matching (or record linking) is a perfect example of such a task. Even though it poses plenty of challenges for computers, they still do it better than we do, because people get tired and become less attentive to details.

In this article, we cover the main points of record linking: what it is, how it works, what tools are used, and more. If you need data matching (or record linking) services, we handle such projects regularly, so feel free to contact us and we’ll get back to you as soon as possible.

What Is Record Linking?

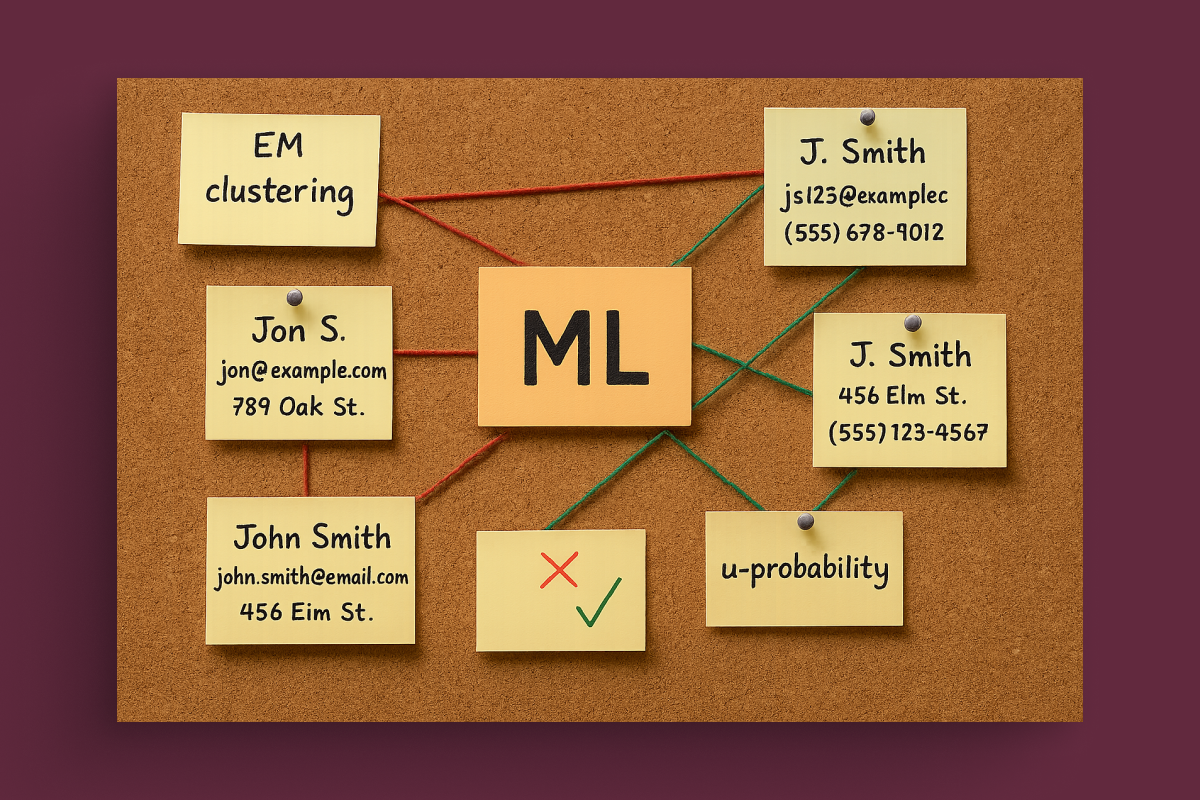

Data matching, or record linking, is the process of finding matching pieces of information in large datasets. The goal can be to find entries that refer to the same subject or to detect duplicates in a database. Although the task might seem simple to a human, computers face quite a few issues with it.

First of all, algorithms depend heavily on data quality. Take a database with people’s names, addresses, and birthdays. If some dates are entered in the US format (month first) and others in the international format (day first), a computer will struggle to compare them: 03/04/1985 could mean March 4 or April 3. Thus, to make sure that algorithms work correctly, we first need to preprocess the data and ensure a basic quality level. Otherwise, the system won’t be able to complete the task or produce accurate results.
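To make the date example concrete, here is a minimal Python sketch that normalizes birth dates before comparison. It assumes we already know which format each source uses; the function name `normalize_dob` and the sample values are hypothetical. A value like 03/04/1985 on its own cannot be disambiguated, which is why this kind of preprocessing has to happen before matching.

```python
from datetime import date, datetime

def normalize_dob(raw: str, fmt: str) -> date:
    """Parse a birth date written in a known source format into a date object."""
    return datetime.strptime(raw.strip(), fmt).date()

# Hypothetical records: one source is month-first (US), the other day-first.
us_record = normalize_dob("03/04/1985", "%m/%d/%Y")    # March 4, 1985
intl_record = normalize_dob("04/03/1985", "%d/%m/%Y")  # also March 4, 1985

# Once both values are normalized, a plain equality check works.
print(us_record == intl_record)  # True
print(us_record.isoformat())     # 1985-03-04
```

Converting everything to ISO 8601 (YYYY-MM-DD) is one common convention; any single canonical format would do.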

Another complexity is that algorithms usually have to work with vast databases. The more records there are, the more comparisons are needed: comparing every record with every other one takes n(n - 1)/2 comparisons, so the workload grows quadratically with the number of records. This also makes the task expensive to run. Sometimes the problem can be mitigated with indexing (also called blocking), which filters out record pairs that cannot possibly match before the detailed comparison. But it’s not always possible to do so.
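The effect of indexing can be sketched in a few lines of Python. This is a minimal illustration, assuming that blocking on an exact postcode is acceptable for the data at hand; the records and the choice of blocking key are hypothetical.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical records: (id, surname, postcode)
records = [
    (1, "Smith", "10115"),
    (2, "Smyth", "10115"),
    (3, "Jones", "20095"),
    (4, "Jonas", "20095"),
    (5, "Brown", "80331"),
]

def all_pairs(recs):
    """Every possible pair: n * (n - 1) / 2 comparisons."""
    return list(combinations(recs, 2))

def blocked_pairs(recs, key):
    """Only pairs whose records share the same blocking key value."""
    blocks = defaultdict(list)
    for rec in recs:
        blocks[key(rec)].append(rec)
    pairs = []
    for block in blocks.values():
        pairs.extend(combinations(block, 2))
    return pairs

full = all_pairs(records)
blocked = blocked_pairs(records, key=lambda r: r[2])  # block on postcode
print(len(full), len(blocked))  # 10 2
```

Here blocking cuts 10 candidate pairs down to 2. The catch is that a true match with a mistyped postcode would never be compared, which is why blocking is not always an option.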

Data Matching Techniques

There are several data linkage methods. They can be divided into two groups: deterministic and probabilistic. Deterministic methods suit data-rich projects that offer many high-quality identifiers, while probabilistic methods estimate the probability that two records refer to the same entity.

The Deterministic Approach

We can apply two strategies here. The first one compares records in a single step, using as many identifiers as the system has available. The result is either positive or negative: a pair of records that agrees on all identifiers is a match, while a pair that disagrees on at least one identifier is a non-match.
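A single-step deterministic rule boils down to an all-or-nothing comparison. The sketch below assumes the identifiers have already been cleaned and normalized; the field names are hypothetical.

```python
# Identifiers used for matching (hypothetical field names).
IDENTIFIERS = ("first_name", "surname", "dob")

def exact_match(a: dict, b: dict, fields=IDENTIFIERS) -> bool:
    """Single-step deterministic rule: a match only if every identifier agrees."""
    return all(a[f] == b[f] for f in fields)

a = {"first_name": "anna", "surname": "kowalski", "dob": "1985-03-04"}
b = {"first_name": "anna", "surname": "kowalski", "dob": "1985-03-04"}
c = {"first_name": "anna", "surname": "kowalsky", "dob": "1985-03-04"}

print(exact_match(a, b))  # True
print(exact_match(a, c))  # False: one disagreeing identifier is enough
```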

The second strategy requires multiple steps. Records are compared in several rounds, and each round uses less restrictive comparison rules than the previous one. A pair of records becomes a match if it agrees on all the identifiers required in at least one of the rounds. This approach takes more time than the first one, but it usually finds more true matches, because a single wrong identifier no longer rules a pair out.
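A multi-step variant can be sketched as a list of increasingly relaxed match keys. The specific rounds below are hypothetical examples, not a recommended configuration.

```python
# Each round uses a less restrictive "match key" (hypothetical fields).
ROUNDS = [
    ("first_name", "surname", "dob"),   # round 1: strictest
    ("surname", "dob"),                 # round 2: ignore first name
    ("first_name", "dob", "postcode"),  # round 3: tolerate a changed surname
]

def stepwise_match(a: dict, b: dict, rounds=ROUNDS):
    """Return the 1-based round in which the pair matched, or None."""
    for step, fields in enumerate(rounds, start=1):
        if all(a[f] == b[f] for f in fields):
            return step
    return None

a = {"first_name": "anna", "surname": "kowalski", "dob": "1985-03-04", "postcode": "10115"}
b = {"first_name": "ana", "surname": "kowalski", "dob": "1985-03-04", "postcode": "10115"}
c = {"first_name": "anna", "surname": "nowak", "dob": "1985-03-04", "postcode": "10115"}

print(stepwise_match(a, b))  # 2
print(stepwise_match(a, c))  # 3
```

In a real pipeline, pairs linked in an earlier round are typically set aside before the next round, and the round number is kept so that looser matches can be reviewed more carefully.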

Still, the deterministic method has its downsides. For instance, it doesn’t take into account that some identifiers are more discriminative than others: agreement on a rare surname says much more than agreement on sex. The probabilistic approach accounts for this and recognizes that two records may still be a match even if they disagree on specific identifiers.

The Probabilistic Approach

The probabilistic approach produces three kinds of results: matches, non-matches, and possible matches. Which group a pair falls into depends on the algorithm’s decision rules and on its linkage score: pairs scoring above an upper threshold are matches, pairs below a lower threshold are non-matches, and pairs in between are possible matches.
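The three-way decision can be expressed as a rule with two thresholds, in the spirit of the Fellegi-Sunter model. The threshold values here are illustrative assumptions; in practice they are tuned for each project, for example by checking samples of pairs manually.

```python
def classify(score: float, lower: float, upper: float) -> str:
    """Three-way decision rule on a linkage score using two thresholds."""
    if lower > upper:
        raise ValueError("lower threshold must not exceed upper threshold")
    if score >= upper:
        return "match"
    if score <= lower:
        return "non-match"
    return "possible match"  # typically sent to manual (clerical) review

# Illustrative thresholds; real values are tuned per project.
for s in (12.0, 3.5, -6.0):
    print(s, classify(s, lower=0.0, upper=8.0))
# 12.0 match
# 3.5 possible match
# -6.0 non-match
```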

To score candidate pairs, probabilistic algorithms such as the Fellegi-Sunter model use m-probabilities and u-probabilities. The u-probability is the probability that two records that don’t match agree on a particular identifier purely by chance; for instance, two different people can share the same birthday. The m-probability is the probability that an identifier agrees when the two records really do belong to the same subject; it is below 1 because of typos, missing values, and outdated data. Together they determine how much weight an agreement carries: a rare surname has a low u-probability, so agreement on it is strong evidence that the records match.
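In the Fellegi-Sunter model, m- and u-probabilities are usually turned into per-field weights using the logarithm of their ratio (base 2 is a common convention). The numbers in the last line are illustrative values for a surname field, not estimates from real data.

```latex
\begin{aligned}
m_i &= P(\text{field } i \text{ agrees} \mid \text{match}), &
u_i &= P(\text{field } i \text{ agrees} \mid \text{non-match}) \\
w_i^{\text{agree}} &= \log_2 \frac{m_i}{u_i}, &
w_i^{\text{disagree}} &= \log_2 \frac{1 - m_i}{1 - u_i} \\
w^{\text{agree}} &= \log_2 \frac{0.95}{0.01} = \log_2 95 \approx 6.57, &
w^{\text{disagree}} &= \log_2 \frac{0.05}{0.99} \approx -4.31
\end{aligned}
```

A more common surname with, say, u = 0.05 would give an agreement weight of only log2(0.95 / 0.05) = log2 19 ≈ 4.25, which is how rarity translates into stronger evidence.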

Thus, every candidate pair is evaluated using the m- and u-probabilities of its identifiers, and the resulting score decides whether it is a match, a possible match, or a non-match. Because m-probabilities allow for typographical errors, a pair that disagrees on one identifier can still score high enough to be linked, so researchers miss fewer true matches.
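Putting the pieces together, a pair’s linkage score can be computed as the sum of the Fellegi-Sunter log-ratio weights of its fields: log2(m/u) when a field agrees and log2((1 - m)/(1 - u)) when it disagrees. This sketch assumes exact string comparison per field and independent fields; the m- and u-values in `PARAMS` are made-up illustrations rather than estimates.

```python
import math

# Illustrative (m, u) pairs per field; real values are estimated from data.
PARAMS = {
    "surname":    (0.95, 0.01),
    "first_name": (0.90, 0.05),
    "dob":        (0.97, 0.003),
}

def field_weight(agrees: bool, m: float, u: float) -> float:
    """Fellegi-Sunter log2 weight for a single field."""
    return math.log2(m / u) if agrees else math.log2((1 - m) / (1 - u))

def linkage_score(a: dict, b: dict, params=PARAMS) -> float:
    """Sum the per-field weights for a record pair (exact comparison per field)."""
    return sum(field_weight(a[f] == b[f], m, u) for f, (m, u) in params.items())

a = {"surname": "kowalski", "first_name": "anna", "dob": "1985-03-04"}
b = {"surname": "kowalski", "first_name": "ana", "dob": "1985-03-04"}  # typo in first name
print(round(linkage_score(a, b), 2))
```

Even with a typo in the first name, the surname and birth date agreements keep the total score well above zero, so the pair can still end up as a match or a possible match, depending on the thresholds.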

The choice of approach depends on the specifics of the situation and the project goals. If there is a lot of high-quality data, deterministic methods are usually the best solution. If there are few direct identifiers and the overall data quality is low, probabilistic methods will do a better job.

However, these guidelines are rather vague, and ultimately researchers have complete freedom in deciding which method to use. Each project has unique features, which means that researchers shouldn’t blindly use the approach that is theoretically sufficient. They should consider all the advantages and disadvantages of the two methods, the specifics of the project, budget, timeline, and other resources to make the right decision.

Existing Software Applications For Record Linking

There is a wide choice of data matching software. Each product has different features and suits particular cases. For example, tools differ in whether they support supervised, unsupervised, or active learning, and whether they offer an API, a GUI, or both. Many tools also offer deduplication, which can be rather useful in many projects.

There are three groups of classification algorithms:

- supervised learning

- unsupervised learning

- active learning

Supervised Learning

Supervised learning applies when training data is available. Such data must provide the true match status for each comparison vector, that is, for each compared pair of records. If you have plenty of labeled data, a logistic regression classifier is a good option. It is one of the oldest supervised learning approaches, yet it remains effective given enough training data. The logistic regression classifier is related to deterministic methods of data matching: each criterion gets a learned weight, and the weighted combination determines whether a pair is a match.
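To show what "each criterion gets a learned weight" means, here is a minimal logistic regression trained with plain batch gradient descent on binary comparison vectors (1 = the field agrees). It is written in pure Python to stay self-contained; in practice you would use a library implementation such as scikit-learn’s `LogisticRegression`. The training pairs and labels are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logreg(X, y, lr=0.5, epochs=2000):
    """Fit logistic regression weights with batch gradient descent.
    X: comparison vectors (1 = field agrees, 0 = disagrees); y: 1 = match, 0 = non-match."""
    n, k = len(X), len(X[0])
    w, b = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this pair drives the gradient of the log loss.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(k):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_proba(x, w, b):
    """Estimated probability that a pair with comparison vector x is a match."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Hypothetical labeled pairs: [surname, first_name, dob] agreement.
X = [[1, 1, 1], [1, 0, 1], [1, 1, 0], [0, 1, 1],
     [0, 0, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
y = [1, 1, 1, 0, 0, 0, 0, 0]

w, b = train_logreg(X, y)
print([round(wj, 2) for wj in w], round(b, 2))
print(round(predict_proba([1, 1, 1], w, b), 3))
```

The learned weights show how much each field contributes; with this toy data, surname agreement ends up carrying the most weight.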

For the probabilistic approach, there is the Naive Bayes classifier. It estimates the probability of an outcome (here, that a pair is a match) given the observed evidence (the comparison results). The prediction assumes that the features are independent of each other given the class, which is why the method is called naive.
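Written out for record linking, with M for a match, U for a non-match, and γ = (γ_1, ..., γ_k) for the comparison vector of a pair, the naive independence assumption gives the posterior below. If γ_i simply records agreement or disagreement, P(γ_i = 1 | M) is the m-probability and P(γ_i = 1 | U) is the u-probability of field i.

```latex
P(M \mid \gamma) =
\frac{P(M) \prod_{i=1}^{k} P(\gamma_i \mid M)}
     {P(M) \prod_{i=1}^{k} P(\gamma_i \mid M) + P(U) \prod_{i=1}^{k} P(\gamma_i \mid U)}
```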

There are three common types of Naive Bayes classifiers. The multinomial one is usually applied to document classification: it evaluates how frequently certain words occur and determines the category a document belongs to. The Bernoulli classifier is similar, except that its features take only “yes” and “no” values; for instance, it checks whether a particular word appears in the text at all. The Gaussian type works with continuous data.
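Because comparison vectors often record a simple agree/disagree outcome per field, the Bernoulli variant is a natural fit for record linking. Below is a minimal from-scratch sketch with Laplace smoothing (scikit-learn offers a ready-made `BernoulliNB`); the training data is hypothetical.

```python
import math

def fit_bernoulli_nb(X, y, alpha=1.0):
    """Estimate class priors and P(feature j agrees | class) with Laplace smoothing.
    Assumes both classes (0 = non-match, 1 = match) appear in y."""
    n_features = len(X[0])
    model = {}
    for c in (0, 1):
        rows = [x for x, label in zip(X, y) if label == c]
        prior = len(rows) / len(X)
        probs = [(sum(r[j] for r in rows) + alpha) / (len(rows) + 2 * alpha)
                 for j in range(n_features)]
        model[c] = (prior, probs)
    return model

def log_posterior(x, prior, probs):
    """Unnormalized log posterior: log P(class) + sum of log P(x_j | class)."""
    return math.log(prior) + sum(
        math.log(p if xj else 1 - p) for xj, p in zip(x, probs))

def predict(x, model):
    """Return the class (0 or 1) with the highest posterior."""
    return max(model, key=lambda c: log_posterior(x, *model[c]))

# Hypothetical labeled comparison vectors: [surname, first_name, dob] agreement.
X = [[1, 1, 1], [1, 0, 1], [1, 1, 0], [0, 1, 1],
     [0, 0, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
y = [1, 1, 1, 0, 0, 0, 0, 0]

model = fit_bernoulli_nb(X, y)
print(predict([1, 1, 1], model), predict([0, 0, 0], model))  # 1 0
```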

Unsupervised Learning

Unsupervised learning is needed when there is no training data. In that case, specialists usually rely on two main methods: K-means clustering and the Expectation-Maximization (EM) algorithm. Unsupervised learning takes more time because these algorithms are iterative.

K-means clustering can be applied to large datasets. Its goal is to group similar data points and reveal the underlying patterns: the algorithm looks for a given number (K) of clusters in the provided data. In record linking, K is usually 2, with one cluster for matches and one for non-matches.
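A bare-bones version of K-means with K = 2, applied to comparison vectors, looks like this. The vectors hold hypothetical string similarity scores between 0 and 1, and starting the two centroids at "all fields agree" and "no field agrees" is our assumption, chosen so that cluster 0 can be read as the match cluster.

```python
def kmeans(points, init, iters=100):
    """Lloyd's algorithm for K-means; K is the number of starting centroids."""
    centroids = [list(c) for c in init]
    k = len(centroids)
    labels = []
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid (squared Euclidean distance).
        labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(pt, centroids[c])))
            for pt in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [pt for pt, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # centroids stopped moving
        centroids = new_centroids
    return labels, centroids

# Hypothetical comparison vectors: similarity scores for [surname, first_name, dob].
pairs = [[0.95, 0.9, 1.0], [0.9, 1.0, 1.0], [1.0, 0.85, 0.9],
         [0.1, 0.2, 0.0], [0.2, 0.0, 0.1], [0.0, 0.3, 0.2], [0.15, 0.1, 0.0]]

labels, centroids = kmeans(pairs, init=[[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]])
print(labels)  # [0, 0, 0, 1, 1, 1, 1]
```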

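We apply the Expectation-Maximization algorithm to find maximum likelihood estimates of a statistical model’s parameters when some of the relevant variables cannot be observed directly. The algorithm introduces latent variables to represent this unobserved data. In record linking, the latent variable is the true match status of each pair, so EM can estimate the m- and u-probabilities without labeled examples.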
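The sketch below applies EM to binary comparison vectors under the Fellegi-Sunter model with independent fields. The starting guesses, the small clamping constant, and the toy data are all assumptions for illustration; real implementations add convergence checks and often extra constraints.

```python
EPS = 1e-6  # keeps probabilities away from exactly 0 or 1

def clamp(v: float) -> float:
    return min(max(v, EPS), 1 - EPS)

def em_fellegi_sunter(vectors, m, u, p, iters=100):
    """EM for binary comparison vectors (1 = field agrees).
    m, u: starting guesses per field; p: starting share of matches.
    Returns estimated m, u, p and each pair's match probability g from the last E-step."""
    m, u = list(m), list(u)
    k, n = len(vectors[0]), len(vectors)
    g = []
    for _ in range(iters):
        # E-step: posterior probability that each pair is a match.
        g = []
        for x in vectors:
            pm, pu = p, 1 - p
            for i in range(k):
                pm *= m[i] if x[i] else 1 - m[i]
                pu *= u[i] if x[i] else 1 - u[i]
            g.append(pm / (pm + pu))
        # M-step: re-estimate parameters from the expected (weighted) counts.
        total_m = sum(g)
        total_u = n - total_m
        m = [clamp(sum(gj * x[i] for gj, x in zip(g, vectors)) / total_m) for i in range(k)]
        u = [clamp(sum((1 - gj) * x[i] for gj, x in zip(g, vectors)) / total_u) for i in range(k)]
        p = clamp(total_m / n)
    return m, u, p, g

# Hypothetical unlabeled comparison vectors for 38 record pairs.
vectors = ([[1, 1, 1]] * 6 + [[1, 1, 0]] * 2 + [[1, 0, 1]] * 2 + [[1, 0, 0]] * 2
           + [[0, 1, 0]] * 3 + [[0, 0, 1]] * 3 + [[0, 0, 0]] * 20)

m_est, u_est, p_est, g = em_fellegi_sunter(vectors, m=[0.9] * 3, u=[0.1] * 3, p=0.1)
print([round(v, 3) for v in m_est], [round(v, 3) for v in u_est], round(p_est, 3))
```

Besides m and u, the function returns `g`, the estimated match probability of every pair, which can then be fed to a thresholding rule.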

Active Learning

The third approach, active learning, differs from the methods discussed above in that the algorithm itself chooses which examples should be labeled. In supervised learning, the user has to pick the examples to label, and identifying which set of samples will be sufficient is a tough task. Since the algorithm takes over this choice, active learning reduces project costs and can speed up the work significantly. It is a relatively new and more advanced approach that is becoming increasingly common.
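One common active-learning strategy is uncertainty sampling: ask a human to label the pairs the current model is least sure about. Tools may use other strategies, so treat this as one illustrative option; `toy_model` is a hypothetical stand-in for a trained classifier.

```python
def most_uncertain(pairs, predict_proba, n=1):
    """Uncertainty sampling: return the n unlabeled pairs whose predicted
    match probability is closest to 0.5, i.e. where the model is least sure."""
    return sorted(pairs, key=lambda pair: abs(predict_proba(pair) - 0.5))[:n]

def toy_model(vector):
    """Hypothetical stand-in for a trained classifier: share of agreeing fields."""
    return sum(vector) / len(vector)

# Hypothetical unlabeled comparison vectors (1 = field agrees).
unlabeled = [(1, 1, 1, 1), (0, 0, 0, 0), (1, 1, 0, 0), (1, 1, 1, 0), (0, 0, 0, 1)]

to_label = most_uncertain(unlabeled, toy_model)
print(to_label)  # [(1, 1, 0, 0)]
```

In a full loop, the selected pairs go to a reviewer, their labels are added to the training set, the model is retrained, and the selection repeats until the model stops improving.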

Conclusion

We live in a world of big data. Every industry, from complex ones like pharmaceuticals and biotech to less sophisticated sectors like e-commerce, has a lot of information to work with. Processing all this data manually is incredibly inefficient: it takes a lot of time, human labor, and, of course, money.

That’s why businesses all over the globe are building data matching services into their workflows. Computers can process information better and faster than humans, especially as the machine learning industry keeps evolving quickly. The technology gets more advanced every day, offering companies better solutions.

Considering that the volume of data will keep growing significantly, businesses that don’t yet use data matching technology should consider trying it. Algorithms help structure all the information and provide business owners with plenty of valuable insights. In the end, the entrepreneur gets another advantage from data matching: the opportunity to spot trends and make data-driven decisions. This greatly reduces the risk of leading the company in the wrong direction.

Contact our experts today to implement intelligent record linking tailored to your business needs. Let machine learning do the heavy lifting: boost accuracy, save time, and unlock smarter decisions now!