Motivation

The customer, a US national leader in healthcare insurance, claims, and patient safety solutions, struggled with inconsistent and incomplete reference data arriving from multiple operational systems. This caused reporting errors, redundant records, and delays in critical decision-making. Their goal was an ETL process that delivers the most complete, accurate, and consistent data, prevents overwriting by less reliable sources, eliminates duplicates, and maintains high performance, so that analytics, reporting, and operational workflows remain reliable as the system scales.

Main Challenges

The ETL process received data from multiple operational systems, some of which provided incomplete or less detailed information. This led to inconsistencies in the data warehouse, causing reporting errors, unreliable analytics, and potential misinformed decisions.

More detailed attribute values from reliable sources were sometimes overwritten by empty or less complete values from other systems, and duplicate or redundant records accumulated. This reduced the overall quality, integrity, and trustworthiness of the data.

The original ETL process had a long runtime, exceeding 30 minutes, which delayed reporting and analytics. Optimizing the process for faster execution without compromising data quality was essential for timely operational insights.

The system needed to easily adapt to new data sources and changing priority rules for attributes. Without a scalable and flexible solution, any modification in data handling could disrupt ETL workflows or require extensive manual intervention.

Our Approach

Solution

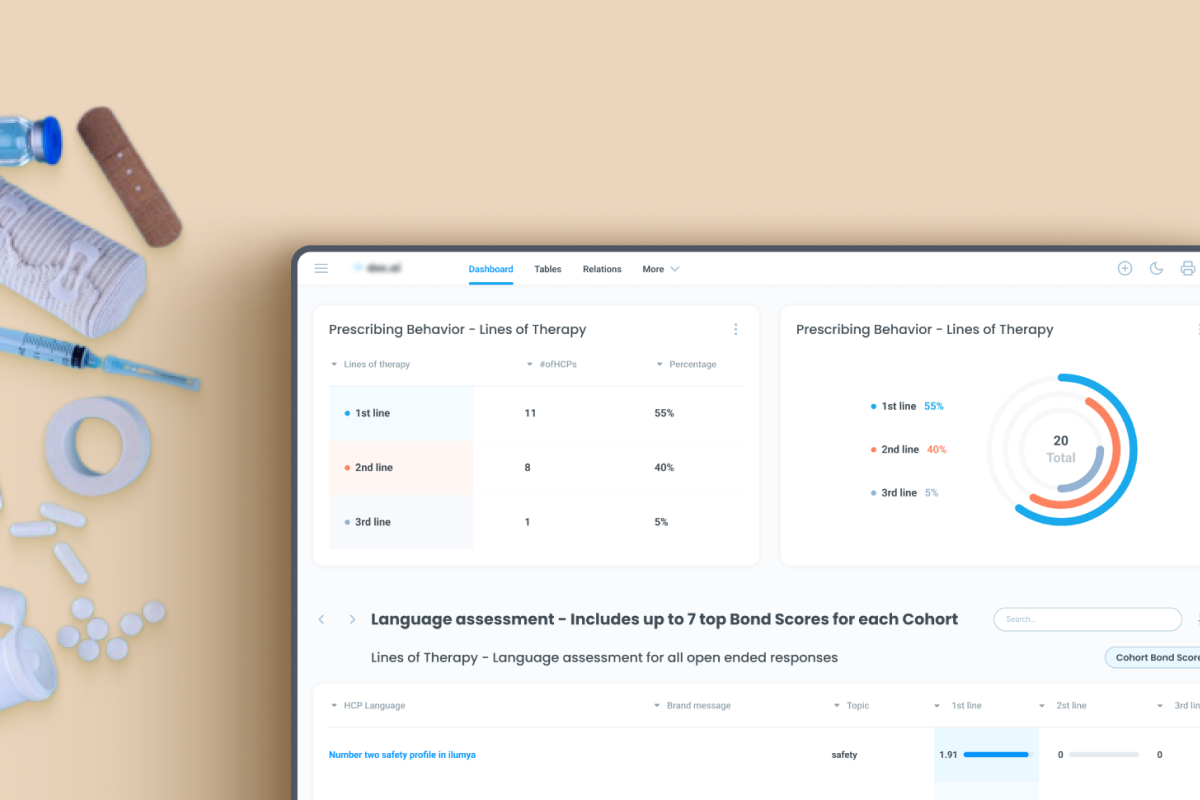

Survivorship Matrix

- Rule-based decision making for attribute retention

- Integration directly into ETL SQL logic

- Supports multi-source data aggregation

- Reduces errors from incomplete or inconsistent data
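The idea behind a survivorship matrix can be illustrated with a minimal Python sketch. It is not the customer's implementation (which, per the list above, lives in the ETL SQL logic); the source names, attribute names, and the `survive` helper are hypothetical and chosen only for illustration. For each attribute, the matrix lists sources from most to least trusted, and the first non-empty value wins.

```python
from typing import Any, Dict, List, Optional

# Hypothetical survivorship matrix: for each attribute, sources are listed
# from most trusted to least trusted. All names here are illustrative.
SURVIVORSHIP_MATRIX: Dict[str, List[str]] = {
    "provider_name": ["claims", "crm", "billing"],
    "phone": ["crm", "claims", "billing"],
}


def is_empty(value: Any) -> bool:
    """Treat None and blank strings as missing values."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def survive(attribute: str, candidates: Dict[str, Any]) -> Optional[Any]:
    """Return the value from the most trusted source that supplies one.

    `candidates` maps a source name to the value that source reported.
    """
    for source in SURVIVORSHIP_MATRIX[attribute]:
        value = candidates.get(source)
        if not is_empty(value):
            return value
    return None  # no source supplied a usable value


# The top-priority source (crm) sent a blank phone, so the next one wins.
phone = survive("phone", {"crm": "", "claims": "555-0100", "billing": "555-0199"})
```

In this sketch an empty value from a high-priority source never displaces a populated value from a lower-priority one, which is the overwriting problem described under Main Challenges.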

Data Deduplication

- Eliminates duplicate and redundant records

- Preserves high-priority, accurate data

- Maintains historical data integrity

- Reduces manual data cleansing efforts
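One way to picture the deduplication step is the Python sketch below. It assumes duplicates share a business key (here a hypothetical `member_id`) and that each row carries a `source` field; the ranking in `SOURCE_RANK` is invented for the example. Rows for the same key are merged into one record, taking each attribute from the most trusted source that actually populated it.

```python
from collections import defaultdict
from typing import Any, Dict, List

# Hypothetical source ranking: a lower number means a more trusted source.
SOURCE_RANK = {"claims": 0, "crm": 1, "billing": 2}


def deduplicate(rows: List[Dict[str, Any]], key: str = "member_id") -> List[Dict[str, Any]]:
    """Collapse rows that share a business key into one merged record."""
    groups: Dict[Any, List[Dict[str, Any]]] = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)

    merged = []
    for key_value, group in groups.items():
        # Visit the most trusted source first so its values are kept.
        group.sort(key=lambda r: SOURCE_RANK[r["source"]])
        golden: Dict[str, Any] = {key: key_value}
        for row in group:
            for attr, value in row.items():
                if attr in (key, "source"):
                    continue
                # Fill an attribute only if it is still missing and the
                # candidate value is non-empty; never overwrite with blanks.
                if value not in (None, "") and attr not in golden:
                    golden[attr] = value
        merged.append(golden)
    return merged


rows = [
    {"member_id": 1, "source": "billing", "name": "A. Smith", "phone": "555-0100"},
    {"member_id": 1, "source": "claims", "name": "Alice Smith", "phone": ""},
    {"member_id": 2, "source": "crm", "name": "Bob Jones", "phone": None},
]
result = deduplicate(rows)
```

Here the two rows for member 1 become a single record: the name comes from the higher-ranked `claims` row, while the phone number survives from `billing` because `claims` left it blank.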

Attribute Prioritization

- Customizable priority rules for each attribute

- Dynamic handling of new data sources

- Supports future scalability

- Prevents loss of critical data
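Configurable priority rules of this kind could be modeled roughly as follows. This is a sketch under stated assumptions, not the delivered design: the `PriorityRules` class, its method names, and the source names are all hypothetical. It keeps one default source order plus per-attribute overrides, so a new source can be registered or a rule changed without touching the merge logic.

```python
from typing import Dict, Iterable, List, Optional


class PriorityRules:
    """Per-attribute source priorities with a shared default order."""

    def __init__(self, default_order: Iterable[str],
                 overrides: Optional[Dict[str, List[str]]] = None) -> None:
        self.default_order = list(default_order)
        self.overrides = {attr: list(order) for attr, order in (overrides or {}).items()}

    def register_source(self, source: str, position: Optional[int] = None) -> None:
        """Add a new source; it gets the lowest priority unless a position is given."""
        if source in self.default_order:
            return
        if position is None:
            self.default_order.append(source)
        else:
            self.default_order.insert(position, source)

    def set_priority(self, attribute: str, order: Iterable[str]) -> None:
        """Override the source order for a single attribute."""
        self.overrides[attribute] = list(order)

    def order_for(self, attribute: str) -> List[str]:
        """Return the effective source order for an attribute.

        Sources named in the override come first; the remaining sources
        follow in their default relative order.
        """
        preferred = self.overrides.get(attribute, [])
        rest = [s for s in self.default_order if s not in preferred]
        return preferred + rest


rules = PriorityRules(["claims", "crm"], overrides={"phone": ["crm"]})
rules.register_source("portal")          # new source, lowest priority by default
rules.set_priority("email", ["portal"])  # but most trusted for email
```

Because an override only needs to name the sources that deviate from the default, adding a source does not require revisiting every attribute rule.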

Performance Optimization

- Reduced ETL runtime from 30+ minutes to under 5 minutes

- Supports high-volume healthcare datasets

- Efficient processing without data loss

- Enables timely reporting and analytics

Business Value

Improved Data Quality: Ensured the most complete, accurate, and reliable data is loaded into the data warehouse for analytics and reporting.

Enhanced Flexibility: Attribute priority rules can be adjusted easily, supporting scalable and adaptable ETL processes.

Optimized Performance: Reduced ETL runtime by over 80%, from more than 30 minutes to under 5 minutes, significantly improving operational efficiency and responsiveness.

Reduced Redundancy: Eliminated duplicate and conflicting records, improving data integrity for downstream applications.