Motivation

The customer, a US leader in patient safety, healthcare insurance, claims, and risk solutions, faced severe performance issues when extracting large volumes of financial data from their general ledger. Inefficient SQL execution, invalid system statistics, and overloaded memory caused extract jobs to run for hours, slowing analytics, reporting, and operational workflows. The objective was to identify the root causes, improve query efficiency, optimize system statistics, and reduce extraction time to support timely decision-making and high system availability.

Main Challenges

Large datasets triggered memory overload, inefficient execution paths, and slow-running queries. As data volume grew, extraction times increased dramatically, impacting downstream analytics and general ledger reconciliation.

System statistics did not reflect actual performance conditions, causing the optimizer to choose suboptimal execution plans and perform unnecessary full table scans instead of using available indexes.

Due to outdated statistics and poor query structure, indexes were not used effectively. This resulted in long-running operations, excessive I/O, and increased CPU consumption during extraction.
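To illustrate why misleading system statistics can push an optimizer toward full table scans, here is a minimal sketch of a toy cost model. It is a deliberate simplification, not the actual optimizer formula of any database, and every name and number in it (`SystemStats`, the block counts, the read timings) is an assumption made up for the example.

```python
from dataclasses import dataclass


@dataclass
class SystemStats:
    """Hypothetical I/O timings the optimizer believes to be true."""
    single_block_read_ms: float   # time for one single-block read
    multi_block_read_ms: float    # time for one multi-block read
    blocks_per_multi_read: int    # blocks fetched by one multi-block read


def full_scan_cost(table_blocks: int, stats: SystemStats) -> float:
    """Cost of reading the whole table, in single-block-read equivalents."""
    multi_reads = table_blocks / stats.blocks_per_multi_read
    return multi_reads * stats.multi_block_read_ms / stats.single_block_read_ms


def index_access_cost(index_depth: int, matching_rows: int) -> float:
    """Pessimistic index cost: one read per index level plus one per row."""
    return index_depth + matching_rows


def choose_plan(table_blocks: int, index_depth: int, matching_rows: int,
                stats: SystemStats) -> str:
    """Pick whichever access path the toy model considers cheaper."""
    full = full_scan_cost(table_blocks, stats)
    index = index_access_cost(index_depth, matching_rows)
    return "INDEX" if index < full else "FULL SCAN"


# Statistics that match real hardware behaviour (assumed values).
realistic = SystemStats(single_block_read_ms=5.0,
                        multi_block_read_ms=20.0,
                        blocks_per_multi_read=16)

# Invalid statistics: multi-block reads appear faster than single-block reads.
invalid = SystemStats(single_block_read_ms=10.0,
                      multi_block_read_ms=2.0,
                      blocks_per_multi_read=128)

if __name__ == "__main__":
    for label, stats in (("realistic", realistic), ("invalid", invalid)):
        plan = choose_plan(table_blocks=1_000_000, index_depth=3,
                           matching_rows=20_000, stats=stats)
        print(f"{label} statistics -> {plan}")
```

With the same table and the same query, the model picks the index under the realistic timings and the full scan under the invalid ones. Only the statistics changed, which is the pattern described above.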

The database served both transactional (OLTP) and batch (DSS) processing, requiring a flexible statistics collection strategy to maintain optimal performance under varying load patterns.

Our Approach

Solution

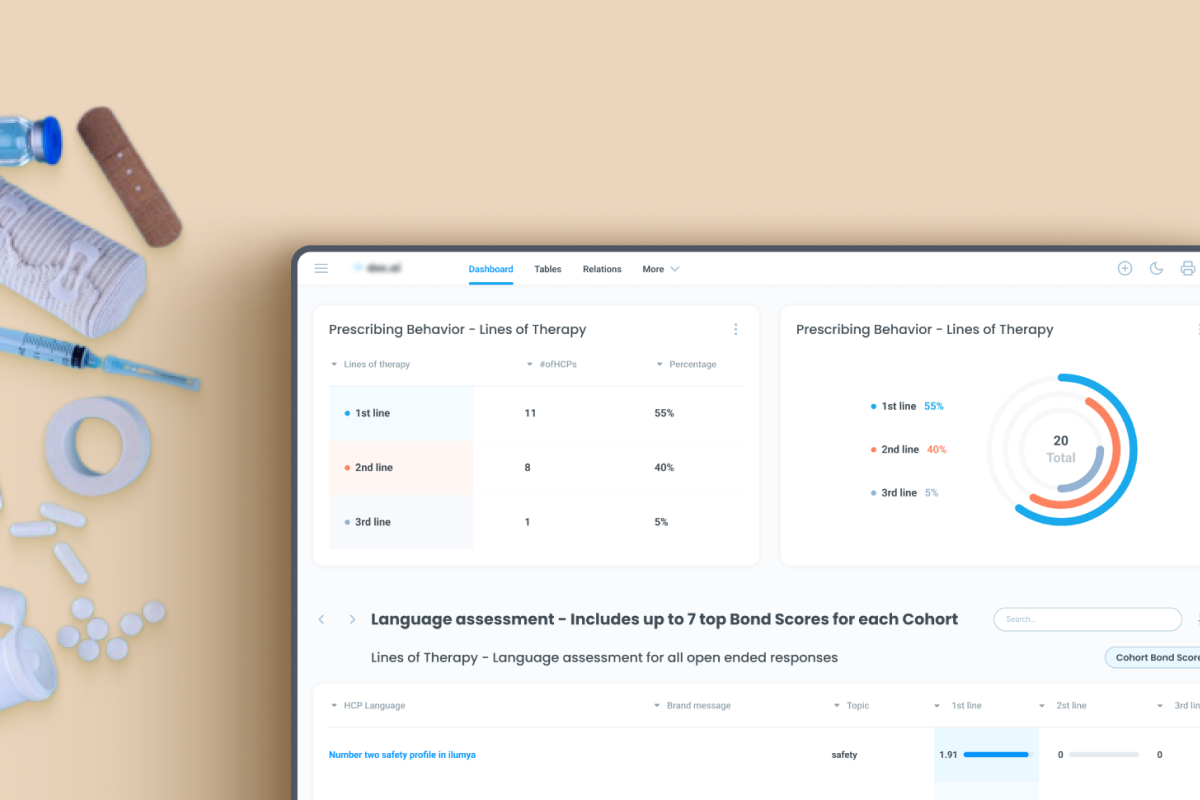

Performance Monitoring & Diagnostics

- Continuous performance tracking

- Detection of inefficient execution plans

- Identification of memory and I/O bottlenecks

- SQL Trace-based diagnostics

Root Cause Analysis Engine

- Deep analysis of query behavior

- Identification of invalid or outdated system statistics

- Detection of suboptimal index usage

- Automated generation of tuning recommendations

Statistics Optimization Framework

- Time-based statistics collection (day, night, 24-hour)

- Balancing OLTP vs DSS workloads

- Index utilization improvement

- Publishing optimized statistics for query performance
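The time-based approach above could be sketched roughly as follows. This is a hedged illustration, not the customer's actual implementation: the profile names, the window boundaries, and the statistics table name are hypothetical, and the generated statement assumes an Oracle database, where `DBMS_STATS.IMPORT_SYSTEM_STATS` loads a previously saved statistics set from a user statistics table.

```python
from datetime import time

# Hypothetical window boundaries: OLTP is assumed to dominate during the day,
# batch (DSS) extracts at night.
DAY_START = time(7, 0)
NIGHT_START = time(19, 0)


def pick_profile(now: time, mixed_workload: bool = False) -> str:
    """Return the name of the saved statistics set to publish."""
    if mixed_workload:
        # No clear day/night split: fall back to the 24-hour average.
        return "STATS_24H"
    if DAY_START <= now < NIGHT_START:
        return "STATS_DAY"
    return "STATS_NIGHT"


def import_statement(profile: str,
                     stat_table: str = "SYSTEM_STATS_HISTORY") -> str:
    """Build the PL/SQL block that would publish the chosen set.

    Assumes Oracle; stat_table is a hypothetical user statistics table.
    """
    return (
        "BEGIN DBMS_STATS.IMPORT_SYSTEM_STATS("
        f"stattab => '{stat_table}', statid => '{profile}'); END;"
    )


if __name__ == "__main__":
    for moment in (time(10, 0), time(23, 30)):
        profile = pick_profile(moment)
        print(moment, "->", import_statement(profile))
```

Keeping the selection logic separate from the statement builder makes it easy to change the windows without touching the database call.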

Query & System Performance Tuning

- Reduction of extract runtime from 3 hours to 10-15 minutes

- Improved memory utilization

- Elimination of redundant full table scans

- Acceleration of high-volume data processing
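For a sense of scale, the runtime figures above translate into a speedup factor and a percentage reduction. The short calculation below uses only the reported 3-hour and 10-15 minute values; the helper names are our own.

```python
def speedup(before_min: float, after_min: float) -> float:
    """How many times faster the job runs after tuning."""
    return before_min / after_min


def reduction_pct(before_min: float, after_min: float) -> float:
    """Share of the original runtime that was eliminated, in percent."""
    return (before_min - after_min) / before_min * 100


BEFORE_MIN = 3 * 60  # reported original extract runtime: 3 hours

if __name__ == "__main__":
    for after_min in (10, 15):  # reported runtime range after tuning
        print(f"{after_min} min: {speedup(BEFORE_MIN, after_min):.0f}x faster, "
              f"{reduction_pct(BEFORE_MIN, after_min):.1f}% less runtime")
```

In other words, the extract runs roughly 12 to 18 times faster, which is a runtime reduction of about 92 to 94 percent.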

Business Value

Significant Performance Boost: Extract process runtime reduced from 3 hours to just 10-15 minutes, accelerating financial reporting and operational workflows.

Reduced I/O Load: Total direct path read events decreased by 50%, lowering system stress and improving stability.

Higher System Efficiency: Optimized statistics and query execution increased throughput and reduced resource usage across the system.