Motivation

The goal was to remove manual decision bottlenecks, inconsistent underwriting outcomes, and time-consuming processing by leveraging historical data and machine learning. The result is faster, more accurate, and more consistent decisions on policy applications, with underwriters freed to focus on complex or high-value cases.

Main Challenges

Manual decision-making caused delays and bottlenecks in the underwriting process, preventing efficient handling of policy applications. Azati addressed this by automating the decision-making process, reducing the need for manual intervention and speeding up policy application processing.

The underwriting process was time-consuming due to repetitive tasks, making it difficult to quickly evaluate and process applications. Azati developed an automated system that analyzes policy applications and provides recommendations, significantly reducing processing time.

Human factors led to inconsistent outcomes, lowering the quality of underwriting decisions and increasing the risk of errors. Azati addressed this by using machine learning to analyze historical data and provide data-driven decision recommendations, improving consistency and accuracy.

Historical data was underused, which limited the ability to make data-driven decisions and improve the accuracy of policy assessments. Azati used historical underwriting data to train the machine learning model, adding predictive insights from past applications to the decision-making process.

Our Approach

Solution

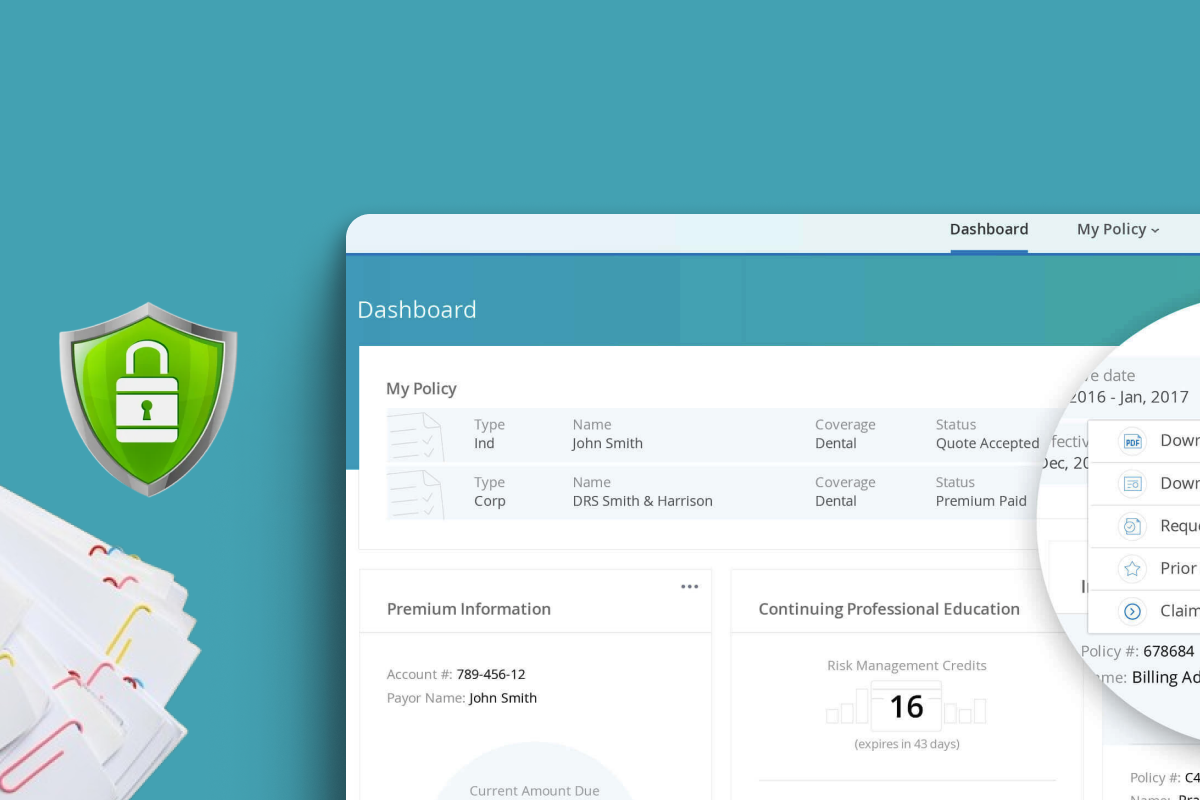

Automated Decision Engine

- Automatic classification of applications into green, yellow, and orange zones

- Prediction of approval or decline probability

- High recall for declined applications to minimize risk

- Stratified 10-fold cross-validation for model validation
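
The validation and recall points above can be illustrated with a small, dependency-free sketch. It is not Azati's code. It assumes labels are encoded as 0 (approve) and 1 (decline), deals each class round-robin into ten folds so every fold keeps the overall decline ratio, and measures recall on the decline class, the metric the engine prioritizes. The function names are hypothetical. A production pipeline would more likely rely on a library such as scikit-learn's `StratifiedKFold` and `recall_score`.

```python
import random
from collections import defaultdict


def stratified_kfold_indices(labels, n_splits=10, seed=0):
    """Yield (train_idx, test_idx) pairs; each test fold keeps roughly the
    same approve/decline ratio as the full dataset."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds = [[] for _ in range(n_splits)]
    offset = 0
    for idxs in by_class.values():
        rng.shuffle(idxs)
        # Deal each class round-robin, continuing where the previous class
        # stopped, so every fold receives its share of each class.
        for pos, i in enumerate(idxs):
            folds[(offset + pos) % n_splits].append(i)
        offset += len(idxs)
    for k in range(n_splits):
        test = sorted(folds[k])
        train = sorted(i for j, fold in enumerate(folds) if j != k for i in fold)
        yield train, test


def decline_recall(y_true, y_pred, decline=1):
    """Fraction of truly declined applications that the model also predicts as declined."""
    flagged = [p == decline for t, p in zip(y_true, y_pred) if t == decline]
    if not flagged:
        raise ValueError("no declined applications in y_true")
    return sum(flagged) / len(flagged)
```

With 90 approvals and 10 declines, for example, each of the ten test folds receives nine approvals and exactly one decline, so no fold is evaluated without any declined applications.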

Historical Data Integration

- Training dataset with over 140k applications and 1000+ features

- Recursive feature elimination to optimize model performance

- Integration of prior policy outcomes and claims data

- Enhanced predictive scoring for new applications
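
Recursive feature elimination repeatedly fits a model, discards the least important feature, and refits on what remains. The toy version below is a sketch under stated assumptions: a plain-Python logistic regression stands in for whatever model the project actually used, the absolute weight serves as the importance score (which is only meaningful when features are standardized), and one feature is dropped per round. The function names are hypothetical. With 1000+ features, a library implementation such as scikit-learn's `RFE` or `RFECV`, which can drop several features per step, would be the practical choice.

```python
import math


def fit_logistic(X, y, epochs=500, lr=0.1):
    """Batch gradient descent for logistic regression (no intercept, for brevity)."""
    n_features, n_rows = len(X[0]), len(X)
    w = [0.0] * n_features
    for _ in range(epochs):
        grad = [0.0] * n_features
        for row, target in zip(X, y):
            z = sum(wi * xi for wi, xi in zip(w, row))
            z = max(-30.0, min(30.0, z))  # clamp so math.exp cannot overflow
            p = 1.0 / (1.0 + math.exp(-z))
            for j, xj in enumerate(row):
                grad[j] += (p - target) * xj
        w = [wi - lr * g / n_rows for wi, g in zip(w, grad)]
    return w


def recursive_feature_elimination(X, y, n_keep):
    """Refit on the surviving columns, drop the one with the smallest |weight|,
    and repeat until n_keep columns remain. Returns the surviving column indices."""
    remaining = list(range(len(X[0])))
    while len(remaining) > n_keep:
        subset = [[row[j] for j in remaining] for row in X]
        weights = fit_logistic(subset, y)
        weakest = min(range(len(remaining)), key=lambda k: abs(weights[k]))
        remaining.pop(weakest)
    return remaining
```

Refitting after every removal matters because a feature's importance can change once correlated features are gone, which is what distinguishes RFE from ranking features once and cutting the list.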

Configurable Decision Thresholds

- Green zone for auto-approval

- Yellow zone for manual review

- Orange zone for applications with a high probability of decline

- Thresholds adjustable by line of business or risk tolerance
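
A minimal sketch of threshold-based routing, assuming the model outputs a probability of decline for each application. The zone names follow the description above. The `ZoneThresholds` class, the line-of-business keys, and the cut-off values are hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ZoneThresholds:
    """Cut-offs on the predicted decline probability (hypothetical values)."""
    green_max: float   # at or below this: auto-approve
    yellow_max: float  # above green_max and at or below this: manual review

    def __post_init__(self):
        if not 0.0 <= self.green_max <= self.yellow_max <= 1.0:
            raise ValueError("expected 0 <= green_max <= yellow_max <= 1")


# Hypothetical per-line-of-business settings: a stricter line keeps a narrower green zone.
THRESHOLDS = {
    "term_life": ZoneThresholds(green_max=0.10, yellow_max=0.40),
    "health": ZoneThresholds(green_max=0.05, yellow_max=0.30),
}


def assign_zone(p_decline: float, line_of_business: str) -> str:
    t = THRESHOLDS[line_of_business]
    if p_decline <= t.green_max:
        return "green"   # auto-approval
    if p_decline <= t.yellow_max:
        return "yellow"  # manual review
    return "orange"      # high probability of decline
```

Keeping the cut-offs in configuration rather than in the model lets the business widen the green zone to raise straight-through volume, or narrow it to accept less risk, without retraining.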

Statistical Performance Analysis

- Panel showing historical accuracy of similar decisions

- Measurement of algorithm performance using ROC AUC

- Supports continuous improvement of predictive models

- Facilitates informed operational adjustments
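
ROC AUC can be read as the probability that a randomly chosen declined application receives a higher decline score than a randomly chosen approved one, with ties counting as half. The sketch below computes it directly from that definition. It assumes label 1 marks a decline and compares every pair of one declined and one approved application, so it runs in O(n·m) time and suits illustration only. scikit-learn's `roc_auc_score` computes the same quantity efficiently.

```python
def roc_auc(y_true, scores, positive=1):
    """Pairwise (Mann-Whitney) ROC AUC: share of positive/negative pairs
    in which the positive example scores higher; ties count as 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == positive]
    neg = [s for y, s in zip(y_true, scores) if y != positive]
    if not pos or not neg:
        raise ValueError("ROC AUC needs at least one positive and one negative example")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

For labels `[0, 0, 1, 1]` and scores `[0.1, 0.4, 0.35, 0.8]`, three of the four declined/approved pairs are ordered correctly, giving an AUC of 0.75.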

Hybrid API and Architecture

- Flexible data access and integration

- Scalable microservices architecture

- Support for high-volume application processing

- Seamless interaction with internal insurance systems

Business Value

Higher Throughput: Straight-through processing increased by 45%, allowing faster application approvals without human intervention.

Improved Underwriter Efficiency: Underwriters can focus on high-value, complex cases instead of spending time on routine applications.

Increased Processing Capacity: The system processed 2.5 times as many applications in the same period.

Consistent and Data-Driven Decisions: Machine learning-based recommendations reduce errors and variability in underwriting.

Flexible Risk Management: Configurable thresholds allow the insurance company to balance volume and risk according to business needs.