Every executive implementing generative AI faces the same paradox: move fast enough to capture competitive advantage, but not so fast that you create catastrophic risk exposure. The tension between speed and control isn't just philosophical; it's operational, immediate, and increasingly consequential.

According to Cisco's 2026 Data and Privacy Benchmark Study, 93% of organizations are planning further governance investments to keep pace with AI complexity and stakeholder expectations. Yet the same research reveals that governance practices are still evolving, with many organizations struggling to establish frameworks that enable innovation rather than stifle it.

This article offers a practical framework for generative AI governance that balances these competing demands, so executives can accelerate strategic initiatives while managing risk appropriately.

Why Traditional Governance Models Fail for Generative AI

Most organizations default to governance approaches designed for previous technology waves. These frameworks assume stable systems, predictable outputs, and clear boundaries between development and deployment. Generative AI breaks all these assumptions.

The Unique Governance Challenges of GenAI

| Traditional IT Systems | Generative AI Systems | Governance Implication |
|---|---|---|
| Deterministic outputs | Probabilistic, variable outputs | Cannot pre-approve all responses |
| Clear development/production boundary | Continuous learning and adaptation | Ongoing monitoring required |
| Defined capability scope | Emergent capabilities and behaviors | Risk assessments must be dynamic |
| Limited data processing | Sensitive data processed at scale | Privacy exposure grows with data volume |
| Vendor accountability clear | Shared responsibility unclear | Accountability requires explicit definition |

The failure mode isn't usually dramatic policy violations. It's paralysis. Organizations implement governance so restrictive that strategic GenAI initiatives stall, while competitors with more sophisticated generative AI risk management approaches capture market advantage.

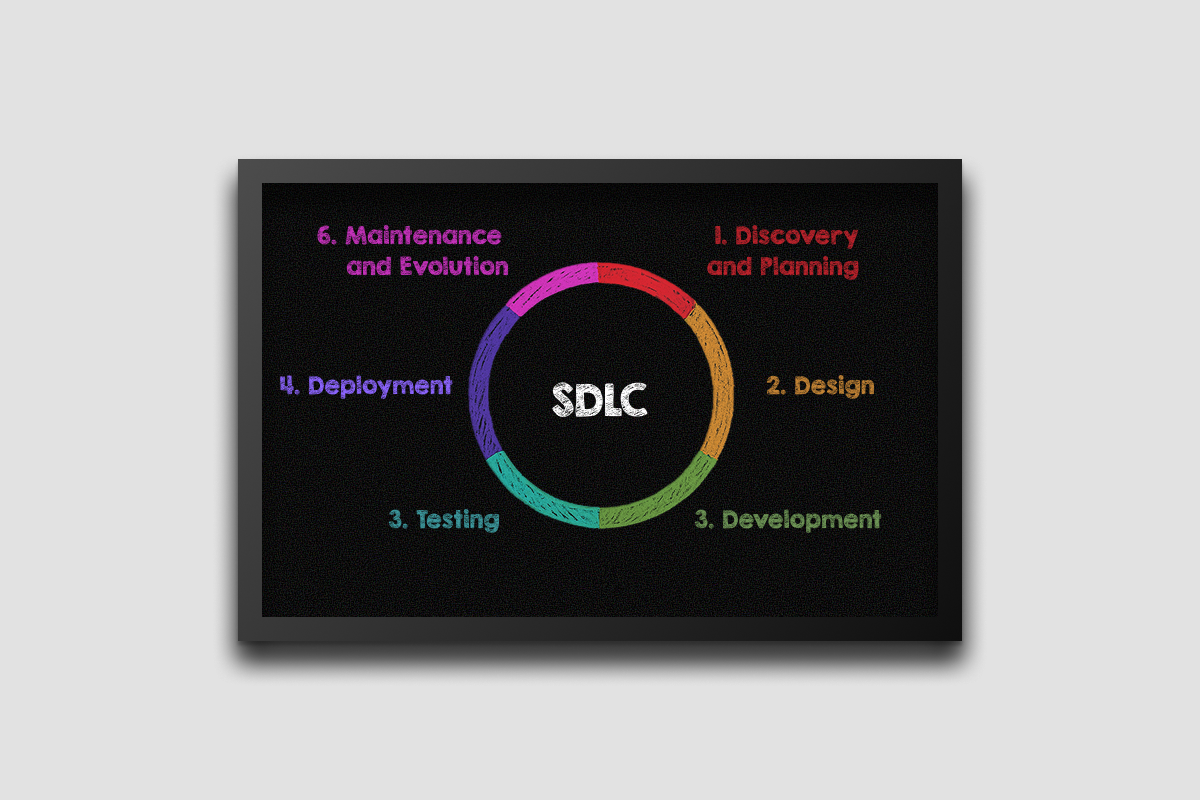

The Three-Layer Enterprise AI Governance Framework

Designing an effective enterprise AI governance framework requires thinking in layers, each with different objectives, stakeholders, and decision rights. This approach, refined through Azati's work with hundreds of regulated enterprises, enables both speed and control.

Strategic Layer

- Purpose: Set direction and boundaries;

- Stakeholders: C-suite, board, legal;

- Decisions: Risk appetite, compliance requirements, ethical principles;

- Review Cadence: Quarterly.

Operational Layer

- Purpose: Enable rapid, safe implementation;

- Stakeholders: AI teams, product, compliance;

- Decisions: Tool approvals, data usage, deployment gates;

- Review Cadence: Weekly/monthly.

Technical Layer

- Purpose: Implement controls and monitoring;

- Stakeholders: Engineers, data scientists, security;

- Decisions: Model evaluation, testing protocols, incident response;

- Review Cadence: Continuous.

This layered approach creates clear separation between strategic governance decisions (which should move slowly and deliberately) and operational/technical decisions (which must move quickly to enable innovation).

The Risk-Based Governance Model: Matching Controls to Consequences

Perhaps the most important insight in modern generative AI governance is that not all AI applications require identical oversight. A customer service chatbot and an automated loan approval system present fundamentally different risk profiles and demand different governance approaches.

Four-Tier Risk Classification Framework

Critical Risk

- Examples: Automated decisions affecting legal rights, safety-critical systems, regulated compliance functions.

- Governance: Board-level approval, continuous human oversight, comprehensive audit trails, regular third-party validation.

High Risk

- Examples: Customer-facing decisions, employee management, financial recommendations, sensitive data processing.

- Governance: Executive approval, human-in-the-loop for edge cases, quarterly reviews, bias testing.

Moderate Risk

- Examples: Internal productivity tools, content generation, data analysis, customer support assistance.

- Governance: Department-level approval, spot-check monitoring, basic output validation, incident reporting.

Low Risk

- Examples: Code completion, document summarization, meeting transcription, idea generation.

- Governance: Self-service with usage guidelines, automated monitoring, lightweight review processes.

This risk-tiered approach, aligned with emerging regulatory frameworks like the EU AI Act, enables organizations to move quickly on low-risk applications while maintaining appropriate oversight for high-stakes deployments.

Key Principle: Proportional Governance

- Match governance intensity to actual risk, not perceived complexity;

- Low-risk applications should have streamlined approval processes;

- High-risk applications demand comprehensive oversight regardless of development speed;

- Risk classification must consider: potential harm, regulatory requirements, data sensitivity, automation level, and reversibility of decisions.
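
The classification factors listed above can be turned into a repeatable scoring rule. What follows is a minimal sketch, not a prescribed method: the `UseCase` fields, the 0 to 3 scoring scale, and the "worst factor decides the tier" rule are all assumptions made for illustration, and a real program would calibrate them with legal and compliance input.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class UseCase:
    """Hypothetical use-case record; each factor is scored 0 (none) to 3 (severe)."""
    name: str
    potential_harm: int
    regulatory_exposure: int
    data_sensitivity: int
    automation_level: int
    irreversibility: int


def classify(use_case: UseCase) -> Tier:
    """Assign a tier from the single worst-scoring factor (an illustrative rule)."""
    scores = [
        use_case.potential_harm,
        use_case.regulatory_exposure,
        use_case.data_sensitivity,
        use_case.automation_level,
        use_case.irreversibility,
    ]
    if any(not 0 <= score <= 3 for score in scores):
        raise ValueError("factor scores must be between 0 and 3")
    # A score of 0 maps to LOW and a score of 3 maps to CRITICAL.
    return Tier(max(scores) + 1)


# Illustrative scores only; real scoring would come from a documented assessment.
print(classify(UseCase("code completion", 0, 0, 0, 0, 0)).name)  # LOW
print(classify(UseCase("loan approval", 3, 3, 3, 3, 2)).name)    # CRITICAL
```

Taking the maximum rather than an average reflects the proportional-governance principle: one severe factor, such as an irreversible decision, is enough to warrant the stricter tier even when every other factor looks benign.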

Human-in-the-Loop Governance: When and How

One of the most debated aspects of AI governance for executives is determining where human oversight is required. The concept of human-in-the-loop governance sounds straightforward, but implementation reveals complex trade-offs.

The Three Models of Human-AI Decision-Making

| Model | AI Role | Human Role | Appropriate For |
|---|---|---|---|
| Human-in-the-Loop | Recommends action | Reviews and approves every decision | High-stakes individual decisions (loans, hiring, medical) |
| Human-on-the-Loop | Makes decisions autonomously | Monitors for anomalies, can intervene | High-volume routine decisions with exception handling |
| Human-out-of-the-Loop | Operates autonomously | Periodic review of aggregate outcomes | Low-stakes productivity applications |

Best Practices for Effective Human Oversight:

- Provide context, not just recommendations: Show AI reasoning, confidence levels, and alternative options;

- Design for disagreement: Make it easy for humans to override AI without additional effort;

- Monitor override patterns: Track when humans disagree with AI to identify model limitations;

- Rotate reviewers: Prevent individual automation bias from becoming systematic;

- Audit the auditors: Periodically verify that human oversight maintains quality.
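
Monitoring override patterns, as recommended in the list above, can start with very simple bookkeeping. The sketch below assumes a hypothetical `Review` record and an arbitrary 2% minimum override rate; both are illustrative choices, not established benchmarks. A reviewer who almost never disagrees with the AI may be rubber-stamping, which is one visible symptom of automation bias.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Review:
    """Hypothetical record of one human review of an AI recommendation."""
    reviewer: str
    ai_recommendation: str
    human_decision: str


def override_rates(reviews: list[Review]) -> dict[str, float]:
    """Share of each reviewer's decisions that differed from the AI recommendation."""
    totals = Counter(r.reviewer for r in reviews)
    overrides = Counter(
        r.reviewer for r in reviews if r.human_decision != r.ai_recommendation
    )
    return {reviewer: overrides[reviewer] / totals[reviewer] for reviewer in totals}


def flag_rubber_stamping(rates: dict[str, float], min_rate: float = 0.02) -> list[str]:
    """Reviewers whose override rate is below min_rate (an assumed threshold)."""
    return sorted(reviewer for reviewer, rate in rates.items() if rate < min_rate)
```

A low override rate is only a prompt for a closer look. It can also mean the model is performing well on that reviewer's cases, so flagged reviewers should be sampled and audited rather than assumed to be at fault.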

Managing Generative AI Risk: The Five Critical Domains

Managing generative AI risk comprehensively requires systematic attention across five interconnected domains. Organizations that address only one or two typically discover their governance gaps through costly incidents.

1. Data Privacy and Security Risk

GenAI systems process vast amounts of data, often including sensitive information. According to World Economic Forum guidance, privacy protection must be built into system design, not added afterwards.

Key Controls:

- Data classification system defining what information can be processed by which AI systems;

- Encryption and access controls for training data and model inputs/outputs;

- Clear policies on sending proprietary data to commercial AI APIs;

- Regular audits of data handling practices and vendor compliance.
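
A data classification policy becomes enforceable once it is expressed as a check that runs before any data leaves for an AI system. The following is a minimal sketch under stated assumptions: the four classification levels, the system names in `MAX_ALLOWED`, and the ceilings assigned to them are hypothetical placeholders for an organization's own scheme.

```python
from enum import IntEnum


class DataClass(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical registry: the highest data classification each AI system may receive.
MAX_ALLOWED = {
    "public-llm-api": DataClass.PUBLIC,
    "enterprise-llm-tenant": DataClass.CONFIDENTIAL,
    "on-prem-model": DataClass.RESTRICTED,
}


def may_send(system: str, data_class: DataClass) -> bool:
    """Allow a request only if the system is registered and the data is within its ceiling."""
    ceiling = MAX_ALLOWED.get(system)
    # Deny by default: unregistered systems receive no data at all.
    return ceiling is not None and data_class <= ceiling
```

The deny-by-default behavior is the important design choice here: a newly adopted tool is blocked until someone deliberately registers it and assigns it a ceiling.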

2. Model Performance and Reliability Risk

GenAI outputs are probabilistic, not deterministic. Systems can fail in subtle ways that traditional testing doesn't catch.

Key Controls:

- Continuous monitoring of output quality, not just pre-deployment testing;

- Drift detection to identify when model performance degrades;

- Adversarial testing to find failure modes before users do;

- Fallback mechanisms when AI confidence drops below thresholds.
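
Two of the controls above, confidence-based fallback and drift detection, can be sketched in a few lines. This example assumes that a usable confidence score and a per-response quality score already exist, which is itself a significant assumption because many generative models do not expose a calibrated confidence value. The function names, the 0.8 threshold, and the 0.05 tolerance are illustrative.

```python
from statistics import mean


def route(answer: str, confidence: float, threshold: float = 0.8) -> tuple[str, str]:
    """Send low-confidence answers to human review instead of straight to the user."""
    if confidence >= threshold:
        return ("ai", answer)
    return ("human_review", answer)


def drifted(baseline: list[float], recent: list[float], tolerance: float = 0.05) -> bool:
    """Flag drift when mean quality falls more than `tolerance` below the baseline mean."""
    return mean(baseline) - mean(recent) > tolerance
```

Comparing means is the simplest possible drift check. Teams with enough volume would typically move to a statistical test or a windowed comparison, but even this version turns "monitor quality" into something that can raise an alert.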

3. Bias, Fairness, and Ethical Risk

AI systems can perpetuate or amplify biases present in training data, or acquire new ones in unintended ways through optimization processes.

Key Controls:

- Pre-deployment bias testing across protected characteristics and use cases;

- Ongoing monitoring of outcomes across demographic groups;

- Clear escalation process when bias is detected;

- Regular fairness audits by independent teams.
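
Ongoing monitoring of outcomes across demographic groups can begin with a comparison of favorable-outcome rates. The sketch below is one simple measure among many, and the input format (a list of group label and outcome pairs) is an assumption. A ratio below 0.8 is sometimes used as a screening heuristic, following the "four-fifths" rule of thumb from US employment guidance, but the right threshold and metric depend on the use case and jurisdiction.

```python
from collections import defaultdict


def favorable_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Favorable-outcome rate per group from (group, was_favorable) pairs."""
    counts = defaultdict(lambda: [0, 0])  # group -> [favorable, total]
    for group, favorable in outcomes:
        counts[group][0] += int(favorable)
        counts[group][1] += 1
    return {group: fav / total for group, (fav, total) in counts.items()}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest
```

A low ratio does not prove unfair treatment on its own. It is a trigger for the escalation process described above, where the cause can be investigated properly.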

4. Regulatory and Compliance Risk

The regulatory landscape for AI is evolving rapidly. Organizations must build regulatory readiness for generative AI even before rules are finalized.

Key Controls:

- Maintain comprehensive AI system inventory with deployment contexts;

- Document decision-making processes and AI involvement;

- Establish explainability mechanisms for regulatory inquiries;

- Monitor regulatory developments across operating jurisdictions.

5. Vendor and Third-Party Risk

Most organizations use commercial AI services, creating dependencies and exposures beyond their direct control.

Key Controls:

- Vendor due diligence including security, compliance, and service reliability;

- Contractual provisions for audit rights, data handling, and incident notification;

- Multi-vendor strategies to avoid lock-in and single points of failure;

- Regular vendor risk reassessment as services and usage evolve.

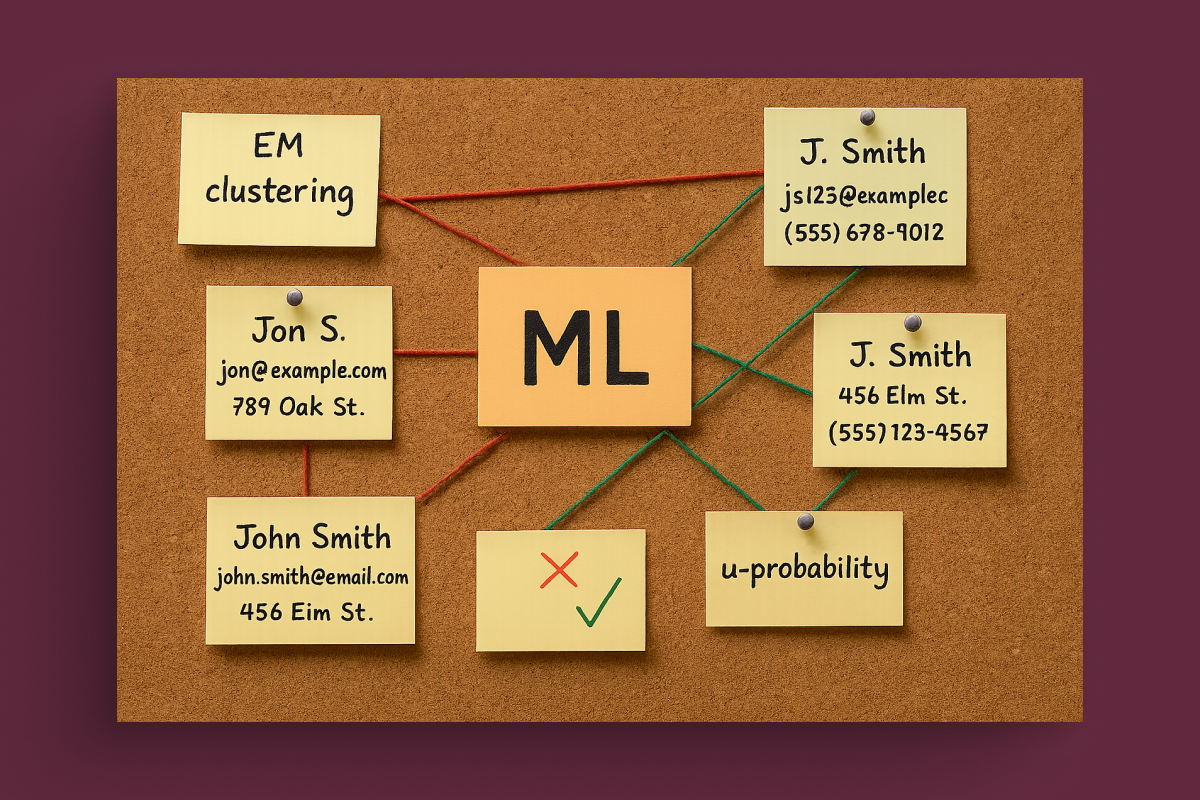

AI Policy vs AI Governance: Understanding the Distinction

Executives often conflate AI policy and AI governance, treating them as interchangeable. This confusion leads to governance failures when organizations mistake having policies for having governance.

| AI Policy | AI Governance |
|---|---|
| States what should happen | Ensures it actually happens |
| Written documents and principles | Processes, tools, and accountability structures |
| "We will not use AI for X" | Technical controls preventing X + monitoring + enforcement |
| One-time creation activity | Ongoing operational practice |
| Demonstrates intent | Demonstrates capability and follow-through |

Essential Elements of Effective AI Governance (Beyond Policy):

- Clear ownership: Named individuals accountable for governance outcomes;

- Decision rights: Explicit authority to approve, reject, or pause AI initiatives;

- Operational processes: Workflows embedding governance into daily activities;

- Technical controls: Systems enforcing policies automatically where possible;

- Monitoring and reporting: Visibility into actual AI usage and compliance;

- Continuous improvement: Mechanisms to update governance as AI and risks evolve.

Enterprise AI Compliance: Building for Regulatory Readiness

With AI regulations proliferating globally, enterprise AI compliance capabilities are shifting from nice-to-have to business-critical. The organizations navigating this landscape successfully share common characteristics.

The Compliance Preparedness Framework

Four Pillars of Regulatory Readiness

1. Comprehensive AI Inventory: Maintain a detailed registry of all AI systems, including purpose, owner, deployment context, data sources, affected populations, decision authority, risk classification, and vendor dependencies.

2. Documented Development Process: Capture evidence of requirements and specifications, data sources and quality validation, model selection rationale, testing and validation results, bias and fairness assessments, and approval decisions and authority.

3. Ongoing Monitoring and Reporting: Track operational metrics, including performance indicators, drift detection, incident reports, override patterns, user feedback, and compliance violations.

4. Governance Proof Points: Demonstrate operational governance through meeting records and decisions, training completion rates, audit results, policy violations and remediation, and risk assessment updates.
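
The inventory described in the first pillar maps naturally onto a structured record. The sketch below mirrors the attributes named there; the class name `AISystemRecord`, the field types, and the completeness check are assumptions for illustration, and in practice such records usually live in a governance tool or database and not in application code.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class AISystemRecord:
    """Hypothetical inventory entry covering the attributes listed in the first pillar."""
    name: str
    purpose: str
    owner: str
    deployment_context: str
    risk_classification: str
    data_sources: list[str] = field(default_factory=list)
    affected_populations: list[str] = field(default_factory=list)
    decision_authority: str = ""
    vendor_dependencies: list[str] = field(default_factory=list)


def missing_fields(record: AISystemRecord) -> list[str]:
    """Names of fields left empty, so incomplete entries can be followed up."""
    return [name for name, value in asdict(record).items() if not value]


record = AISystemRecord(
    name="support-bot",
    purpose="customer support assistance",
    owner="support-lead",
    deployment_context="production",
    risk_classification="moderate",
    data_sources=["support tickets"],
    affected_populations=["customers"],
)
print(missing_fields(record))  # ['decision_authority', 'vendor_dependencies']
```

A completeness check like this gives the inventory a measurable quality bar: the share of records with no missing fields is itself a governance proof point that can be reported over time.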

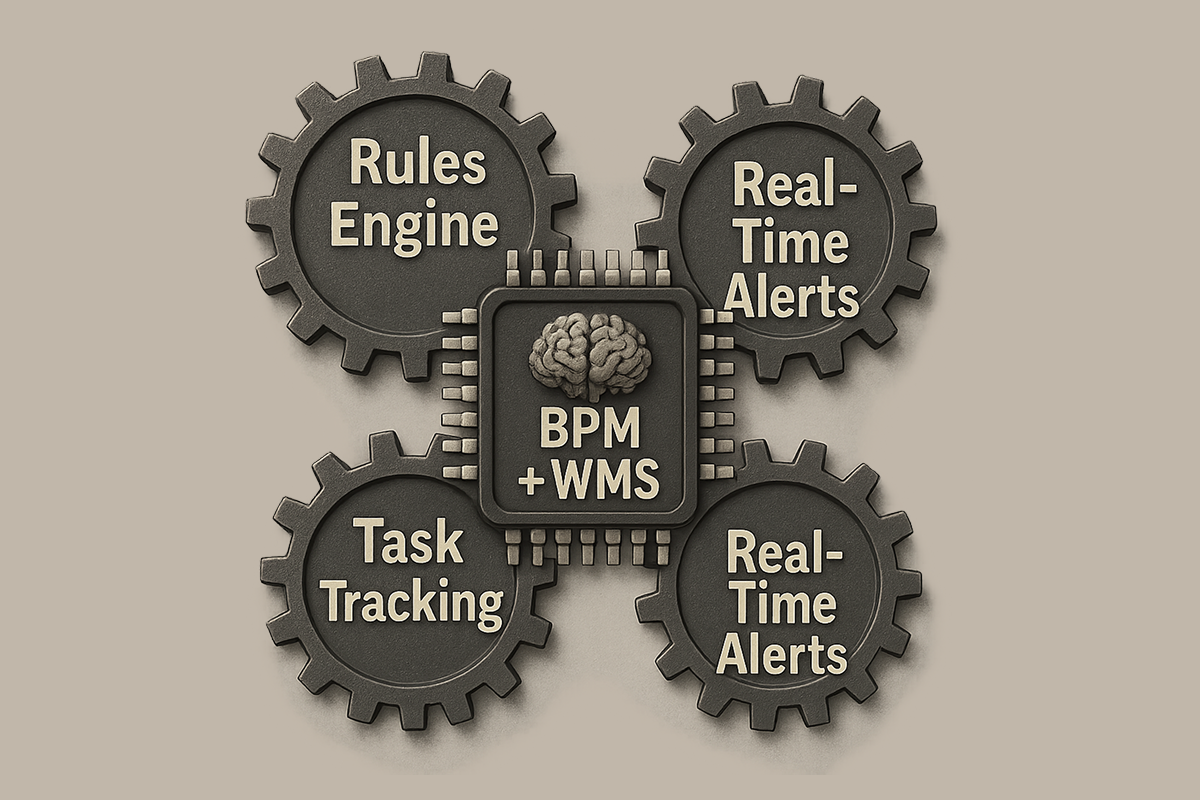

The Azati Approach: Governance That Enables, Not Restricts

Over 22 years of implementing AI systems for regulated enterprises, Azati has developed governance approaches that balance executive priorities: accelerating strategic initiatives while managing risk appropriately and maintaining regulatory compliance.

Why Leading Organizations Partner with Azati for AI Governance

- Practical Framework Design: Governance structures tested across 400+ AI implementations in highly regulated industries;

- Regulatory Expertise: Deep experience with GDPR, HIPAA, SOC 2, and financial services regulations across jurisdictions;

- Technical Implementation: Not just policy recommendations but actual controls, monitoring systems, and audit capabilities;

- Industry-Specific Adaptation: Governance frameworks customized for fintech, healthcare, and manufacturing compliance requirements;

- Rapid Deployment: 48-hour team assembly to establish governance for time-sensitive AI initiatives;

- Full-Stack Integration: Governance embedded in development workflows, not bolted on afterwards;

- Continuous Evolution: Governance that adapts as AI capabilities, regulations, and organizational needs change.

Conclusion: Governance as Competitive Advantage

The paradox of AI governance is that, done poorly, it becomes a strategic liability, either exposing organizations to unacceptable risks or preventing them from capturing AI's competitive potential. Done well, generative AI governance becomes a competitive advantage in its own right.

Organizations with mature governance can:

- Move faster on strategic AI initiatives because risk management is systematic, not ad-hoc;

- Win enterprise customers and regulated markets that demand proven governance;

- Attract and retain talent who want to work on AI responsibly;

- Adapt quickly to regulatory changes because foundations are already established;

- Scale AI deployments without proportional increases in risk exposure.

The window for establishing governance leadership is narrowing. Regulations are accelerating, stakeholder expectations are rising, and competitors with mature frameworks are capturing advantage. Organizations that treat governance as an afterthought will discover that catching up requires not just implementing policies, but rebuilding AI systems designed without governance foundations.

The question isn't whether to invest in developing an enterprise AI governance framework; it's whether you're building governance that enables your strategy or restricts it.

The Governance Imperative for 2026

- Treat governance as strategic capability, not compliance burden;

- Match governance intensity to actual risk through tiered frameworks;

- Embed controls in workflows rather than creating separate review processes;

- Build for regulatory readiness before requirements are finalized;

- Partner with teams who've built governance for regulated enterprises at scale;

- Evolve governance continuously as AI capabilities and risks develop.