Black Friday 2024 broke records again: $11.8 billion in online sales, shoppers spending $5.1 million per minute at peak, and mobile devices driving 79% of traffic. But here's what those headlines don't tell you: behind every successful holiday shopping experience stood sophisticated AI systems preventing catastrophic failures that would've cost retailers millions.

When European retailers hit 11.4 orders per second on Black Friday, a 205% jump over normal periods, traditional infrastructure crumbles. Static capacity planning can't predict when toy sales will spike 680% on Cyber Monday. Manual load management can't react fast enough when traffic surges from "normal Tuesday" to "system-melting chaos" in minutes. That's where AI transforms infrastructure from barely surviving to elegantly scaling.

Why Traditional Auto-Scaling Fails During Holiday Peaks

Let's talk about why your current infrastructure probably can't handle real holiday traffic, even if you think it can. Traditional auto-scaling operates on reactive principles — wait until metrics cross thresholds, then spin up resources. That approach works fine for gradual traffic increases but fails spectacularly during holiday surges.

The Fatal Flaws of Reactive Scaling

When Black Friday hits and traffic jumps 205% in minutes, reactive auto-scaling is always a step behind. Your monitoring detects high CPU or memory usage and triggers scaling, but by the time new instances boot (typically 2-5 minutes for cloud servers), you've already lost thousands of customers to timeout errors.

Even worse? The scaling triggers fire simultaneously across your infrastructure, creating what engineers call the "thundering herd problem": a flood of simultaneous requests that overwhelms orchestration systems and cloud APIs. I've seen scenarios where the auto-scaling system itself becomes the bottleneck, unable to provision resources fast enough because it's processing thousands of scaling events at once.
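To put a rough number on that boot-time lag, here is a back-of-the-envelope sketch. The function name, the baseline request rate, and the provisioned capacity are illustrative assumptions, not figures from a real incident; only the 205% jump and the 2-5 minute boot window come from the text above.

```python
def requests_over_capacity_during_boot(
    baseline_rps: float,
    surge_pct: float,
    capacity_rps: float,
    boot_seconds: float,
) -> float:
    """Estimate requests that exceed capacity while new instances boot.

    Assumes the surge arrives instantly and capacity stays flat until
    the new instances come online (a deliberately pessimistic model).
    """
    surge_rps = baseline_rps * (1 + surge_pct / 100)
    overflow_rps = max(0.0, surge_rps - capacity_rps)
    return overflow_rps * boot_seconds


# Hypothetical numbers: 1,000 req/s baseline, a 205% jump,
# capacity provisioned for 1,500 req/s, and a 3-minute boot time.
dropped = requests_over_capacity_during_boot(1000, 205, 1500, 180)
print(f"{dropped:,.0f} requests over capacity during boot")
```

Even with generous headroom, the overflow accumulates for every second the new instances are not yet serving traffic, which is why shaving boot time or starting early matters more than adding a faster trigger.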

The Cold Start Catastrophe

Here's what actually happens during a holiday surge with traditional scaling: your application servers start scaling, but they need 90 seconds to fully initialize. Your database connection pool is exhausted because the new servers all try to connect at once. Your CDN hasn't pre-cached the suddenly popular products. Your load balancer starts routing traffic to servers that aren't ready, creating cascading failures.

By the time everything stabilizes (if it does), you've experienced 5-10 minutes of degraded performance or outages during the absolute peak revenue period of your year. Congratulations, you just lost more money than your entire infrastructure budget.

AI-Based Anomaly Detection for Holiday User Behavior Shifts

AI anomaly detection changes the game entirely because it doesn't wait for problems; it predicts them. Machine learning models analyze historical patterns from previous holiday seasons, current trends, and real-time signals to forecast when and where traffic is likely to surge.

How AI Spots Holiday Patterns Humans Miss

During Thanksgiving 2024, up to 30% of holiday traffic was bot-driven: inventory checkers, price scrapers, and scalper bots all adding load without generating revenue. AI anomaly detection distinguishes bot patterns from human behavior, enabling selective rate limiting that protects infrastructure while allowing real customers through. This alone can reduce infrastructure needs by 20-30% during peaks.

Here's what AI anomaly detection catches that rule-based monitoring misses:

- Temporal patterns: Traffic doesn't just increase, it shifts timing. Mobile shopping peaks during commutes and lunch breaks on weekdays but dominates all hours during holidays;

- Product category cascades: When one category (like toys) spikes 680%, related categories (gift wrap, shipping services) surge 20 minutes later as customers complete their purchases;

- Geographic waves: Traffic rolls across time zones predictably during holidays. AI anticipates the wave and pre-scales regional infrastructure;

- User behavior changes: Holiday shoppers browse longer, add more items to carts, and create more database queries per transaction than normal users.

By analyzing user behavior in real time (rising cart sizes, longer sessions, increasing add-to-cart rates), AI can predict purchase waves 5–15 minutes before they hit checkout systems. That lead time is often the difference between stability and outage.
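As a minimal sketch of the idea, the detector below flags a leading indicator (here, add-to-cart events per minute) when it jumps well above its recent baseline. It uses a plain rolling z-score rather than a trained model, and the class name, window size, and threshold are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class SurgeDetector:
    """Flags a leading indicator that rises well above its recent baseline."""

    def __init__(self, window: int = 30, threshold: float = 3.0):
        self.history = deque(maxlen=window)  # recent per-minute readings
        self.threshold = threshold           # z-score that counts as a surge

    def observe(self, value: float) -> bool:
        """Record a reading; return True if it looks like the start of a surge."""
        is_surge = False
        if len(self.history) >= 5:  # need some baseline before judging
            baseline = mean(self.history)
            spread = pstdev(self.history) or 1e-9  # avoid division by zero
            is_surge = (value - baseline) / spread > self.threshold
        self.history.append(value)
        return is_surge


detector = SurgeDetector()
readings = [100, 102, 98, 101, 99, 100, 103, 160]  # add-to-cart events/min
flags = [detector.observe(r) for r in readings]
print(flags)
```

Only the final reading is flagged. A production system would combine several such signals and learn holiday-specific baselines, but the principle is the same: watch the behavior that precedes checkout load, not the load itself.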

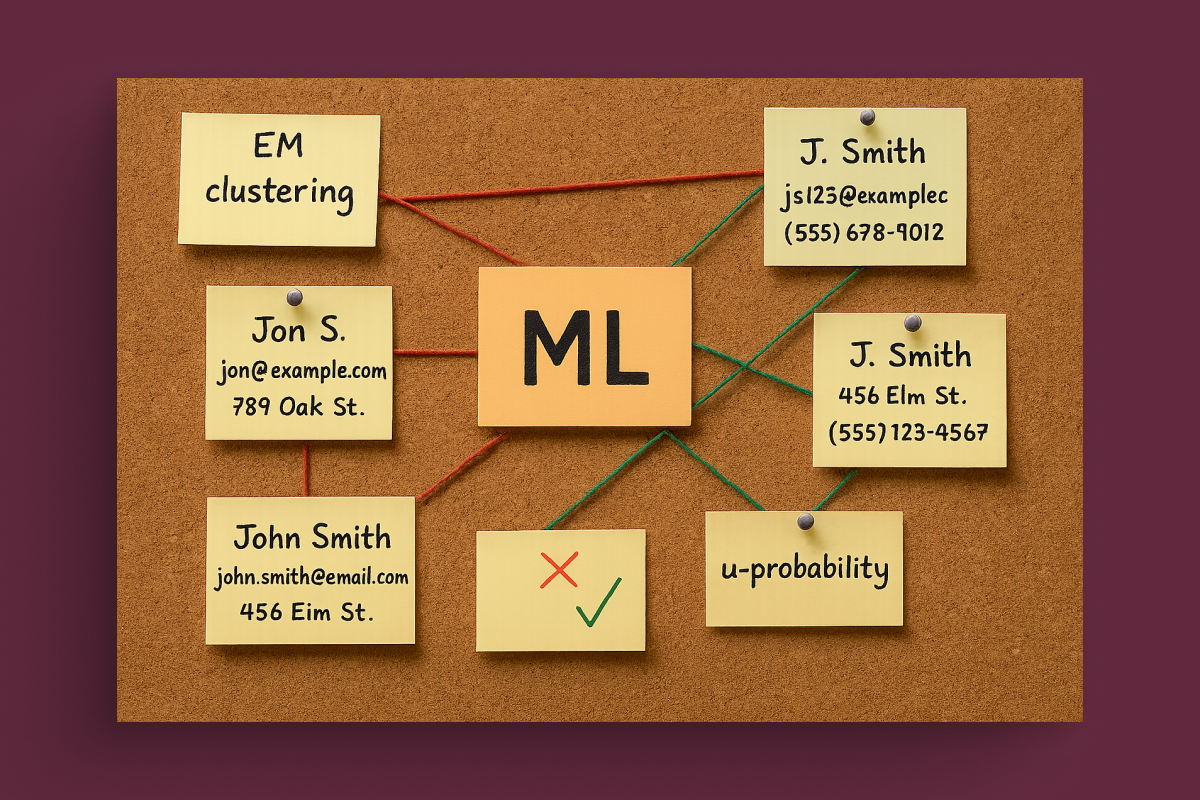

ML-Driven Capacity Planning for E-commerce, Fintech, Travel

Capacity planning software powered by machine learning transforms how organizations prepare for seasonal demand. Instead of guessing "we'll need 3x normal capacity during Black Friday," AI models precisely forecast demand patterns across different services, regions, and times.

Industry-Specific Patterns

Different industries experience wildly different holiday patterns that ML-driven capacity planning accounts for:

| Industry | Peak Characteristics | AI Planning Approach |
|---|---|---|
| E-Commerce | Sharp spikes on specific days, 205% traffic increases, mobile-first | E-commerce peak load architecture with predictive product popularity modeling, dynamic CDN allocation |
| Fintech | End-of-year transactions, tax deadline surges, payment processing spikes | Fintech seasonal demand optimization focusing on transaction throughput and fraud detection scaling |
| Travel | Booking windows 6-12 weeks before holidays, cancellation/rebooking waves | Predictive booking pattern analysis, dynamic inventory system scaling |
| Streaming Media | Simultaneous viewing during major events, bandwidth spikes | Content popularity prediction, edge caching optimization |
| Food Delivery | Meal time surges, weather-dependent ordering | Geographic demand forecasting, driver allocation optimization |

Modern systems use a three-layer forecasting model:

- Macro trends: year-over-year growth, economic signals, marketing schedules;

- Micro patterns: time-of-day effects, device mix, weather, sentiment;

- Real-time adjustments: minute-by-minute corrections based on actual traffic.
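One way to picture how the three layers combine is sketched below. This is a simplified multiplicative model under stated assumptions, not a description of any particular product: the function name, the multiplier keys, and the `correction_weight` parameter are all hypothetical.

```python
def layered_forecast(
    macro_baseline: float,
    micro_multipliers: dict[str, float],
    recent_actual: float,
    recent_forecast: float,
    correction_weight: float = 0.5,
) -> float:
    """Combine macro, micro, and real-time layers into one forecast."""
    # Macro layer: the trend-level expectation (e.g. last year plus growth).
    forecast = macro_baseline
    # Micro layer: each pattern scales the baseline up or down.
    for factor in micro_multipliers.values():
        forecast *= factor
    # Real-time layer: nudge the forecast by how far off the last one was.
    if recent_forecast > 0:
        error_ratio = recent_actual / recent_forecast
        forecast *= 1 + correction_weight * (error_ratio - 1)
    return forecast


# Hypothetical inputs: 10,000 req/min baseline, two micro effects,
# and the previous interval came in 20% above its forecast.
next_interval = layered_forecast(
    macro_baseline=10_000,
    micro_multipliers={"hour_of_day": 1.5, "mobile_mix": 1.1},
    recent_actual=12_000,
    recent_forecast=10_000,
)
print(round(next_interval))
```

The correction weight controls how aggressively live traffic overrides the plan: a value near 0 trusts the historical model, a value near 1 chases the most recent error.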

LLM-Powered Agents Adjusting Infrastructure in Real-Time

This is where things get seriously futuristic, and it's happening right now. LLM-powered agents don't just monitor and alert; they autonomously adjust CDN configurations, load balancer rules, and database parameters in real-time based on observed conditions.

How Autonomous Infrastructure Agents Work

Imagine an AI agent that understands your entire infrastructure stack and can reason about trade-offs. When traffic surges, it doesn't just scale everything, it makes intelligent decisions:

Intelligent CDN Orchestration

LLM agents analyze which products are trending, pre-cache them across edge locations geographically close to surge traffic, adjust cache TTLs dynamically, and even modify image compression based on detected network conditions. During Black Friday, this prevents origin server overload by serving 95%+ of requests from edge cache.

Smart Load Balancer Configuration

Instead of simple round-robin distribution, AI agents implement sophisticated routing, directing traffic based on instance health, current load, response time patterns, and even detected user session characteristics. Premium customers get routed to faster instances; browse-only traffic goes to cheaper ones.

Database Performance Tuning

Agents monitor query patterns and autonomously adjust database configurations, modifying connection pools, adjusting cache sizes, creating temporary indexes for popular queries, and shifting read traffic to replicas. All without human intervention, faster than any DBA could react.

Traffic Shaping & Prioritization

When systems approach limits, AI agents implement intelligent degradation: slowing non-essential features while keeping checkout flowing, deferring recommendation calculations, and simplifying search results. Users barely notice, but the system stays stable.

The Decision-Making Process

What makes LLM-powered agents different from traditional automation is their reasoning capability. They don't just execute if-then rules; they understand context and make nuanced decisions.

For example, during a traffic surge, a traditional system might blindly scale everything. An LLM agent analyzes the situation: "Traffic is up 150%, but it's mostly on product pages, not checkout. Cart abandonment is normal, payment success rate is good, database queries show heavy read activity but light writes. Conclusion: scale read replicas and CDN, but don't touch write database or payment services yet, they're handling load fine and scaling them risks introducing instability during peak revenue time."

That kind of contextual reasoning prevents over-reactions that cause more problems than they solve.
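The reasoning in that example can be written out as explicit rules. The sketch below is not how an LLM agent works internally; it is a deterministic approximation that could also serve as a guardrail for checking an agent's proposed actions. All field names and thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    traffic_increase_pct: float   # vs. normal baseline
    checkout_share: float         # fraction of requests hitting checkout
    payment_success_rate: float   # 0.0 - 1.0
    db_read_write_ratio: float    # reads per write


def plan_scaling(s: Signals) -> list[str]:
    """Return the components worth scaling for the observed signals."""
    actions: list[str] = []
    if s.traffic_increase_pct < 50:
        return actions  # nothing unusual yet
    if s.db_read_write_ratio > 10:
        actions.append("read_replicas")      # read-heavy load
    if s.checkout_share < 0.10:
        actions.append("cdn")                # mostly browsing traffic
    else:
        actions.append("checkout_services")  # buyers are arriving
    if s.payment_success_rate < 0.97:
        actions.append("payment_services")   # payments are struggling
    return actions


# The scenario from the text: +150% traffic, mostly product pages,
# healthy payments, heavy reads and light writes.
print(plan_scaling(Signals(150, 0.05, 0.99, 25)))
```

For the scenario described, the plan touches read replicas and the CDN and leaves the write database and payment services alone.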

Architecture Patterns for Resilient High-Load Systems

Let me show you the actual architectural patterns that survive holiday peaks. These aren't theoretical; they've been battle-tested across billions of dollars in transaction volume.

Pattern 1: The Predictive Scaling Architecture

Component Breakdown

- Time-Series Forecasting Layer: Machine learning models (Prophet, LSTM networks) analyze 2-3 years of historical data including previous holiday seasons, generating hourly traffic forecasts 72 hours ahead with 85-90% accuracy.

- Real-Time Correction Engine: Compares actual traffic against forecasts continuously, applying corrections to future predictions. If morning traffic exceeds forecast by 20%, afternoon predictions automatically adjust upward.

- Pre-Scaling Orchestration: Based on predictions, begins provisioning resources 15-30 minutes before anticipated surges. Servers are booted, warmed up, health-checked, and added to load balancers before traffic hits.

- Result: Cold starts are kept out of the request path, 3-5x traffic increases are handled smoothly, and infrastructure costs run up to 40% lower than with reactive scaling.
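The pre-scaling step can be sketched as turning an hourly forecast into a provisioning schedule. The function name, the per-instance throughput, the 20% headroom, and the example dates are assumptions for illustration; the 30-minute lead time is the upper end of the range mentioned above.

```python
import math
from datetime import datetime, timedelta


def prescale_plan(
    forecast_rps: dict[datetime, float],
    rps_per_instance: float,
    lead_time: timedelta = timedelta(minutes=30),
    headroom: float = 1.2,
) -> list[tuple[datetime, int]]:
    """Return (start_provisioning_at, instance_count) for each forecast hour."""
    plan = []
    for hour, rps in sorted(forecast_rps.items()):
        # Size for the forecast plus headroom, rounded up to whole instances.
        instances = math.ceil(rps * headroom / rps_per_instance)
        # Start early so boot, warm-up, and health checks finish in time.
        plan.append((hour - lead_time, instances))
    return plan


# Hypothetical forecast for two hours of a peak morning.
forecast = {
    datetime(2025, 11, 28, 9, 0): 2000.0,
    datetime(2025, 11, 28, 10, 0): 6000.0,
}
plan = prescale_plan(forecast, rps_per_instance=250)
for start, count in plan:
    print(start.isoformat(), count)
```

The real-time correction engine would feed updated forecasts into the same function, so the schedule shifts as actual traffic diverges from the prediction.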

Pattern 2: The Tiered Degradation Architecture

How It Works

- Service Classification: All services classified into tiers — critical (checkout, payment), important (search, product pages), nice-to-have (recommendations, reviews), and optional (social features, comparisons).

- Progressive Shedding: As system load increases, AI automatically degrades optional features first, then nice-to-have, preserving critical functions. Users get slightly simpler experiences but never see errors or failures.

- Automatic Recovery: Once load decreases, features automatically restore in reverse order. No manual intervention needed.

- Result: System remains stable under extreme load, maintains revenue-generating functions, dramatically reduces crash risk.
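Progressive shedding can be expressed as a small lookup from load level to enabled tiers. The tier names mirror the classification above; the specific load thresholds are hypothetical and would need tuning per system.

```python
# Tiers in the order they are shed (left to right).
TIERS = ["optional", "nice_to_have", "important", "critical"]

# Load level (0.0 - 1.0) at which each tier is switched off.
# "critical" has no threshold because it is never shed.
SHED_THRESHOLDS = {"optional": 0.70, "nice_to_have": 0.80, "important": 0.95}


def enabled_tiers(load: float) -> list[str]:
    """Return the tiers that should stay enabled at the given load level."""
    return [
        tier for tier in TIERS
        if tier == "critical" or load < SHED_THRESHOLDS[tier]
    ]


for load in (0.50, 0.75, 0.85, 0.99):
    print(load, enabled_tiers(load))
```

Because the result depends only on current load, recovery happens in reverse order as load falls. A real implementation would likely add hysteresis (separate on and off thresholds) so features don't flap when load hovers near a boundary.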

Pattern 3: The Geographic Load Shifting Architecture

Implementation

- Multi-Region Active-Active: Full application stack deployed across 3-5 geographic regions (US East, US West, Europe, Asia Pacific), all serving traffic simultaneously.

- Intelligent Traffic Routing: AI-powered global load balancer doesn't just route based on geography, it considers regional capacity, current load, network conditions, and even predicted future load when deciding where to send each request.

- Elastic Regional Scaling: When one region approaches capacity, traffic gradually shifts to other regions with available capacity while that region scales up. Users never notice the geographic shift.

- Result: Effective capacity is sum of all regions, no single region becomes a bottleneck, traffic distributes optimally across global infrastructure.
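A simplified version of capacity-aware routing is to split new traffic in proportion to each region's spare capacity. The sketch below ignores latency, network conditions, and predicted load, all of which a real global load balancer would weigh; region names and numbers are made up.

```python
def routing_weights(
    capacity: dict[str, float], load: dict[str, float]
) -> dict[str, float]:
    """Split new traffic across regions in proportion to spare capacity."""
    headroom = {
        region: max(0.0, capacity[region] - load.get(region, 0.0))
        for region in capacity
    }
    total = sum(headroom.values())
    if total == 0:
        # Every region is full: fall back to an even split.
        return {region: 1 / len(capacity) for region in capacity}
    return {region: spare / total for region, spare in headroom.items()}


# Hypothetical state: us-east is nearly saturated, the others have room.
weights = routing_weights(
    capacity={"us-east": 1000, "us-west": 1000, "europe": 1000},
    load={"us-east": 900, "us-west": 500, "europe": 600},
)
print(weights)
```

Here the saturated region receives only a tenth of new requests while it scales up, which is the gradual shift described above.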

What Actually Goes Wrong During Holiday Peaks

Let me share some real-world failure scenarios I've seen (and helped prevent) during holiday seasons. Understanding these helps you avoid repeating them.

Case Study 1: The Database Connection Pool Exhaustion

What Happened: A major retailer's site crashed 30 minutes into Black Friday despite having "plenty" of server capacity. The problem? Application servers were scaling perfectly, but nobody increased the database connection pool limits. 500 servers all trying to share 200 database connections = instant connection starvation.

The AI Solution: AI anomaly detection spotted the pattern developing: application server count rising, database connections maxed out, and a growing queue of waiting requests. It automatically increased connection pool limits in proportion to the application server count. Crisis averted before customers were affected.
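The arithmetic behind this failure is simple enough to check automatically whenever the fleet size changes. In the sketch below, the function name, the per-server pool size, and the reserved-connection count are assumptions; only the 500 servers and 200 connections come from the case study.

```python
def connection_plan(
    app_servers: int,
    pool_per_server: int,
    db_max_connections: int,
    reserved: int = 20,
) -> dict[str, int]:
    """Compare the connections the fleet can demand with the database limit."""
    # Reserve a few connections for admin and replication traffic.
    needed = app_servers * pool_per_server + reserved
    return {
        "needed": needed,
        "limit": db_max_connections,
        "shortfall": max(0, needed - db_max_connections),
    }


# The case-study fleet, assuming a small pool of 2 connections per server.
report = connection_plan(app_servers=500, pool_per_server=2, db_max_connections=200)
print(report)
```

Any non-zero shortfall means the scaling event will starve some servers of connections, so it is worth alerting on before the new instances join the pool.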

Case Study 2: The CDN Cache Stampede

What Happened: An e-commerce site promoted specific products heavily. When traffic surged, everyone requested the same product pages simultaneously. The CDN cache had expired on those exact pages (Murphy's Law). Result: thousands of simultaneous requests hit origin servers, overwhelming them instantly.

The AI Solution: A predictive model analyzed marketing campaigns and social media mentions, identified which products would be hot, and pre-cached them across all edge locations with extended TTLs before the surge. Origin servers handled less than 5% of traffic.
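The pre-caching decision boils down to ranking products by predicted demand and giving the top ones a longer TTL. The sketch below assumes the predictions already exist as a simple mapping; the product IDs, view counts, and TTL values are invented for illustration.

```python
def prewarm_plan(
    predicted_views: dict[str, int],
    top_n: int = 3,
    base_ttl_s: int = 300,
    hot_ttl_s: int = 3600,
) -> dict[str, int]:
    """Map each product to a cache TTL; predicted top sellers get the long TTL."""
    ranked = sorted(predicted_views, key=predicted_views.get, reverse=True)
    hot = set(ranked[:top_n])
    return {
        product: (hot_ttl_s if product in hot else base_ttl_s)
        for product in predicted_views
    }


# Hypothetical demand predictions for the next few hours.
ttls = prewarm_plan(
    {"lego-set": 90_000, "gift-wrap": 4_000, "console": 120_000, "socks": 800},
    top_n=2,
)
print(ttls)
```

The products with the long TTL are also the ones to push to edge locations ahead of time, so their cache entries cannot expire at the moment the promotion lands.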

Case Study 3: The Payment Gateway Bottleneck

What Happened: A travel booking site scaled everything perfectly, except they forgot that payment processing is often handled by third-party services with rate limits. When bookings surged, the payment gateway started throttling requests, causing timeouts and angry customers.

The AI Solution: Infrastructure automation detected payment gateway response times increasing, automatically implemented request queuing and retry logic, spread payment processing over a longer time window, and brought backup payment providers online. That converted a potential disaster into a minor slowdown.
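The retry-and-spread part of that fix is commonly implemented as exponential backoff with jitter. The sketch below is one such version; `GatewayThrottled` and the `charge` callable stand in for whatever your payment client actually raises and exposes, so treat them as placeholders.

```python
import random
import time


class GatewayThrottled(Exception):
    """Raised by the (hypothetical) payment client when it is rate limited."""


def charge_with_backoff(charge, max_attempts: int = 5, base_delay: float = 0.5,
                        max_delay: float = 8.0, sleep=time.sleep):
    """Call `charge()`; on throttling, wait with exponential backoff plus jitter."""
    for attempt in range(max_attempts):
        try:
            return charge()
        except GatewayThrottled:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))  # full jitter spreads retries out


# Demo with a fake gateway that throttles twice, then succeeds.
attempts = {"count": 0}


def flaky_charge():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise GatewayThrottled()
    return "charged"


result = charge_with_backoff(flaky_charge, sleep=lambda seconds: None)
print(result, "after", attempts["count"], "attempts")
```

The random jitter matters as much as the backoff: without it, every throttled request retries at the same instant and the gateway sees the same spike again.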

AIOps: The Orchestration Layer

AIOps (Artificial Intelligence for IT Operations) is the orchestration layer that ties everything together: monitoring, prediction, scaling, and remediation all working in concert.

The AIOps Feedback Loop

- Continuous Monitoring: Collecting metrics from every layer: application, infrastructure, network, user experience

- Pattern Recognition: Machine learning identifying normal patterns, anomalies, and early warning signs

- Predictive Analytics: Forecasting future states based on current trends and historical patterns

- Automated Remediation: Taking corrective action without human intervention when confidence is high

- Human Escalation: Alerting engineers with full context when situations require human judgment

- Continuous Learning: Every incident, action, and outcome feeds back into models, improving future responses
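The split between automated remediation and human escalation usually comes down to a confidence gate. Here is a minimal sketch of that gate; the anomaly names, playbook names, and the 0.9 threshold are hypothetical.

```python
def route_incident(
    anomaly: str,
    confidence: float,
    playbooks: dict[str, str],
    auto_threshold: float = 0.9,
) -> tuple[str, str]:
    """Return ("auto", playbook) or ("escalate", reason) for a detected anomaly."""
    playbook = playbooks.get(anomaly)
    if playbook is None:
        # Novel situation: no known fix, so a human needs to look.
        return ("escalate", "no playbook for this anomaly")
    if confidence < auto_threshold:
        return ("escalate", f"confidence {confidence:.2f} below threshold")
    return ("auto", playbook)


PLAYBOOKS = {"db_pool_exhaustion": "raise_pool_limits"}

print(route_incident("db_pool_exhaustion", 0.96, PLAYBOOKS))
print(route_incident("db_pool_exhaustion", 0.60, PLAYBOOKS))
print(route_incident("unknown_latency_spike", 0.99, PLAYBOOKS))
```

Logging every decision and its outcome is what closes the loop: those records become the training data for the continuous learning step.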

The ROI of AIOps During Holidays

Let's talk numbers. Implementing comprehensive AIOps for holiday traffic management typically costs $200,000-$500,000 for enterprise-scale deployments (software, integration, training). But consider the returns:

- Downtime prevention: One hour of downtime during Black Friday costs retailers $500,000-$2 million. AIOps reduces unplanned downtime by 70-80%

- Infrastructure optimization: 30-40% reduction in cloud spending through right-sizing and predictive scaling

- Engineering efficiency: 60% reduction in time spent firefighting, allowing engineers to focus on revenue-generating features

- Customer satisfaction: Improved performance leads to higher conversion rates (a commonly cited rule of thumb is roughly a 1% conversion gain for every 100ms of latency removed)

Most organizations achieve full ROI within a single holiday season.
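You can sanity-check that claim against your own numbers with a few lines of arithmetic. In the sketch below, the deployment cost, hourly downtime cost, and percentage reductions are taken from the ranges above; the baseline downtime hours and the seasonal cloud spend are assumptions you would replace with your own figures.

```python
def seasonal_net_benefit(
    deployment_cost: float,
    downtime_cost_per_hour: float,
    baseline_downtime_hours: float,
    downtime_reduction: float,
    seasonal_cloud_spend: float,
    cloud_savings: float,
) -> float:
    """Return savings minus deployment cost for a single holiday season."""
    avoided_downtime = (
        downtime_cost_per_hour * baseline_downtime_hours * downtime_reduction
    )
    infra_savings = seasonal_cloud_spend * cloud_savings
    return avoided_downtime + infra_savings - deployment_cost


# Conservative case: high-end cost, low-end downtime cost and reductions.
net = seasonal_net_benefit(
    deployment_cost=500_000,
    downtime_cost_per_hour=500_000,
    baseline_downtime_hours=2,       # assumption
    downtime_reduction=0.70,
    seasonal_cloud_spend=300_000,    # assumption
    cloud_savings=0.30,
)
print(f"Net benefit: ${net:,.0f}")
```

Under these assumptions the season comes out roughly $290,000 ahead. The result is very sensitive to the baseline downtime figure, so a site that rarely goes down will take longer to break even.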

Building Your Holiday-Ready Architecture

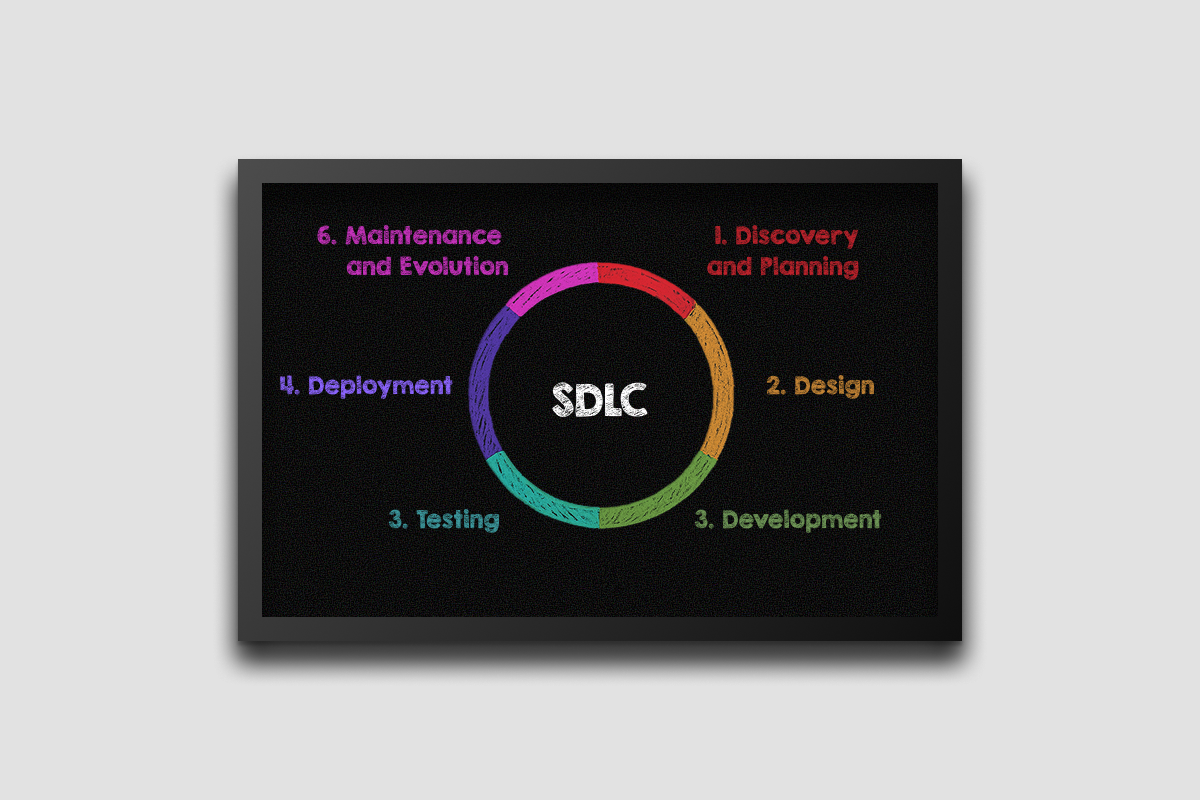

Ready to implement these patterns? Here's a practical roadmap for building resilient architecture that survives holiday peaks:

Phase 1: Observability Foundation (2-3 months before peak)

- Implement comprehensive monitoring across all infrastructure layers;

- Set up distributed tracing to understand request flows end-to-end;

- Establish baseline performance metrics for normal traffic;

- Deploy real user monitoring (RUM) to understand actual customer experience;

- Create dashboards showing key business metrics alongside technical metrics.

Phase 2: Predictive Modeling (6-8 weeks before peak)

- Gather historical data from previous holiday seasons;

- Train machine learning models on traffic patterns, user behavior, conversion rates;

- Generate hour-by-hour forecasts for upcoming holiday period;

- Validate predictions against holdout data from previous years;

- Establish confidence intervals and plan for worst-case scenarios.
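For the validation step, a common metric is mean absolute percentage error (MAPE) on the holdout period. The sketch below is a plain implementation with made-up hourly values; reading an "85-90% accuracy" target as a MAPE of 10-15% is an interpretation, not something the forecasting tools define for you.

```python
def mape(actual: list[float], forecast: list[float]) -> float:
    """Mean absolute percentage error, skipping hours with zero actual traffic."""
    pairs = [(a, f) for a, f in zip(actual, forecast) if a != 0]
    if not pairs:
        raise ValueError("no non-zero actuals to compare against")
    return 100 * sum(abs(a - f) / abs(a) for a, f in pairs) / len(pairs)


# Hypothetical holdout: last year's actual hourly traffic vs. the model's forecast.
holdout_actual = [1000.0, 2000.0, 4000.0]
holdout_forecast = [900.0, 2200.0, 3600.0]
error = mape(holdout_actual, holdout_forecast)
print(f"MAPE: {error:.1f}%")
```

Compute the error separately for peak hours as well as overall: a model that is accurate on quiet nights but misses the Black Friday morning spike will still look fine on an all-hours average.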

Phase 3: Infrastructure Preparation (4-6 weeks before peak)

- Implement ML-based predictive auto-scaling driven by forecasts, not just reactive metrics;

- Configure load balancer health checks and traffic distribution rules;

- Pre-warm CDN caches with predicted popular content;

- Establish database read replicas and connection pool policies;

- Deploy chaos engineering tests to validate resilience.

Phase 4: AI Agent Deployment (2-4 weeks before peak)

- Deploy AI anomaly detection systems with holiday-specific baselines;

- Configure LLM-powered agents for autonomous infrastructure management;

- Set up automated remediation playbooks for common failure scenarios;

- Establish escalation paths when AI confidence is low or situations are novel;

- Run tabletop exercises simulating various failure scenarios.

Phase 5: Live Operation & Learning

- Monitor AI predictions versus actual traffic continuously;

- Allow AI systems to operate autonomously where confidence is high;

- Manually intervene only when necessary (and document for AI learning);

- Capture all metrics, decisions, and outcomes for post-season analysis;

- Conduct thorough post-mortem to improve next year's models.

Performance Optimization Through AI

Performance optimization during holiday periods isn't just about handling more load, it's about delivering better experiences under pressure. AI enables optimizations impossible for humans to manage manually:

Dynamic Query Optimization

AI analyzes which database queries slow down under load, automatically creates temporary indexes for holiday-specific query patterns, adjusts query execution plans based on current data distribution, and even rewrites problematic queries on the fly.

Intelligent Caching Strategies

Instead of static cache rules, AI determines optimal cache TTLs based on content type, update frequency, traffic patterns, and business importance. Product pages for hot items get cached longer; checkout pages get shorter TTLs to ensure fresh inventory data.

Adaptive Asset Delivery

AI monitors network conditions and device capabilities, serving appropriately sized images — high-res to fast connections, compressed to mobile users on cellular. This balances visual quality with load times, optimizing both experience and infrastructure usage.

Predictive Preloading

Machine learning predicts which pages users will visit next based on browsing patterns, preloading resources in the background. Users experience instant page transitions even under heavy load because content is already cached locally.
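A very small version of next-page prediction is a first-order Markov model: count which page tends to follow which, then preload the most frequent successors. This is a stand-in for whatever model a production system would use, and the session data and page names are invented.

```python
from collections import Counter, defaultdict


def build_transitions(sessions: list[list[str]]) -> dict[str, Counter]:
    """Count how often each page is followed by each other page."""
    transitions: dict[str, Counter] = defaultdict(Counter)
    for pages in sessions:
        for current, nxt in zip(pages, pages[1:]):
            transitions[current][nxt] += 1
    return transitions


def pages_to_preload(
    transitions: dict[str, Counter], current_page: str, k: int = 2
) -> list[str]:
    """Return the k most likely next pages for the current page."""
    followers = transitions.get(current_page, Counter())
    return [page for page, _ in followers.most_common(k)]


# Hypothetical browsing sessions.
sessions = [
    ["home", "toys", "cart"],
    ["home", "toys", "lego"],
    ["home", "deals", "cart"],
    ["home", "toys", "cart"],
]
model = build_transitions(sessions)
print(pages_to_preload(model, "home"))
print(pages_to_preload(model, "toys", k=1))
```

Keeping `k` small matters under load, since every preloaded page that the user never visits is wasted bandwidth and an extra request against already busy infrastructure.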

The Technology Stack for AI-Driven Load Management

Let's get specific about the actual technologies powering AI peak load management:

Monitoring & Observability

- Datadog, New Relic, Dynatrace: APM platforms with AI-powered anomaly detection;

- Prometheus + Grafana: Open-source monitoring with ML alerting via tools like Grafana IRM;

- Elastic Observability: Logs, metrics, and traces with machine learning anomaly detection;

- Splunk: Log analytics with predictive capabilities through Splunk ITSI.

Predictive Scaling & Capacity Planning

- AWS Predictive Scaling: Native AWS feature using machine learning for EC2 auto-scaling;

- Google Cloud AI-powered scaling: Integrated with GKE for container orchestration;

- Azure Autoscale: ML-enhanced scaling for Azure resources;

- KEDA (Kubernetes Event-driven Autoscaling): Event-driven autoscaling on external and custom metrics, which can include forecast values.

Load Balancing & Traffic Management

- NGINX Plus: Advanced load balancing with API-driven dynamic configuration;

- HAProxy: High-performance load balancing with runtime API;

- Envoy Proxy: Modern proxy with sophisticated traffic management capabilities;

- Cloudflare Load Balancing: Global load balancing with AI-driven traffic steering.

AIOps Platforms

- Moogsoft: AI-driven incident management and correlation;

- BigPanda: Event correlation and automated remediation;

- PagerDuty AIOps: Incident intelligence with machine learning;

- IBM Watson AIOps: Enterprise-grade AI for IT operations.

Future Trends: Where AI Infrastructure Is Heading

Looking ahead, several emerging technologies will further transform how we handle seasonal traffic:

Self-Healing Infrastructure

Future systems won't just detect and scale, they'll automatically repair themselves. AI will identify degraded components, provision replacements, migrate workloads, and restore full functionality without human awareness of the issue.

Quantum-Inspired Optimization

Quantum-inspired optimization algorithms, which run on classical computers, could tackle complex resource allocation problems that are hard for traditional optimizers to solve quickly. Imagine distributing workload near-optimally across thousands of servers in milliseconds.

Edge AI for Microsecond Decisions

Instead of centralized AI making decisions, edge nodes will run local AI models that make traffic routing decisions in microseconds based on real-time conditions, faster than a round trip to a centralized system would allow.

Autonomous Infrastructure Ecosystems

Multiple AI agents will collaborate (one managing compute, another databases, another CDN, another security), negotiating optimal configurations through multi-agent reinforcement learning. The system evolves strategies humans never imagined.

Key Takeaways for Holiday Success

If you remember nothing else from this article, remember these critical points about handling holiday traffic surges:

- Prediction beats reaction: ML-driven predictive auto-scaling starts provisioning resources before surges hit, preventing the cold-start problems that crash reactive systems;

- Behavior matters more than metrics: AI anomaly detection analyzing user behavior patterns predicts purchase waves 5-15 minutes before they hit checkout systems, well ahead of what infrastructure metrics alone would show;

- Context enables intelligence: LLM-powered agents making reasoned decisions based on full system context outperform rigid rule-based automation;

- Graceful degradation beats heroic recovery: Planned feature reduction under load keeps core functionality working versus catastrophic failure requiring emergency fixes;

- Cost optimization and reliability aren't trade-offs: AI-driven capacity planning reduces costs 30-40% while improving reliability through precise, targeted scaling.

Wrapping Up: The AI Infrastructure Imperative

Retailers who captured Black Friday revenue weren’t those with the largest infrastructure budgets; they were the ones with the smartest infrastructure. AI peak load management turns holiday operations from reactive firefighting into proactive orchestration.

Traffic surges of 205%, 11.4 orders per second, and millions per minute are no longer edge cases; they’re the norm. Predictive auto-scaling, anomaly detection, and LLM-powered agents are the foundation separating success from failure during peak demand.

Holiday peaks won’t wait. The question isn’t whether AI will transform infrastructure operations — it already has. The question is whether you’ll adopt it before your next peak season, or learn its value the expensive way.