If you are interested in the development of a custom solution — send us the message and we'll schedule a talk about it.

The underwriting process, which is at the core of insurance operations, requires considerable expertise and effort, yet it must also be reliable and fast. Until recently, meeting all of these requirements at once was considered difficult.

With machine learning tools and computing capacity steadily improving, this has now become achievable. For one of our clients, an insurance carrier, Azati recently implemented a digital assistant for underwriters that significantly helped automate the underwriting decision-making process.

The purpose of the assistant is to make decision-making simple, quick and efficient. The machine learning based predictive model processes newly submitted policy applications and calculates a score for each one. The score is then translated into a human-readable recommendation to approve, decline or manually review the application, accompanied by statistics on how effective similar decisions have historically been for a similar segment of applications. This gives the underwriter the data needed to make a quick, informed decision. The mechanics of the process are described in the technical section below. The predictive models were trained on historical data consisting of application submissions, underwriting decisions, kick-out rules, corresponding policies and related claims. The subsequent implementation phase resulted in full automation of underwriting for a sizable share of new submissions.

The drawbacks of the traditional underwriting process:

Accordingly, the “old way” can be improved in the following respects:

These considerations and assessments laid the foundation for the policy application decision assistant.

Each newly submitted application processed by the digital assistant is classified as ready to be approved, to be reviewed further, or likely to be declined.

The interface also includes an additional panel with a statistical assessment of how effective similar decisions have been over time for a similar segment of applications; it is outside the scope of this article.

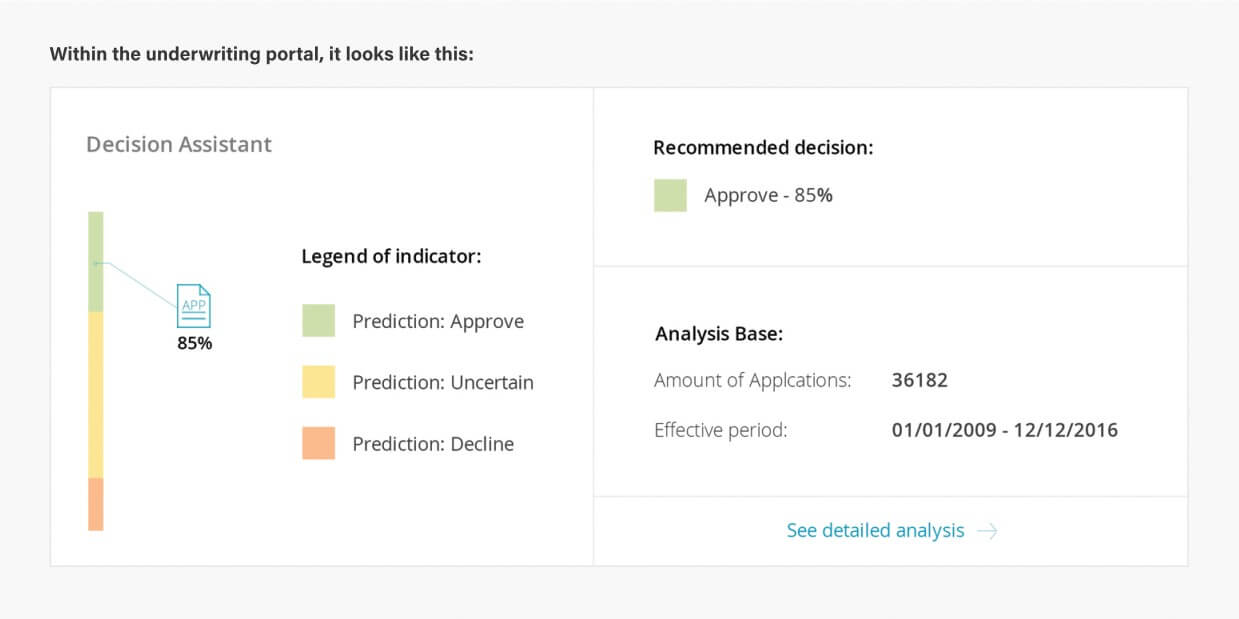

After assessing a newly submitted policy application, the system assigns it a score that indicates the probability of the application being approved or declined. The system administrator defines how scores are interpreted by setting the thresholds of three score zones: “ready to be approved” (green zone), “to be reviewed further” (yellow zone) and “high probability to be declined” (orange zone).

The thresholds are configurable: the administrator can promote more applications for approval at a slightly greater risk, or tighten risk tolerance and promote a smaller pool of applications for approval. For our client, the thresholds were set differently for each line of business, depending on the comfort level of the decision makers.
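The zone logic can be sketched in Python as below. This is a minimal illustration, not the client system's code: it assumes, as the examples that follow suggest, that zone boundaries are expressed as percentiles of the score distribution and that a higher score means a higher likelihood of approval. The function `assign_zones` and its parameters `orange_upper` and `green_lower` are hypothetical names.

```python
from bisect import bisect_left


def assign_zones(scores, orange_upper=10.0, green_lower=50.0):
    """Map each score to a zone using percentile-rank thresholds (hypothetical sketch).

    A score's percentile rank is the share of all scores strictly below it, in percent.
    Ranks in [0, orange_upper) -> "orange", ranks >= green_lower -> "green",
    everything in between -> "yellow".
    """
    if not 0 <= orange_upper <= green_lower <= 100:
        raise ValueError("expected 0 <= orange_upper <= green_lower <= 100")
    ordered = sorted(scores)
    n = len(ordered)
    zones = []
    for s in scores:
        rank = 100.0 * bisect_left(ordered, s) / n
        if rank < orange_upper:
            zones.append("orange")
        elif rank >= green_lower:
            zones.append("green")
        else:
            zones.append("yellow")
    return zones


scores = [0.12, 0.95, 0.40, 0.88, 0.67, 0.05, 0.73, 0.55, 0.91, 0.30]
print(assign_zones(scores))
# -> ['yellow', 'green', 'yellow', 'green', 'green', 'orange', 'green', 'yellow', 'green', 'yellow']
```

Lowering `green_lower` (for example to 25) promotes more applications to the green zone at a higher risk, while raising it shrinks the auto-approval pool.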

The four examples below illustrate how changing the thresholds affects the accuracy of decision prediction and the volume of applications falling into each score zone.


Example 1 — Approval (green) zone adjustment

If the “green” zone ranges from 50% to 100% (meaning that about half of all new submissions are expected to be approved without additional review), then of all applications that were actually declined by human underwriters, the digital assistant detected 99.5%, classifying them into the yellow or orange zone depending on the relevant historical data. Only about 0.5% of actually declined applications ended up in the “green” zone, which is within acceptable limits according to the historical loss statistics.

Thus the recommendation quality is high, which allows auto-approving a reasonable share of new submissions (about half) at an acceptably low risk.

Example 2 — Approval (green) zone adjustment

If the “green” zone ranges from 25% to 100%, the digital assistant detects about 94-95% of the applications that were actually declined by human underwriters, letting about 5-6% of them into the “green” zone, which is again within acceptable limits according to the historical loss statistics.

Thus the recommendation quality is technically lower than in Example 1, but this setting allows auto-approving a much larger share of new submissions (about three quarters) at a slightly higher, yet still acceptable, risk.
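The trade-off in Examples 1 and 2 can be measured on historical applications whose outcome is already known. The hypothetical helper below is a sketch under the same percentile-threshold assumption (higher score means more likely to be approved); it reports the share of applications that would be auto-approved and how many actually declined applications would be caught or would leak into the green zone. The toy data is invented for illustration.

```python
from bisect import bisect_left


def green_zone_tradeoff(scores, declined, green_lower):
    """Evaluate a green-zone lower bound (a percentile rank) on decided history.

    scores      -- model scores; higher is assumed to mean "more likely approved"
    declined    -- True where the underwriter actually declined the application
    green_lower -- percentile rank at which the green zone starts (e.g. 50)

    Returns (share of all applications that would be auto-approved,
             share of declined applications kept out of the green zone,
             share of declined applications leaked into the green zone).
    """
    n_declined = sum(declined)
    if n_declined == 0:
        raise ValueError("need at least one declined application")
    ordered = sorted(scores)
    n = len(ordered)
    # Percentile rank = share of scores strictly below this one, in percent.
    in_green = [100.0 * bisect_left(ordered, s) / n >= green_lower for s in scores]
    leaked = sum(1 for g, d in zip(in_green, declined) if g and d)
    auto_approved = sum(in_green) / n
    return auto_approved, 1 - leaked / n_declined, leaked / n_declined


# Invented toy history: 20 applications, 5 of them declined.
scores = [i / 20 for i in range(20)]
declined = [i in {0, 1, 2, 5, 12} for i in range(20)]
for green_lower in (50, 25):
    approved, detected, leaked = green_zone_tradeoff(scores, declined, green_lower)
    print(f"green from {green_lower}%: auto-approve {approved:.0%}, "
          f"detected {detected:.0%}, leaked {leaked:.0%}")
```

On real data the same sweep over several `green_lower` values shows how much extra auto-approval volume each step buys in exchange for leaked declines.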

Example 3 — Decline (orange) zone adjustment

If the “orange” zone ranges from 0% to 10% (meaning that about 10% of all new submissions are expected to be high risk), the digital assistant classifies 80% of the applications that were actually declined by human underwriters into the orange “high risk” zone (and the remaining 20% into the yellow “manual review required” zone). At the same time, these actually declined applications make up only about half of all applications in the orange zone; the other half are applications that were approved by human underwriters.

Thus the system detects most “high risk” applications (80% of the actually declined ones), but a fairly large volume of “normal” applications may be incorrectly tagged as “high risk”.

Example 4 — Decline (orange) zone adjustment

If the “orange” zone ranges from 0% to 5%, the digital assistant classifies 60% of the applications that were actually declined by human underwriters into the orange “high risk” zone (and the remaining 40% into the yellow “manual review required” zone). This time, however, these detected declined applications make up about 85% of all applications in the orange zone. The remaining 15% of the orange zone are applications that were approved by human underwriters.

Thus fewer “high risk” applications are detected (60% of the actually declined ones), but the orange pool now consists mostly of truly “high risk” applications.
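The orange-zone figures in Examples 3 and 4 follow from simple arithmetic. Assuming the ~7% decline rate of the training data also holds for new submissions, the share of actually declined applications inside the orange zone equals `decline_rate * recall / orange_share`. The sketch below (`orange_zone_precision` is a hypothetical name) reproduces the “about half” and “85%” figures approximately.

```python
def orange_zone_precision(decline_rate, orange_share, recall):
    """Share of orange-zone applications that were actually declined.

    decline_rate -- share of all applications declined by underwriters (~7% here)
    orange_share -- share of all applications placed in the orange zone
    recall       -- share of declined applications that land in the orange zone
    """
    if not 0 < orange_share <= 1:
        raise ValueError("orange_share must be in (0, 1]")
    # Declined apps caught in orange, divided by everything in orange.
    return decline_rate * recall / orange_share


for orange_share, recall in [(0.10, 0.80), (0.05, 0.60)]:
    p = orange_zone_precision(0.07, orange_share, recall)
    print(f"orange {orange_share:.0%}: recall {recall:.0%}, precision {p:.0%}")
# -> orange 10%: recall 80%, precision 56%
# -> orange 5%: recall 60%, precision 84%
```

Shrinking the orange zone trades recall for precision: fewer declines are caught, but a larger fraction of what is flagged is genuinely high risk.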

The training data consisted of ~140K applications for each specific product, ~10K of which were known to have been declined by underwriters. During training, the algorithm received the following information:

As a result of training, the system learned to predict approval decisions for new application submissions.

Training pipeline:

- “Raw” input data: applications (XML) and underwriters’ quotes
- Set of features: more than 1,000 features were found
- Recursive feature elimination (RFECV algorithm): ~300 features remained
- Classifier: ensemble of decision trees (XGBoost algorithm)
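The feature-selection and training steps above can be approximated with scikit-learn, as in the sketch below. Several assumptions apply: synthetic data from `make_classification` stands in for the extracted application features, `GradientBoostingClassifier` stands in for XGBoost (`xgboost.XGBClassifier` also exposes `feature_importances_` and could be passed to `RFECV` instead), and the feature counts, step size and fold count are illustrative rather than the project's settings.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.feature_selection import RFECV
from sklearn.model_selection import StratifiedKFold

# Synthetic stand-in for the extracted features: 30 columns, ~7% positives
# (positive = declined application, as in the metrics section).
X, y = make_classification(
    n_samples=1000, n_features=30, n_informative=8, n_redundant=4,
    weights=[0.93], random_state=0,
)

# Tree ensemble standing in for XGBoost.
booster = GradientBoostingClassifier(n_estimators=50, max_depth=3, random_state=0)

# Recursive feature elimination with cross-validation, scored by ROC AUC:
# repeatedly drop the least important features and keep the best-scoring subset.
selector = RFECV(
    estimator=booster,
    step=2,
    cv=StratifiedKFold(n_splits=3, shuffle=True, random_state=0),
    scoring="roc_auc",
    min_features_to_select=5,
)
selector.fit(X, y)

kept = np.flatnonzero(selector.support_)
print(f"kept {selector.n_features_} of {X.shape[1]} features: {kept.tolist()}")

# selector.estimator_ is the ensemble refit on the selected features;
# predict_proba applies the feature mask first. Column 1 = P(declined).
decline_proba = selector.predict_proba(X)[:, 1]
```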

To measure the algorithm's performance, we used the ROC AUC metric.

The dataset is highly imbalanced: applications to approve far outnumber those to decline, which account for only ~7% of the total. When confronted with class imbalance, one of two metrics is commonly used: ROC AUC (the area under the receiver operating characteristic curve) or PR AUC (the area under the precision-recall curve).

The following comparison between ROC AUC and PR AUC explains why ROC AUC is more suitable in our case. The desired quality indicators are:

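- recall (true positive rate), $\mathrm{TPR} = \frac{TP}{TP + FN}$, should be high;
- precision, $\frac{TP}{TP + FP}$, should be high;
- false positive rate, $\mathrm{FPR} = \frac{FP}{FP + TN}$, should be as low as possible.

Here TP is the number of true positives, FP the number of false positives, TN the number of true negatives and FN the number of false negatives.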
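For reference, the three indicators can be computed from confusion-matrix counts as in this small Python sketch. The class `Confusion` and the counts are hypothetical, chosen only to match a 140K-application dataset with 10K declines and 99% recall.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Confusion-matrix counts; "positive" means the application was declined."""
    tp: int  # declined applications predicted as declined
    fp: int  # approved applications predicted as declined
    tn: int  # approved applications predicted as approved
    fn: int  # declined applications predicted as approved

    def recall(self) -> float:
        return self.tp / (self.tp + self.fn)

    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    def false_positive_rate(self) -> float:
        return self.fp / (self.fp + self.tn)


# Hypothetical counts: 140,000 applications, 10,000 of them declined.
c = Confusion(tp=9_900, fp=26_000, tn=104_000, fn=100)
print(f"recall={c.recall():.3f} precision={c.precision():.3f} "
      f"fpr={c.false_positive_rate():.3f}")
# -> recall=0.990 precision=0.276 fpr=0.200
```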

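Here we consider application rejection as the positive result, while the negative result corresponds to application approval. ROC AUC depends on the following factors: recall, $\mathrm{TPR} = \frac{TP}{TP + FN}$, and the false positive rate, $\mathrm{FPR} = \frac{FP}{FP + TN}$.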

For our case, $\mathrm{FPR} = \frac{FP}{FP + TN} \approx \frac{FP}{TP + FP + TN + FN}$ is an acceptable approximation: as TP + FN covers only about 7% of all results, TP and FN can be neglected in the denominator. Also, FN will be very low in the case of high recall (99%).
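The size of this approximation error can be made explicit. Writing $N = TP + FP + TN + FN$ for the total number of applications and $\pi = (TP + FN)/N \approx 0.07$ for the share of declined ones:

$$
\mathrm{FPR} = \frac{FP}{FP + TN} = \frac{FP}{N - (TP + FN)} = \frac{FP}{(1 - \pi)\,N} \approx 1.075 \cdot \frac{FP}{N}
$$

So with a ~7% decline rate the two quantities differ only by a constant factor of $1/(1 - \pi) \approx 1.075$.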

As for PR AUC, it depends on the following factors: recall, $\frac{TP}{TP + FN}$, and precision, $\frac{TP}{TP + FP}$. This metric uses recall in the same sense as ROC AUC, so the only difference is precision.

Therefore, the ROC AUC metric was chosen to assess the algorithm's quality, and we used stratified 10-fold cross-validation to estimate it. For our algorithm, we obtained ROC AUC = 0.955 (95.5%), which is regarded as a very high value.
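A stratified 10-fold evaluation of this kind can be sketched with scikit-learn's `cross_validate`, reporting both ROC AUC and average precision (a common step-wise estimate of PR AUC) for comparison. The data is synthetic with ~7% positives and `GradientBoostingClassifier` stands in for XGBoost, so the printed numbers will not match the 0.955 reported for the real model.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import StratifiedKFold, cross_validate

# Synthetic, imbalanced stand-in data (~7% positives = declined applications).
X, y = make_classification(
    n_samples=2000, n_features=20, n_informative=6,
    weights=[0.93], random_state=42,
)

# Stratified folds keep the ~7% decline rate in every fold.
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=42)

results = cross_validate(
    GradientBoostingClassifier(n_estimators=100, random_state=42),
    X, y, cv=cv,
    scoring={"roc_auc": "roc_auc", "pr_auc": "average_precision"},
)

roc = results["test_roc_auc"]
pr = results["test_pr_auc"]
print(f"ROC AUC: {roc.mean():.3f} +/- {roc.std():.3f}")
print(f"PR AUC (average precision): {pr.mean():.3f} +/- {pr.std():.3f}")
```

Stratification matters here: with only ~7% positives, an unstratified split could leave a fold with too few declined applications to estimate either metric reliably.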

Our client's insurance company faced bottlenecks in the policy underwriting process, which depended heavily on manual decision-making. Fewer than 30% of new application submissions went “straight through” (i.e., with no human interaction on the carrier's side during policy underwriting), being processed by the legacy rule-based algorithm. The company wanted to increase straight-through processing of new policy applications, allowing its underwriters to focus on high-value and non-typical cases.

After the digital assistant had run in manual mode for about 4 months (only helping human underwriters make informed decisions, without making any decisions automatically), it showed outstanding results. The client company decided to adopt the system and automatically approve new submissions falling into the “green zone”. Even though the accuracy thresholds were set quite conservatively, this resulted in:
